The scanned version of Jensen’s book, Bias in Mental Testing, is available as a PDF. If you want my version though, email me at m h 1 9 8 7 0 4 1 0 @ g m a i l . c o m.

Bias in Mental Testing

Arthur R. Jensen (1980)

CONTENT

Ch.6 Do IQ Tests Really Measure Intelligence?

A Realistic Example of Factor Analysis

Ch.7 Reliability and Stability of Mental Measurements

Causes of Score Instability

Ch.8 Validity and Correlates of Mental Tests

Occupational Level, Performance, and Income

Ch.9 Definitions and Criteria of Test Bias

Ch.10 Bias in Predictive Validity: Empirical Evidence

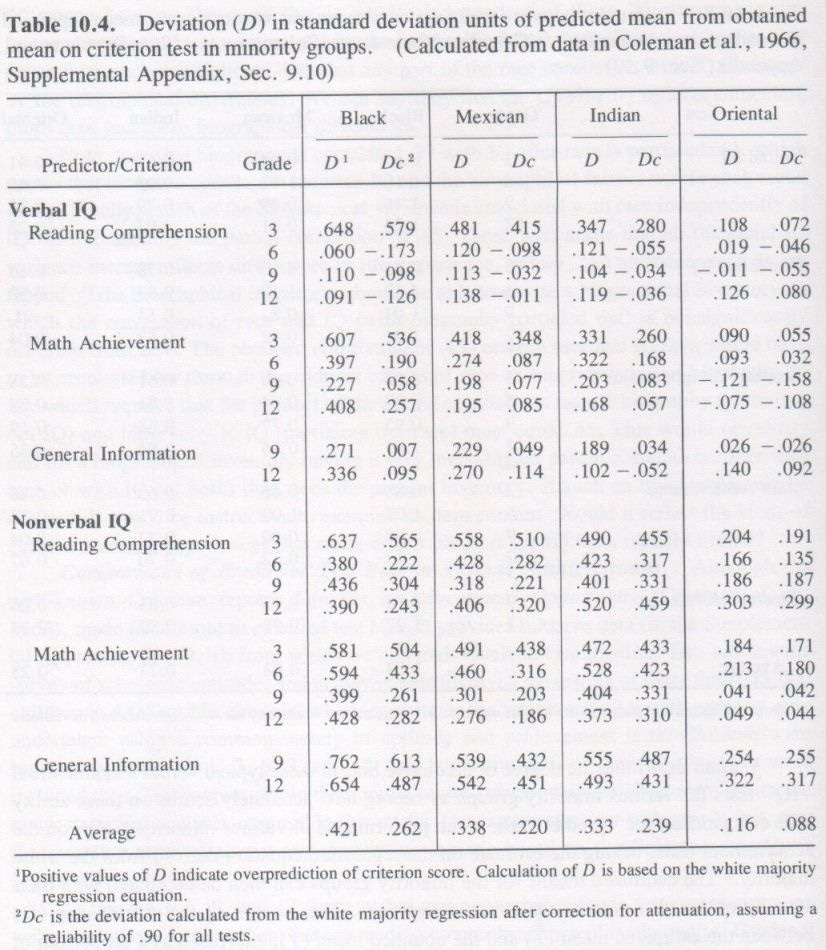

Test Bias in Predicting Scholastic Performance

Predictive Bias in the Armed Forces

Bias in the Test Prediction of Civilian Job Performances

Ch.11 Internal Criteria of Test Bias: Empirical Evidence

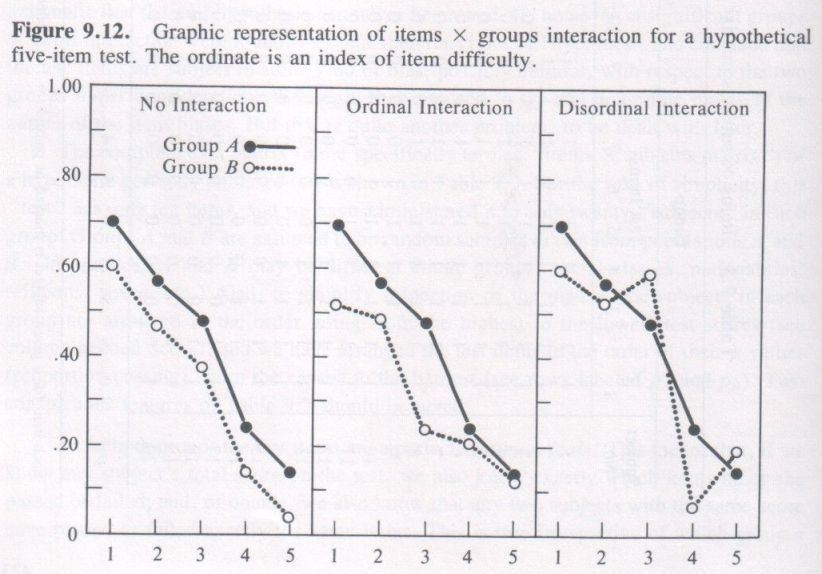

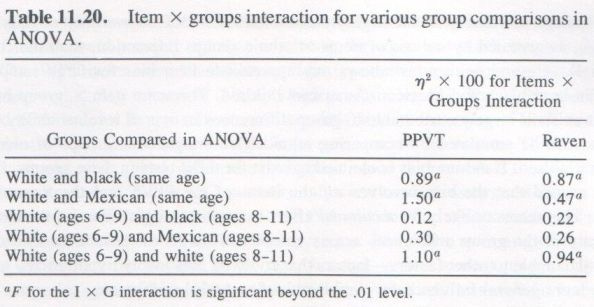

Item x Group Interaction

Ch.12 External Sources of Bias

Chapter 6 Do IQ Tests Really Measure Intelligence?

Causes of Correlation

Textbooks constantly remind us that correlation does not necessarily imply causation. Two variables with no direct causal connection between them may be highly correlated as a result of their both being correlated (causally or not) with a third variable. Even if rxy = 0, we cannot be sure there is no causal connection between x and y. A causal correlation between x and y could be statistically suppressed or obscured because of a negative correlation of x with a third variable that is positively correlated with y. A variable that, through negative correlation with x (or y) and a positive correlation with y (or x), reduces the correlation rxy is called a suppressor variable.
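The suppressor effect can be made concrete with a small calculation. The sketch below uses the standard first-order partial correlation formula, which is not given in the text and is brought in here only for illustration; the three correlations are hypothetical values chosen to show the pattern.

```python
import math

def partial_corr(r_xy, r_xz, r_yz):
    """First-order partial correlation of x and y with z held constant."""
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz**2) * (1 - r_yz**2))

# Hypothetical values: x and y are uncorrelated overall, but z correlates
# negatively with x and positively with y (a suppressor pattern).
r_xy, r_xz, r_yz = 0.0, -0.5, 0.5

r_xy_given_z = partial_corr(r_xy, r_xz, r_yz)
print(round(r_xy_given_z, 3))  # 0.333
```

With z held constant, the zero correlation between x and y becomes clearly positive, which is the sense in which z had been suppressing it.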

To establish causality, other information than correlation is needed. Temporal order of the correlated variables increases the likelihood of causality, that is, if variable x precedes variable y in time, it is more likely that x causes y. But even correlation plus temporal order of the variables is insufficient as a proof of causality. To prove causality we must resort to a true experiment, which means that the experimenter (rather than natural circumstances) must randomly vary x and observe the correlated effect on y. If random experimental manipulation (i.e., experimenter-controlled variation) of variable x is followed by correlated changes in y, we can say that variation in x is a cause of variation in y. This is why experimental methods are so much more powerful than correlation alone. Unfortunately, much of the raw material found in nature that we wish to subject to scientific study cannot be experimentally manipulated - to do so may be practically unfeasible or it may be morally objectionable. It is largely for these reasons that experimental plant and animal genetics have been able to make much greater scientific strides than human genetics.

Although it is a commonplace truism that “correlation does not prove causation,” one seldom sees any discussion of the causes of correlation between psychological variables. [...]

1. Common Sensory-Motor Skill. Variables x and y may be correlated because they involve the same sensory-motor capacities. This is a practically negligible cause of correlation among most tests of mental ability. That is, very little, if any, of the test variance in the normative population is attributable to individual differences in visual or auditory acuity or to motor coordination, physical strength, or agility. Persons with severe sensory or motor handicaps must, of course, be tested for mental ability on specially made or carefully selected tests on which performance does not depend on the particular sensory or motor function that is disabled.

2. Part-Whole Relationship. Variables x and y may be correlated because the skills involved in x are a subset of the skills required in y. For example, x is a test of shifting automobile gears smoothly and y is a driving test; or x is a test of reading comprehension and y is a verbal test of arithmetic problem solving. Transfer of skill from one situation to another, due to common elements, also comes under this heading. Playing the clarinet and playing the saxophone are more highly correlated because of common elements of skill than the correlation between playing the clarinet and playing the violin, which involves fewer elements in common.

3. Functional Relationship. Variables x and y may be functionally related in the sense that one skill is a prerequisite for the other. For example, a performance on a digit-span test of short-term memory may correlate with performance on an auditory test of arithmetic problem solving, because the subject must be able to retain the essential elements of a problem in his memory long enough to solve it. Memory may not be intrinsic to arithmetic ability per se (i.e., it is not a part-whole relationship), as might be shown by a much lower correlation between auditory digit-span and arithmetic problems presented visually so that the person does not have to be able to remember all the elements of the problem while solving it.

4. Environmental Correlation. There may be no part-whole or functional relationship whatever between x and y, and yet there may be a substantial correlation between them because the causes of x and y are correlated in the environment, whether x and y be specific skills or items of knowledge. For example, there is no functional or part-whole connection between knowledge of hockey and knowledge of boxing, yet it is more likely that persons who know something about hockey will also know something more about boxing than they will about say, the opera. And it is more likely that the person who knows something about symphonies will also know something about operas. In all such cases, correlated knowledge is a result of correlated environmental experiences. The same thing applies to skills; we would expect to find a positive correlation between facility in using a hammer and in using a saw, because hammers and saws are more correlated in the environment than are, say, hammers and violins. Different environments and different walks of life can make for quite different correlations among various items of knowledge and specific skills. On the other hand, a common language, highly similar public schools, movies, radio, television or other mass media, and mass production of practically all consumer goods and necessities all create a great deal of common experience for the vast bulk of the population.

5. Genetic Correlation. Variables x and y may be correlated because of common or correlated genetic determinants. There are three kinds of genetic correlation that are empirically distinguishable by the methods of quantitative genetics: correlated genes, pleiotropy, and genetic linkage.

Correlated genes. Through selection and assortative mating, segregating genes that are involved in two (or more) different traits may become correlated in the offspring of mated pairs of individuals both of whom carry the genes of one or the other of the traits. For example, there may be no correlation at all between height and number of fingerprint ridges. Each is determined by different genes. But, if, say, tall men mated only with women having a large number of fingerprint ridges, and short men only with women having few ridges, in the next generation there would be a positive genetic correlation between height and fingerprint ridges. Tall men and women would tend to have many ridges and short persons would have few. Breeding could just as well have created a negative correlation or could wipe out a genetic correlation that already exists in the population. A genetic correlation may also coincide with a functional correlation, but it need not. Selective breeding in experimental animal genetics can breed in or breed out correlations among certain traits. In the course of evolution, natural selection has undoubtedly bred in genetic correlations among certain characteristics. Populations with different past selection pressures and different factors affecting assortative mating, and consequently different evolutionary histories, might be expected to show somewhat different intercorrelations among various characteristics, behavioral as well as physical.

Pleiotropy is the phenomenon of a single gene having two or more distinctive phenotypic effects. For example, there is a single recessive gene that causes one form of severe mental retardation (phenylketonuria); this gene also causes light pigmentation of hair and skin, so that the afflicted children are usually more fair complexioned than the other members of the family. Thus, there is a pleiotropic correlation between IQ and complexion within these families.

Genetic linkage causes correlation between traits because the genes for the two traits are located on the same chromosome. (Humans have twenty-three pairs of chromosomes, each one carrying thousands of genes.) The closer together that the genes are located on the same chromosome, the more likely are the chances of their being linked and being passed on together from generation to generation. Simple genetic correlation due to selection can be distinguished from correlation due to linkage by the fact that two traits that are correlated in the population but are not correlated within families are not due to linkage. Linkage shows up as a correlation between traits within families. (In this respect it is like pleiotropy.)

Influences on Obtained Correlations

It is also important to understand that obtained correlations in any particular situation are not Platonic essences. They are affected by a number of things. Suppose that we are considering the correlation between two variables, x and y. We give tests X and Y to a group of persons and compute rxy. Now we have to think of several things that determine this particular value of rxy:

1. First, there is the correlation between X and Y in the whole population from which our group is just a sample. The correlation in the population is designated by the Greek letter rho, ρxy. Obviously the larger our sample, the closer rxy is likely to come to ρxy. Any discrepancy between rxy and ρxy is called sampling error and is measured by the standard error of the correlation, SEr (not to be confused with the standard error of estimate). SEr = (1 - r²)/√(N - 1), where N is the number of persons (or pairs of correlated measurements) in the sample. (When ρ is zero, SEr = 1/√(N - 1).) The sample size does not affect the magnitude of the correlation, but only its accuracy, and SEr is a measure of the degree of accuracy with which the correlation coefficient r obtained from a sample estimates the correlation ρ in the population. So we should always think of any obtained correlation as r ± SEr; that is, r is a region, a probabilistic estimate that tells us that r is most likely in the region of +1 SEr to -1 SEr from the population correlation ρ. The expression r = .55 ± .03, for example, means that .55 most likely (i.e., more than two chances out of three) falls within the range of plus or minus .03 of ρ; or, to put it another way, that ρ most probably lies somewhere between .52 and .58. The larger the sample, the smaller is SEr and the more accurate is r as an estimate of ρ. We are usually more interested in r as an estimate of ρ than in the sample r for its own sake.

2. The so-called range of talent in one or both variables also affects the correlation. This is an important factor to consider in making inferences from the sample r to the population ρ, because generally the range of talent in the samples used in most research studies is considerably more restricted than the range of talent in the general population. Restriction of range in either variable (or both) lowers the correlation. For example, the correlation between height and weight in the general population is between 0.6 and 0.7. But, if we determine the correlation between height and weight among a team of professional basketball players, the correlation will drop to between 0.1 and 0.2. The full variation in height and weight found in the general population is not found in the basketball team, all of whom are tall and lean. Figure 6.7 illustrates the effect of restriction of range on the correlation scatter diagram. The moral is that in viewing any correlation, and particularly discrepancies between correlations of the same two variables obtained in different samples, we should consider the range (or variance) of the variables in the particular group in which the correlation was obtained. For example, correlations among ability tests are usually much lower in a college sample than in a high school sample, because the college population has a much more restricted range of intellectual ability - practically the entire lower half of the general population is excluded. Thus the more selective the college, the less will students’ scores on the entrance exam (or other tests of mental ability) correlate with the students’ grade-point averages.

3. The reliability of the tests or measurements affects the correlation. The upper theoretical limit of the correlation between any two measures, say, X and Y, is the square root of the product of their reliabilities, i.e., the maximum possible rxy = √(rxx·ryy), where rxx and ryy are the reliability coefficients of X and Y, respectively. The test’s reliability can be thought of as the test’s correlation with itself. [7] If we wish to know what our obtained correlation rxy would be if our measures were perfectly reliable, we can make a correction for attenuation. The corrected correlation, rc = rxy/√(rxx·ryy). [...]
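The three influences listed above can be sketched numerically. This is a minimal illustration, not part of the original text. The sample size of 542 is a hypothetical value chosen so that the standard error comes out near .03. The simulated population correlation of 0.65, the selection cutoff of 1.5 standard deviations, and the reliabilities of .80 and .90 are likewise assumptions made only for the example.

```python
import math
import random

def se_r(r, n):
    """Standard error of a sample correlation: (1 - r^2) / sqrt(N - 1)."""
    return (1 - r**2) / math.sqrt(n - 1)

def pearson_r(xs, ys):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

def correct_for_attenuation(r_xy, r_xx, r_yy):
    """Correlation expected if both measures were perfectly reliable."""
    return r_xy / math.sqrt(r_xx * r_yy)

# 1. Sampling error: r = .55 with a hypothetical N of 542.
se = se_r(0.55, 542)
print(round(se, 3), round(0.55 - se, 2), round(0.55 + se, 2))  # 0.03 0.52 0.58

# 2. Restriction of range: simulate a population with correlation 0.65
#    (hypothetical), then keep only cases more than 1.5 SD above the mean on x.
rng = random.Random(0)
rho = 0.65
pairs = []
for _ in range(20000):
    x = rng.gauss(0, 1)
    y = rho * x + math.sqrt(1 - rho**2) * rng.gauss(0, 1)
    pairs.append((x, y))
r_full = pearson_r(*zip(*pairs))
selected = [(x, y) for x, y in pairs if x > 1.5]
r_restricted = pearson_r(*zip(*selected))
print(r_restricted < r_full)  # True

# 3. Attenuation: hypothetical reliabilities of .80 and .90.
ceiling = math.sqrt(0.80 * 0.90)          # maximum possible r_xy
r_c = correct_for_attenuation(0.50, 0.80, 0.90)
print(round(ceiling, 3), round(r_c, 3))   # 0.849 0.589
```

The selected group in step 2 plays the role of the basketball team in the text: the relationship between x and y is unchanged, but the narrowed range of x lowers the obtained correlation.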

A Realistic Example of Factor Analysis

Table 6.5 shows correlations among ten physical and athletic tests. The labels of the tests are all quite self-explanatory, except possibly for variables 7 and 8. In no. 7 the person is required to trace a drawing of a five-pointed star while observing his own performance in a mirror. In no. 8 the person must try to keep a stylus on a small metal disc, called the “target,” about the size of a nickel, while it rotates on a larger hard rubber disc like a phonograph turntable at about one revolution per second. Electrical contact of the stylus with the small metal disc operates a timing device that records the number of seconds per minute that the stylus is in contact with the target.

Inspection of the correlation matrix in Table 6.5 shows that it is not random - there are too many high correlations, all positive, and the large correlations can be seen to be grouped or clustered in different parts of the matrix. The fact that all the r’s are positive indicates that there is a substantial general factor in this matrix, and the clustering of high correlations suggests that there are probably also one or more group factors in addition to the general factor.

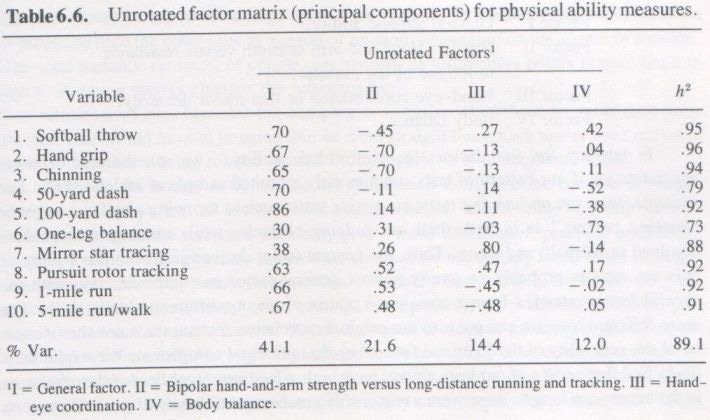

Table 6.6 shows the first four principal components extracted from the correlation matrix in Table 6.5. Only four components were extracted. Together they account for 89.1 percent of the total variance in the ten variables. The remaining six components, if extracted, would account for only 10.9 percent of the total variance, averaging about 1.8 percent of the variance for each component. Thus none of the six remaining components is retained, as each one accounts for so little as not to be needed to recreate the original correlation matrix, which can be recreated, within the margin of sampling error, using only the first four components. Thus, in a sense, we have reduced ten intercorrelated variables to only four independent factors. The communality, h², indicates the proportion of variance in each variable that is accounted for by the four components. All the communalities are quite large, the smallest being .73. [...]
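The bookkeeping behind communalities and percentages of variance can be sketched as follows. Table 6.6 is not reproduced in the text here, so the loading matrix below is hypothetical (four variables, two factors) and serves only to show how the quantities are computed from loadings.

```python
# Hypothetical unrotated loadings: one row per variable, one column per factor.
loadings = [
    [0.8, 0.3],
    [0.7, 0.4],
    [0.6, -0.5],
    [0.5, -0.6],
]

def communalities(matrix):
    """h^2 for each variable: the sum of its squared loadings across factors."""
    return [sum(l * l for l in row) for row in matrix]

def variance_shares(matrix):
    """Proportion of total variance per factor: sum of squared loadings / n variables."""
    n_vars = len(matrix)
    n_factors = len(matrix[0])
    return [sum(row[j] ** 2 for row in matrix) / n_vars for j in range(n_factors)]

h2 = communalities(loadings)
shares = variance_shares(loadings)
print([round(v, 2) for v in h2])      # [0.73, 0.65, 0.61, 0.61]
print([round(v, 3) for v in shares])  # [0.435, 0.215]

# The arithmetic quoted in the text for the six components not retained:
residual_per_component = (100 - 89.1) / 6
print(round(residual_per_component, 1))  # 1.8
```

The shares sum to the mean communality (0.65 in this toy matrix), which is the same relationship that links the 89.1 percent figure to the h² column in the text.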

The first principal component, I, is the general factor, and the main question is, how large is this general factor, that is, how much of the variance does it account for? In this case it accounts for 41.1 percent of the total variance or 46 percent of the total communality. Thus it is a quite large general factor, almost twice as large as the next largest factor, which accounts for only 21.6 percent of the variance. Only two of the tests have relatively small loadings on the general factor - no. 6 (one-leg balance) and no. 7 (mirror star tracing). The best single test of the g factor in this battery is the 100-yard dash, with a g loading of .86. The remaining tests are all pretty much alike in their g loadings.

The second principal component, II, we see, has some large negative as well as large positive loadings; it is therefore called a bipolar factor. What this means is that, when persons are equated on g, those who score high on the tests at one end of the bipolar factor will score low on the tests at the other end. The bipolar factor thus can be interpreted as two factors that are negatively correlated with each other. The high negative loadings on II are handgrip (-.70) and chinning (-.70), followed by a softball throw (-.45). This pole of factor II obviously involves hand-and-arm strength - it might be labeled “upper-limb strength.” The positive pole is less distinct, with largest loadings on pursuit rotor, 1-mile run, and 5-mile run/walk. This is hard to decipher or to label, as these three tests appear so dissimilar. It is hard to imagine why they go together and we can only speculate at this point. The best speculation is that all involve resistance to fatigue of the leg muscles. The short-distance running tests have negligible loadings on this factor. Pursuit rotor tracking is performed standing up, and persons commonly report feeling some fatigue of their leg muscles after working 10 minutes or so at the pursuit rotor. We could experimentally test this hypothesis by giving the pursuit rotor to persons sitting down. Under this condition pursuit rotor performance should have a negligible loading on factor II, if our hypothesis is correct that the positive pole of factor II represents resistance to leg fatigue. This is how factor analysis can suggest experimentally testable hypotheses about the nature of abilities.

Factor III has its largest positive loadings on mirror star tracing (+.80) and pursuit tracking (+.47). It might be labeled “hand-eye coordination,” as that is what these two tests seem to have in common. The long-distance running tests are negatively loaded on this factor, and the other tests have practically negligible loadings.

Factor IV has its largest loading on one-leg balance (+.73) and is also positively loaded on softball throw (+.42), which suggests that it is a body balance factor. It is not a very important factor for most of the tests (accounting for only 12 percent of the total variance).

In any one such analysis our labeling of the factors must always be regarded as speculative and tentative. By repeating such analyses on various groups of subjects, and by including other tests that we hypothesize might be good measures of one factor or another, we can gradually clarify and confirm the nature of the basic factors underlying a large variety of athletic skills. In this particular analysis, we might tentatively summarize the factors as follows:

Factor I General athletic ability.

Factor II Bipolar: Hand-and-arm strength versus resistance to fatigue of leg muscles.

Factor III Hand-eye coordination or fine-motor dexterity.

Factor IV Body balance.

In labeling the first factor “general athletic ability,” we run the risk of overgeneralization if our battery of tests contains only a limited sample of athletic skills. For example, there are no jumping tests; no aiming tests, such as throwing a ball at a target or “making baskets” as in basketball; no dodging obstacles while running, as would be involved in football; and so on. Thus, the general factor derived just from this battery of only ten tests is probably an overly narrow general factor as compared, say, with the general factor extracted from a correlation matrix of twenty different athletic skills. The more different tests we can put into our original correlation matrix, the more sure we can be of the generality of the “general factor” or first principal component. We would most likely find that certain of our tests always have high g loadings regardless of the other tests in the battery, so long as there were a reasonable number and diversity of tests. These tests of more or less consistently high g loadings would therefore be regarded as good indices of g. The best measure of g, of course, would be factor scores based on the g loadings of a large and diverse battery of tests. Essentially, these factor scores are a weighted average of the standardized scores on each of the tests, the weights being proportional to each test’s loading on the general factor. (Factor scores on the other factors are obtained by a different algorithm.) Thus, in terms of our example in Table 6.6, a person who is exactly one standard deviation above the mean on each of the ten tests would have a factor score on the general factor of 0.62 (i.e., the average of the products of the factor loading on each test times the person’s test score in standard deviation units). The unweighted average of the test scores provides only a rough approximation to the general factor, “contaminated” by other factors to the extent that the various tests are not loaded on the general factor.
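The factor-score rule described above (the average of each test's g loading times the person's standardized score) can be written out directly. The loadings below are hypothetical, since Table 6.6 is not reproduced here; with the actual table, the all-ones profile would give the 0.62 quoted in the text.

```python
def general_factor_score(g_loadings, z_scores):
    """Average of loading * z-score products, as described in the text."""
    products = [l * z for l, z in zip(g_loadings, z_scores)]
    return sum(products) / len(products)

# Hypothetical g loadings for a four-test battery.
g_loadings = [0.7, 0.6, 0.8, 0.5]

# A person exactly one standard deviation above the mean on every test
# gets a score equal to the mean g loading.
uniform_profile = [1.0, 1.0, 1.0, 1.0]
score_uniform = general_factor_score(g_loadings, uniform_profile)
print(round(score_uniform, 2))  # 0.65

# An uneven profile: the weighted score differs from the unweighted mean z.
uneven_profile = [2.0, 0.0, 1.0, -1.0]
score_uneven = general_factor_score(g_loadings, uneven_profile)
unweighted = sum(uneven_profile) / len(uneven_profile)
print(round(score_uneven, 3), unweighted)  # 0.425 0.5
```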

Because our four factors account for most of the variance in all ten tests, we could more efficiently describe the abilities of each person in terms of four factor scores instead of ten test scores. Even if we added ten more tests, we may still have only five or six (or even four) factor scores. It becomes more and more difficult to add further tests that involve any significant proportion of variance not already accounted for by the several factors involved in all the other tests. Thus factor scores can be a much more efficient means of describing abilities than test scores.

Rotation of Factors. The reader will have noticed that Table 6.6 is labeled “unrotated” factor matrix. This means that the principal components are given just as they emerge from the mathematical analysis, each accounting for the largest possible linear component of variance that is independent of the variance accounted for by all of the preceding components.

Looking back to Figure 6.8 we see two principal components, I and II. We can rotate these axes on their point of intersection, while keeping everything else in place. When rotated into any other position than that shown in Figure 6.8, they are no longer principal components, but rotated factors. The first factor after rotation is no longer a general factor in the sense that it accounts for the maximum amount of variance in all of the tests. Some part, perhaps a large part, of the variance on the first principal component is projected onto the other axes as a result of rotation, depending on the degree of rotation. The total variance remains, of course, unchanged, as all the data points remain fixed in space. Rotation merely changes the reference axes.

Rotation of axes becomes too complex to visualize when there are more than three factors. One would have to imagine four or more straight lines, each one at right angles to each of the others, being rotated around a single point in n-dimensional space!

Why do we bother to rotate the axes? Rotation is often done because it usually clarifies and simplifies the identification, interpretation, and naming of group factors. Other positions of the reference axes may give a more meaningful, practical or intuitive picture. Rotation will not create any new factors that are not already latent in the principal components, but it may permit them to stand out more clearly. It does so, however, at the expense of the general factor (first principal component), the variance of which gets distributed over the rotated factors. Rotation is quite analogous to taking a picture of the same object from a different angle. For example, we may go up in a helicopter and take an aerial photograph of the Grand Canyon, and we can also take a shot from the floor of the canyon, looking through it lengthwise, or from any other angle. There is no one “really correct” view of the Grand Canyon. Each shot better highlights some aspects more than others, and we gain a better impression of the Grand Canyon from several viewpoints than from just any single one. Yet certain views will give a more informative overall picture than others, depending on the particular viewer’s interest. But no matter what the angle from which you photograph the Grand Canyon, you cannot make it look like the rolling hills of Devonshire, or Victoria Falls, or the Himalayas. Changing the angle of viewing does not create something that is not already there; it may merely expose it more clearly, although at the expense of perhaps obscuring some other feature.

In the early days of the development of factor analysis, theorists had heated arguments over whether factors should be rotated, and, if so, just how they should be rotated. Nowadays, there is little if any real argument over this issue. Deciding whether unrotated factors or various rotations are more or less meaningful than others must be based on criteria outside factor analysis itself. The main justification for rotation is to obtain as clear-cut a picture as possible of the latent factors in the matrix. To achieve this, one should look at both the unrotated and rotated factors.

But into what position should the factors be rotated? Again, there is no sacrosanct rule. The main idea is to rotate the axes into whatever position gives the clearest picture of the factorial structure of all the tests. But obviously we need some notion of what we mean by the “clearest picture.”

Thurstone (1947) proposed a criterion for factor rotation that he named simple structure. He believed that simple structure reveals the psychologically most meaningful picture of the factorial structure of any set of psychological tests. Thurstone’s idea of simple structure has become the most common basis for rotation, the aim being to approximate as closely as possible, for any given matrix, the criterion of simple structure. Simple structure is approximated to the extent that the factors can be simultaneously rotated so as to (1) have as many zero (or nearly zero) loadings on each factor as possible and (2) concentrate as much of the total variance in each test on as few factors as possible. Table 6.7 shows an idealized factor matrix with perfect simple structure. You can see that the interpretation of the factors in terms of the tests they load on is greatly simplified, as is the interpretation of the tests in terms of the factors they measure. Each test represents a single factor. Such tests would be called factor-pure tests because they measure only one factor, uncontaminated by any others. It was Thurstone’s dream to devise such factor-pure tests to measure the seven “Primary Mental Abilities” represented by the seven factors that he succeeded in extracting reliably from multitudes of highly diverse cognitive tests.

The general factor worked against this dream. It pervaded all the tests and thereby made it impossible to do more than approach simple structure. The tests always had substantial loadings on more than one factor, because rotation spreads the general factor over the several rotated factors. Simple structure can therefore be more or less approximated, but it cannot be fully achieved as long as there is a substantial general factor.

To get around this problem, Thurstone adopted the method of oblique factor rotation. When all the rotated axes are kept at right angles to one another, regardless of the final position to which they are all simultaneously rotated, the rotation is termed orthogonal. When simple structure cannot be closely approximated by means of orthogonal rotation (which will always be the case when there is a large general factor), one can come closer to simple structure by letting the factor axes assume oblique angles in relation to one another, rather than maintain all the axes at right angles. The axes and angles, then, are allowed to move around in any way that will most closely approximate simple structure. But recall the fact that, when the angles between axes are different from 90°, that is, when they are oblique angles, the factors are no longer uncorrelated. Oblique rotation makes simple structure possible by making for correlations between the factors themselves. In other words, one gets rid of the general factor in each of the rotated primary factors by converting this general factor variance into covariance (i.e., correlation) among the factors themselves.

Thus the correlations among oblique factors can themselves be subjected to factor analysis, yielding second-order factors, which are of course fewer in number than the primary factors. Usually with cognitive tests only one significant second-order factor emerges - the general factor. If there are two or more second-order factors, they too can be obliquely rotated and their intercorrelations factor analyzed to yield third-order factors. At some point in this process there will be just one significant factor - the general factor - at the top of the hierarchical factor structure, as pictured in Figure 6.9. The general factor will show up as the first unrotated factor, or as the highest factor in a hierarchical analysis of rotated oblique factors, as shown in Figure 6.9. One can arrive at essentially the same g factor from either direction. It is seldom a question of whether there is or is not a g factor, but of how large it is in terms of the proportion of the total variance it accounts for.
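As a rough illustration of how a second-order general factor can be recovered from correlated primary factors, consider the simplest case of exactly three oblique factors. If a single general factor accounts for their intercorrelations, each correlation is the product of two loadings, and the loadings follow from the three correlations alone. This triad solution is an assumption introduced for the sketch, not a method described in the text, and the factor correlations are hypothetical.

```python
import math

def one_factor_loadings(r12, r13, r23):
    """Loadings of three variables on a single common factor.

    Assumes r_ij = l_i * l_j for every pair, so that l_1^2 = r12 * r13 / r23,
    and similarly for the other two.
    """
    l1 = math.sqrt(r12 * r13 / r23)
    l2 = math.sqrt(r12 * r23 / r13)
    l3 = math.sqrt(r13 * r23 / r12)
    return l1, l2, l3

# Hypothetical correlations among three oblique primary factors.
r12, r13, r23 = 0.42, 0.48, 0.56

g = one_factor_loadings(r12, r13, r23)
print([round(v, 2) for v in g])  # [0.6, 0.7, 0.8]
```

With more than three primary factors the single-factor model is no longer exactly determined, and the factor correlations would be factor analyzed in the usual way.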

Now let us see what orthogonal rotation to approximate simple structure does to our matrix of physical variables. Table 6.8 shows the rotated factors for the physical ability measures. An objective mathematical criterion of simple structure was used, called varimax, because it rotates the factors until the variance of the squared loadings on each factor is maximized (Kaiser, 1958). Obviously the variance of the squared loadings on any given factor will be maximized when the factor loadings approach either 1 or 0. The method (now usually done by computer) rotates all the factors until a position is found that simultaneously maximizes all the variances of the squared loadings on each factor, that is, produces as many very large and very small loadings as the data will allow.
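The idea behind the varimax criterion can be sketched for the two-factor case. The code below is not Kaiser's algorithm: it simply tries every whole-degree rotation of a hypothetical two-factor loading matrix and keeps the angle at which the summed variance of the squared loadings is largest. The loadings, the function names, and the grid search are all assumptions made for illustration.

```python
import math

def rotate(matrix, degrees):
    """Orthogonally rotate a two-factor loading matrix by the given angle."""
    t = math.radians(degrees)
    c, s = math.cos(t), math.sin(t)
    return [[a * c + b * s, -a * s + b * c] for a, b in matrix]

def varimax_criterion(matrix):
    """Sum over factors of the variance of the squared loadings (raw form)."""
    n = len(matrix)
    total = 0.0
    for j in range(len(matrix[0])):
        squared = [row[j] ** 2 for row in matrix]
        mean = sum(squared) / n
        total += sum((v - mean) ** 2 for v in squared) / n
    return total

# Hypothetical unrotated loadings (general factor plus a bipolar factor).
unrotated = [
    [0.8, 0.3],
    [0.7, 0.4],
    [0.6, -0.5],
    [0.5, -0.6],
]

# The criterion repeats every 90 degrees, so 0..89 covers all positions.
best_angle = max(range(90), key=lambda d: varimax_criterion(rotate(unrotated, d)))
rotated = rotate(unrotated, best_angle)

before = varimax_criterion(unrotated)
after = varimax_criterion(rotated)
print(after > before)  # True
for row in rotated:
    print([round(v, 2) for v in row])
```

In the rotated matrix each variable should load mainly on one factor, which is the pattern of very large and very small loadings that the criterion rewards.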

We could obtain an even closer and more clean-cut approximation to simple structure had we allowed oblique factors in our rotation. But obliqueness also introduces greater sampling error, and we therefore have less confidence in the stability of our results than if we maintained orthogonality.

The rotated factors in Table 6.8 are quite clear. The general factor has been submerged in the rotated factors. Notice that the communalities, h², remain unchanged and that the four factors account for 89.1 percent of the variance but that each factor now accounts for a more equal share of the total variance than was the case with the unrotated factors. The general factor, which had carried so much of the variance (41.1 percent), is now spread out and submerged within the four “simple structure” factors. The rotated factors, just like the unrotated principal components, will reproduce, to the same degree of approximation, all the correlations in the original matrix, by applying the same rule that the correlation between any two variables is the sum of the products of their loadings on each of the factors.

Factor I, with very large loadings on the first three tests, is clearly a “hand-and-arm strength” factor. (It is the same factor that we identified as one pole of the bipolar factor II in the unrotated factor matrix; see Table 6.6.)

Factor II, with its largest loadings on variables 9 and 10, and also a moderately large loading on variable 5, is a running or leg strength factor, and suggests resistance to fatigue of leg muscles, as it is most heavily loaded on the most arduous and fatiguing running tasks. In fact, it could even be a general resistance to fatigue or a general endurance factor. (It is essentially the same factor as one pole of bipolar factor II in the unrotated matrix.)

Factor III, with its only large loadings on mirror star tracing and pursuit rotor tracking, is clearly a hand-eye coordination or fine muscle dexterity factor. (It is the same as factor III in the unrotated matrix.)

Factor IV has its only large loading on one-leg balance and is thus a body balance factor, the same as factor IV in the unrotated matrix.

Intelligence and Achievement

In a series of large statistical analyses, too complex to be explicated here in detail, William D. Crano has attempted to determine the direction of causality between intelligence and achievement (Crano et al., 1972; Crano, 1974). The investigation used a technique known as cross-lagged correlation analysis. In brief, intelligence tests and a variety of scholastic achievement tests were given to large samples of school children in Grade 4 and two years later in Grade 6. The key question is, Do the Grade 4 achievement tests predict Grade 6 IQ more or less than Grade 4 IQ tests predict Grade 6 achievement? If the correlation from Grade 4 to 6 is higher in the direction IQ4 → Achievement6 than in the direction Achievement4 → IQ6, it can be reasonably argued that individual differences in IQ have a causal effect on individual differences in achievement. This, in fact, is what was found for the total sample of 5,495 pupils. However, when the total sample was broken down into two groups consisting of pupils in suburban schools and pupils in inner-city schools (in other words, middle- and lower-socioeconomic-status groups), the cross-lagged correlations showed different results for the two groups. The suburban group clearly showed the causal sequence IQ4 → Achievement6 at a high level of statistical significance, whereas the results of the inner-city group were less clear, but suggested, if anything, the opposite causal sequence, that is, Achievement4 → IQ6, at least for verbal IQ. (The Ach4 → Nonverbal IQ6 was significant only for arithmetic achievement.) Also a high-IQ sample (one standard deviation above the mean) showed a much more prominent IQ4 → Ach6 cause-effect correlation than did a low-IQ sample (one standard deviation below the mean). The predominant direction of causality is from the more abstract and g-loaded tests to the more specific and concrete skills. For example, in the total sample and in both social-class groups, Verbal IQ in Grade 4 predicts spelling in Grade 6 significantly higher than spelling in Grade 4 predicts Verbal IQ in Grade 6.
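The core comparison in a cross-lagged design can be sketched as the difference between the two lagged correlations. The scores below are made up for six imaginary pupils and carry no evidential weight; the function names are likewise invented here, and the published analyses involve significance tests and corrections that this sketch omits.

```python
import math

def pearson_r(x, y):
    """Product-moment correlation between two equal-length lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def cross_lagged_difference(iq4, ach4, iq6, ach6):
    """r(IQ4, Ach6) minus r(Ach4, IQ6); positive values favor IQ -> achievement."""
    return pearson_r(iq4, ach6) - pearson_r(ach4, iq6)

# Made-up scores, for illustration only.
iq4 = [90, 95, 100, 105, 110, 120]
ach4 = [48, 55, 50, 52, 60, 58]
iq6 = [92, 97, 99, 104, 112, 118]
ach6 = [45, 50, 53, 56, 59, 66]
diff = cross_lagged_difference(iq4, ach4, iq6, ach6)
```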

Factor analysis has shown that the Verbal IQ of the Lorge-Thorndike Intelligence Test used in Crano’s study measures mainly crystalized intelligence gc, whereas the Nonverbal IQ is mainly fluid ability gf (Jensen, 1973d). Consistent with Cattell’s theory of gf and gc, Crano et al. (1972) found that Grade 4 Nonverbal IQ (gf) predicts Grade 6 Verbal IQ (gc) more highly than Grade 4 Verbal IQ predicts Grade 6 Nonverbal IQ, and this is true in both social-class groups. Thus, gf can be said to cause gc more than the reverse. Crano et al. (1972, p. 272) conclude as follows:

The findings indicate that an abstract-to-concrete causal sequence of cognitive acquisition predominates among suburban school children. The positive and often statistically significant cross-lagged correlation values . . . also indicate that the concrete skills act as causal determinants of abstract skills; their causal effectiveness, however, is not as great as that of the more abstract abilities. Taken together, these results suggest that the more complex abstract abilities depend upon the acquisition of a number of diverse, concrete skills, but these concrete acquisitions, taken independently, do not operate causally to form more abstract, complex abilities. Apparently, the integration of a number of such skills is a necessary precondition to the generation of higher order abstract rules or schema. Such schema, in turn, operate as causal determinants in the acquisition of later concrete skills. (italics added)

Chapter 7 Reliability and Stability of Mental Measurements

Conditions That Influence Test Reliability

Scoring. The scoring of many of the items in individually administered intelligence tests, such as the Stanford-Binet and the Wechsler scales, requires a subjective judgment on the part of the tester as to whether the examinee passed or failed the item. For example, in the vocabulary test the tester has to decide whether the definitions given by the examinee are to be scored right or wrong. (In the Wechsler tests the answer to each vocabulary item is scored 2, 1, or 0, depending on the quality of the examinee’s response.) To the extent that testers do not agree on the scoring of a given response, the reliability of the total score is lowered. To keep the scoring reliability (i.e., agreement among testers) as high as possible, the scoring instructions are made quite explicit in the test manual, with many examples of passing and failing responses to each item. Moreover, the standard for passing any given item is made very lenient, so that a failing response is quite easily agreed on. Doubtful and ambiguous “correct” responses are generally scored as correct, so there will be high agreement among different scorers as to which answers are clearly wrong. (The Stanford-Binet scoring criteria are more lenient in this respect than the Wechsler’s.)

Besides having explicit scoring criteria, individually administered tests should be given and scored only by trained persons. An essential part of such training consists of supervision and criticism of the trainee’s performance in ways that make the procedures of testing and scoring more uniform and standardized and hence more reliable. With such training the agreement among scorers can be made very high, with interscorer correlations in the high .90s. Less than perfect agreement among scorers will be reflected in the test’s reliability coefficient. If the test’s reliability is adequately high for one’s purpose, it follows that the reliability of the scoring itself is satisfactory, as the scoring reliability cannot be less than the test’s internal consistency reliability.

It is commonly believed that, by uniformly relaxing the administration procedures or scoring criteria for all testees, the less able will enjoy an advantage. That is, everyone’s score would rise, but the low scorers would rise relatively more under more lenient conditions. When this has been tried, the brighter testees benefit most in absolute score, but the rank order of subjects is hardly changed. Little and Bailey (1972), for example, gave the WAIS Comprehension and Similarities subtests to college students under conditions that would maximize their performance, by urging the students to give all the correct answers they could think of to each question, without time limit. Scores were obtained by giving credit for all correct answers on each item, as contrasted with the standard WAIS scoring procedure of giving a maximum of two points to each item. The result of the more “generous” procedure was to spread the higher- and lower-scoring students farther apart, while the “generous” and standard scores correlated very highly (r = .93 for Comprehension, .84 for Similarities). This shows that even when the conditions of administration and scoring are altered quite drastically, provided that it is done uniformly for all testees, the rank order of persons’ scores is little changed. There is little statistical interaction of testees and scoring procedures. Thus the scoring criteria themselves, if uniformly applied, are not a potent influence on test reliability.

The same thing is usually true of allowing unlimited time on normally timed tests. The untimed condition will result in higher scores, but the correlation between the timed and untimed scores will be very high. Persons’ scores on a power test maintain much the same rank order for various time limits, provided that the time limit is the same for everyone. A power test is one in which the items are arranged in order of increasing difficulty, and the time limit is such that most testees run out of ability, so to speak, before they run out of time, so that increasing the time limit has little effect on the score. Most tests of intelligence and achievement are power tests. In contrast, speed tests are comprised of many easy items all of which nearly everyone would answer correctly if there were no time limit. Tests of clerical and motor skills are commonly of this type.

Most group-administered tests have completely objective scoring, so there is no question of scoring reliability, barring clerical inaccuracies due to carelessness or to defects in the equipment in the case of machine-scored tests. Such clerical errors are generally rare, and precautions can be taken to reduce their occurrence, such as by having every test scored independently by two persons (or machines) and checking disagreements.

Standardized tests administered and scored by classroom teachers who are untrained and unsupervised in their testing procedures can yield highly unreliable and invalid scores. It is not the rule, fortunately. But we have found numerous deplorable instances in our retesting of teacher-tested classes, on the same tests, by trained testers, under very careful standardized conditions. Some of the teacher-administered test results were found highly discrepant, usually due to incomplete or improper test instructions, lax observance of time limits on timed tests, and a poor testing atmosphere resulting from a disorderly class. Tests administered under such conditions are useless, at best. (This problem is discussed more fully in Chapter 15, pp. 717-718.)

Guessing. Most objective tests are of the multiple-choice type, in which the testee must select the one correct answer from among a number of incorrect alternatives called distractors. The testee who does not know the correct answer to a given item may leave it unanswered or may make a guess, with some chance of picking the correct answer. When there are many difficult items, there is apt to be more guessing. A corollary of this is that persons with lower scores are more likely to guess on more items, as there are fewer items to which they know the answers.

Guessing lowers the reliability of test scores, because items that are gotten right merely by chance cannot represent true scores. “Luck” in test taking is simply a part of the error variance or unreliability of the test. The larger the number of multiple-choice alternatives, the smaller the chances of guessing the correct answers, and, consequently, the less damage to the reliability of the test. True-false items are in this respect the worst, as there is a 50 percent chance of being right by guessing. Recall tests are the best, as no alternatives are given and the testee must produce his own answer. (This is the case in most individual tests of intelligence.) A study by Ruch (cited by Symonds, 1928) illustrates the effect of the number of multiple-choice response alternatives on the reliability of equivalent tests of one hundred items:

| Type of Answer | Reliability Coefficient |
|---|---|
| Recall | .950 |
| 7-alternative multiple choice | .907 |
| 5-alternative multiple choice | .882 |
| 3-alternative multiple choice | .890 |
| 2-alternative multiple choice | .843 |
| True-false | .837 |

Test constructors have devised complex ways of scoring tests, taking account of right, wrong, and unanswered items and the number of multiple choice alternatives, so as to minimize the effects of guessing on the total scores and on their reliability. [4] Most modern standardized tests take account of these factors in their scoring procedures, and their reliabilities can be high despite persons’ tendency to guess when they are unsure of the right answer.
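One classical scheme of this kind is the textbook correction for guessing, which subtracts from the number right the number wrong divided by one less than the number of alternatives. The sketch below assumes that standard formula; it is not necessarily the procedure described in note 4, and the function names are invented for the illustration.

```python
def chance_success(k):
    """Probability of answering a k-alternative item correctly by blind guessing."""
    return 1.0 / k

def corrected_score(right, wrong, k):
    """Classical correction for guessing: R - W / (k - 1).

    Unanswered items are neither rewarded nor penalized.
    """
    return right - wrong / (k - 1)

# A testee who guesses blindly on 100 five-alternative items expects about
# 20 right and 80 wrong, which the correction reduces to a score of zero.
blind_guesser = corrected_score(20, 80, 5)
```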

Range of Ability in the Sample. Reliability is not a characteristic of just the test, but is a joint function of the test and the group of persons to which it is given. A test with high reliability in one group may have much lower reliability in a different group.

The principal condition that causes variations in a test’s reliability from one group to another is the range of test-relevant ability in the group. A test administered to a group that is very homogeneous in the ability measured by the test will have lower reliability in that group than the same test administered to a more heterogeneous group.

Any decrease in the range of obtained scores or any piling up of scores in one part of the scale automatically lowers reliability. Piling up of scores occurs when a test is too difficult or too easy for a given group, or when persons at the upper and lower extremes of ability have been excluded. (The most dependable index of the score dispersion in a group is the standard deviation, because it takes all the scores into account, not just the most extreme values, which define the range.)
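The dependence of reliability on score dispersion can be sketched with a standard psychometric formula that assumes the error variance of the test is the same in both groups, so that only the true-score variance shrinks in the more homogeneous group. The figures are hypothetical and the function name is invented here.

```python
def reliability_in_new_group(r_old, sd_old, sd_new):
    """Reliability expected in a group with standard deviation sd_new,
    assuming the error variance is the same as in the original group."""
    error_variance = sd_old ** 2 * (1.0 - r_old)
    return 1.0 - error_variance / sd_new ** 2

# Hypothetical case: reliability .90 in a group with SD = 15,
# then the same test in a selected group with SD = 7.5.
restricted = reliability_in_new_group(0.90, 15.0, 7.5)  # about .60
```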

Tests have their maximum reliability when the average item difficulty is 50 percent passing. In this case, the frequency distribution of the total scores will be symmetrical about the group mean.
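One way to see why a difficulty of 50 percent is optimal is that the variance of a pass/fail item is p(1 - p), where p is the proportion passing, and this quantity is largest at p = .50. The short check below is offered only as an illustration of that arithmetic, with names invented here.

```python
def item_variance(p):
    """Variance of a pass/fail item passed by proportion p of the group."""
    return p * (1.0 - p)

# Search difficulties from 1 to 99 percent passing for the largest item variance.
difficulties = [k / 100 for k in range(1, 100)]
best = max(difficulties, key=item_variance)
```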

Another way of saying all this is that to have maximum reliability a test must tap the full range of ability in the group. Otherwise the test is said to have a ceiling effect or a floor effect that results in inadequate discriminatory power at the high or low ends of the scale.

Miscellaneous Sources of Unreliability. Of the numerous other factors that can reduce a test’s reliability, the most often recognized are

1. Interdependence of items lowers reliability; that is, the answer to one item is suggested in another, or knowing the answer to one item presupposes knowing the answer to another item. The effect on reliability is like that of reducing the number of items in the test.

2. Dissimilarity in the experiential backgrounds of persons taking the test can lower reliability. Conversely, tests that sample the more common elements of experience are more reliable. Thus tests of knowledge and skills acquired in school are likely to be more reliable than tests of knowledge and skills acquired in the home, other parameters of the tests being equal.

3. For reasons related to factor 2, scholastic achievement tests administered late in the school year tend to have higher reliability than those given at the beginning of the year.

4. “Tricky” questions or “catch” questions lower the reliability of a test.

5. Wording of test items - words that are overemphasized and may mislead, emotionally toned words that distract from the main content, overly long wording of the question, strange and unusual words, poor sentence structure and unusual word order - all these features lower the reliability.

6. Inadequate or faulty directions or failure to provide suitable illustrations of the task requirements can lower reliability. Giving several easy practice items at the beginning of the test can increase reliability.

7. Accidental factors such as breaking a pencil or interruptions and distractions lower reliability, especially in timed tests.

8. Subject variables such as lack of effort, carelessness, anxiety, excitement, illness, fatigue, and the like may adversely influence reliability.
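The remark in item 1, that interdependence acts like a reduction in the number of items, can be made concrete with the Spearman-Brown formula, which gives the reliability expected when a test is lengthened or shortened by a factor k with comparable items. The reliabilities used are hypothetical and the function name is invented here.

```python
def spearman_brown(r, k):
    """Reliability of a test whose length is multiplied by k,
    given reliability r at the original length."""
    return k * r / (1.0 + (k - 1.0) * r)

# Hypothetical test with reliability .90: the effect of losing half its items.
halved = spearman_brown(0.90, 0.5)   # about .82
doubled = spearman_brown(0.50, 2.0)  # about .67
```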

Regression toward Which Mean? ... The net effect of using such estimated true scores, besides increasing the accuracy of measurement, is to reduce the higher scores of persons belonging to low-scoring subgroups and boost the lower scores of persons belonging to high-scoring subgroups. Such an outcome may seem unfair from the standpoint of members of the lower-scoring subgroups, but it is merely the statistically inevitable effect of increasing the accuracy of measurement. When higher scores are preferred in the selection procedure, the “luck” factor resulting from unreliability statistically favors persons belonging to lower-scoring groups. The “luck” factor is minimized by using estimated true scores instead of obtained scores.
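The effect described here can be sketched with the usual regression estimate of a true score, in which the obtained score is pulled toward the mean of the person's own group in proportion to the test's unreliability. The sketch assumes that standard estimate; the reliability and the two group means are hypothetical, and the function name is invented here.

```python
def estimated_true_score(obtained, group_mean, reliability):
    """Regression estimate: group mean plus reliability times the deviation
    of the obtained score from that mean."""
    return group_mean + reliability * (obtained - group_mean)

# The same obtained score of 110 on a test with reliability .90, regressed
# toward two hypothetical subgroup means.
from_higher_group = estimated_true_score(110, 100, 0.90)  # about 109
from_lower_group = estimated_true_score(110, 85, 0.90)    # about 107.5
```

In this example the identical obtained score yields a lower estimate for the member of the lower-scoring group, which is the outcome the passage describes.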

Causes of Score Instability

Measurement Error Per Se. These are the same factors that lower the reliability, mentioned earlier, and can show up as score instability with test-retest intervals of less than a week. They involve scoring errors, variability in the testing situation itself, and short-term fluctuations in the testee’s attentiveness, willingness, emotional state, health, and the like. All these influences on stability are quite minor contributors to the long-term test-retest instability of test scores, however, as indicated by the very high stability coefficients for short test-retest intervals, which scarcely differ from the reliability coefficients based on a single administration of the test.

Practice Effects. Gaining familiarity with taking tests results in higher scores, usually of some 3 to 6 IQ points - more if the same test is repeated, less if a parallel form is used, and still less if the subsequent test is altogether different. Practice effects are most pronounced in younger children and persons who have had no previous experience with tests. In a minority of such cases retest scores show dramatic improvements equivalent to 10 or more IQ points. The reliability and stability of scores can be substantially improved by giving one or two practice tests prior to the actual test on which the scores are to be used. The effects of practice in test taking rapidly diminish with successive tests and are typically of negligible consequence for most school children beyond the third grade unless they have had no previous exposure to standardized tests.

Because nearly all persons show similar effects of practice on tests, practice has little effect on the ranking of subjects’ scores except for those persons whose experience with tests is much less or much greater than for the majority of the persons who were tested. [...]

Individual Differences in Rate of Maturation. Even when all of the other causes of score instability are accounted for, some fluctuation in scores still remains, however, becoming less and less as children approach maturity. These fluctuations are due to intrinsic individual differences in rate of development. They are apparent in physical as well as in mental growth. Growth of any kind does not proceed at a constant rate for all individuals; there are spurts and lags at different periods in each person’s development. These, of course, contribute to lower stability coefficients of scores over longer intervals. Figure 7.3 shows individual mental growth curves from 1 month to 25 years for five boys. One clearly sees both stability and instability of the mental growth rates in these graphs.

Spurts and lags in the rate of mental development are conditioned in part by genetic factors, as indicated by the fact that the pattern of spurts and lags in mental development scores, at least in the first two years, coincides more closely for identical than for fraternal twins (Wilson, 1972). On the other hand, the constant aspect of mental growth rates appears to be much more genetically determined than the pattern of lags and spurts, which evidently reflects changing environmental influences to a considerable extent (McCall, 1970).

Changes in Factor Composition. The very same test items cannot be used over very long test-retest intervals during childhood. Items that discriminate at ages 2 to 4 are much too easy and therefore nondiscriminatory at ages 6 to 8. Consequently, the item composition of tests must necessarily change from year to year over the interval from infancy to adolescence if the tests are to be psychometrically suitable at every age. Changing the items in tests to make them appropriate, reliable, and discriminating for each age may introduce changes in the factor composition of the test, so that the test does not actually measure exactly the same admixture of abilities at every age level. To the extent that the factor composition of the test changes at different age levels, the age-to-age correlations are reduced. Infant tests consisting of items that are appropriate below 2 years of age, for example, measure almost entirely perceptual-motor abilities, attention, alertness, muscular coordination, and the like. There are a few simple verbal commands and some assessment of the quality of the infant’s vocalization, but there are no items that call for abstraction, generalization, reasoning, or problem solving. Such items can be successfully introduced only after about age 2 or 3, and then only in a rudimentary form. Hence, tests before about age 4 or 5 are not as highly g loaded as later tests and are therefore rather poor predictors of scores on the much more g-loaded tests given to school-age children and adults. Below 2 years, scores on infant tests of development correlate negligibly with school-age IQs, and below 1 year of age the scores have zero correlation with IQ at maturity, provided that one excludes infants who are obviously brain damaged or have other gross pathological conditions.

Beyond age 2, however, most of the variance in Stanford-Binet IQs is attributable to the same general factor at every age level, steadily rising from about 60 percent g variance at age 2 to about 90 percent by age 10. The same thing is very likely true also of the Wechsler scales, in which the same types of subtests (though of course different items) are used throughout the age range from 5 years to adult.

Different abilities show varying degrees of stability from age to age. More complex and “higher,” or g-loaded, functions, such as reading, arithmetic, spelling, sentence completion, composition, and the like have been found to be more stable than simpler abilities such as number checking, handwriting, auditory memory span, and the like (Keys, 1928, p. 6). The various subtests of the General Aptitude Test Battery (developed by the U.S. Employment Service) display the typical differences in stability of various abilities, as shown in Table 7.12.

After g, verbal facility and knowledge appear to be the most stable, especially after maturity. The more fluid abilities such as abstract reasoning, problem solving, and memory are somewhat less stable after maturity, showing greater individual differences in rates of decline, especially in adults past middle age. In adults, crystalized abilities, as measured for example by tests of general information and vocabulary, go on gradually increasing up to middle age and often beyond, whereas the fluid abilities (e.g., matrices, block design, figure analogies, and memory span) show a gradual decline with advancing age. Overall ability level on omnibus tests of general intelligence shows little change throughout adulthood until advanced old age, as the gradual decline in fluid abilities is compensated for by the gradual increase in crystalized abilities.

Scale Artifacts. Studies of IQ stability based on age-to-age differences in IQ often show more instability of scores than would be inferred from age-to-age correlations. The explanation is that interage score differences are much more sensitive to imperfect scaling than are interage score correlations. If the units of the IQ scale are not equal from one age to another, a person will show IQ differences when there is really no change at all in his mental status relative to his age peers. The 1916 and 1937 editions of the Stanford-Binet had this scale defect as a result of calculating IQs as the ratio of mental age to chronological age (i.e., IQ = 100 MA/CA). Because this ratio had slightly different standard deviations at different ages, a constant IQ from one age to another could not represent a constant relative status. Conversely, if the person’s relative status remained stable from one year to the next, the IQ would have to change. Such a change is pure artifact due to inequalities in the IQ scale from year to year. This was the main reason for abandoning the ratio IQ and using instead a deviation IQ, which is a standardized score that represents the person’s deviation from his or her age-group mean in standard deviation units. Deviation IQs, which were adopted in the 1960 revision of the Stanford-Binet (as well as in all of the Wechsler tests and virtually all modern group tests of intelligence), maintain the same relative status from one age to another. The end result of changing from ratio IQs to deviation IQs is to reduce the age-to-age difference in IQ, although the age-to-age correlations remain the same. For example, an analysis of Stanford-Binet IQ changes in forty-two children between 6 and 12 years of age showed an average absolute change of 12.9 points for ratio IQs but only 9.8 points for deviation IQs (Pinneau, 1961).

An implication of using deviation IQs, which is too often forgotten, is that they cannot be used to compute mental age from the formula MA = CA × IQ/100. (The 1960 Stanford-Binet Manual, Part III, presents a table of the correct conversions from MA to IQ and vice versa.)
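The scale artifact can be illustrated with a child who stands exactly one standard deviation above the age-group mean at two ages. The sketch assumes hypothetical ratio-IQ standard deviations of 12 and 20 at the two ages (chosen only to make the effect visible, not taken from the Stanford-Binet norms) and a deviation-IQ scale with a standard deviation of 16. The function names are invented here.

```python
def ratio_iq(mental_age, chronological_age):
    """Ratio IQ as defined in the text: 100 * MA / CA."""
    return 100.0 * mental_age / chronological_age

def deviation_iq(score, age_mean, age_sd, scale_sd=15.0):
    """Standardized score: deviation from the age-group mean in SD units,
    re-expressed on a scale with mean 100 and the chosen SD."""
    return 100.0 + scale_sd * (score - age_mean) / age_sd

# With ratio-IQ SDs of 12 and 20 (hypothetical), a child at +1 SD has
# ratio IQs of 112 and 120 at the two ages.
younger = deviation_iq(112.0, 100.0, 12.0, scale_sd=16.0)  # 116.0
older = deviation_iq(120.0, 100.0, 20.0, scale_sd=16.0)    # 116.0
```

The ratio IQ changes by 8 points although the child's relative standing has not moved, whereas the deviation IQ is the same at both ages.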

Chapter 8 Validity and Correlates of Mental Tests

Concurrent Validity of IQ Tests. How well do scores on different IQ tests agree with one another? Do different IQ tests measure one and the same intelligence? [...]

It can be seen that the correlations range widely, with an overall mean of +.67. Many studies have been summarized in terms of the total range of correlations (i.e., the lowest and highest r’s that are found in any of the studies) and the median value of the entire set of correlations (indicated in parentheses in Table 8.5). The mean of the median values is +.77. The mean of all the lower values of the range of correlations is +.50, and the mean of all the higher values of the range is +.82. Thus the correlations among various IQ tests can be said to be most typically in the range from about +.67 to +.77. The lower limit of the range of correlations between certain tests is often the result of studies based on small samples or on atypical groups, such as retardates, psychiatric patients, college students, or other groups with a restricted range of scores. Correlations are generally higher in studies based on representative samples of the general population. Also, some of the tests showing the lowest correlations with other tests (e.g., the Draw-a-Man and the Quick Test) may be questioned as measures of intelligence even on the basis of other psychometric criteria than their poor correlations with a quite good test of intelligence such as the WISC.

Correlations between IQ tests in the range from .67 to .77 are just about what one should expect if the g loadings of most IQ tests range from .80 to .90 and the tests have little variance other than g in common. The reader may recall from Chapter 6 that the correlation between any two tests can be expressed as the sum of the products of the tests’ loadings on each of the common factors. By far the largest common factor in IQ tests is g. Tests with g loadings in the .80 to .90 range, therefore, would show intercorrelations ranging from .64 to .81. Other common factors, such as verbal ability, would tend to raise the correlations only slightly. The fact that the median correlation between the Wechsler Intelligence Scale for Children and the Stanford-Binet in forty-seven studies is .80 suggests that these two tests have g loadings of close to .90 (i.e., √.80), which is only slightly less than the reliabilities of these tests (i.e., about .95).
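The arithmetic in this paragraph can be verified directly. The sketch assumes, as the paragraph does, that g is the only factor the two tests have in common, and, for the reverse calculation, that both tests have the same g loading. The function names are invented for the illustration.

```python
import math

def expected_r(g_loading_1, g_loading_2):
    """Correlation between two tests whose only common factor is g."""
    return g_loading_1 * g_loading_2

def implied_g_loading(r):
    """g loading implied by a correlation r between two equally g-loaded tests."""
    return math.sqrt(r)

low = expected_r(0.80, 0.80)        # about .64
high = expected_r(0.90, 0.90)       # about .81
wisc_sb = implied_g_loading(0.80)   # about .894
```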

It should be remembered that the correlation between tests indicates mainly the degree to which persons maintain the same relative standing on the various tests. A high correlation does not guarantee that the IQ scores themselves will be alike on every test. It is often noticed that even though individuals remain in very much the same rank order on two different IQ tests, meaning there is a high correlation between the tests, the actual IQ scores may be quite discrepant on the two tests. The discrepancies in the two IQs may show up consistently throughout the whole range, or they may differ in direction and magnitude in the lower, middle, and upper ranges of the IQ scale. Hence the various IQ scales themselves, although they may be highly correlated, are not exactly equivalent in an absolute sense. In this respect mental testing is currently in the situation similar to the measurement of distance and weight before the adoption of uniform international standards of measurement. [...]

The most common causes of the IQ scale discrepancies among various intelligence tests are the following:

1. The tests were standardized on somewhat different populations, with different absolute means or different standard deviations, or both.

2. The IQ scales were arbitrarily assigned different standard deviations. For example, the standard deviation of IQ on the Wechsler scales is 15 and on the Stanford-Binet it is 16.

3. The IQ is a standardized score on one test and on another is derived from the MA/CA ratio (which results in a variable standard deviation at different ages).

4. The IQ scores of one or both tests are not on an equal-interval scale throughout the whole range.

5. The factorial composition of the two tests is not quite the same, at all levels of difficulty. Scores in the high, medium, or low range may be more g loaded on the one test than on the other, even though both tests overall are equally g loaded.
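The second cause in this list is the only one that simple arithmetic can remove. A minimal sketch, assuming both scales have a mean of 100 and differ only in their assigned standard deviations (the function name is invented here):

```python
def convert_iq(iq, sd_from, sd_to, mean=100.0):
    """Re-express an IQ on a scale with a different standard deviation,
    keeping the same distance from the mean in SD units."""
    return mean + sd_to * (iq - mean) / sd_from

# A Stanford-Binet IQ of 132 (SD = 16) is two SDs above the mean,
# which corresponds to 130 on a Wechsler scale (SD = 15).
wechsler_equivalent = convert_iq(132.0, 16.0, 15.0)
```

Discrepancies arising from the other four causes are not removed by such a conversion.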

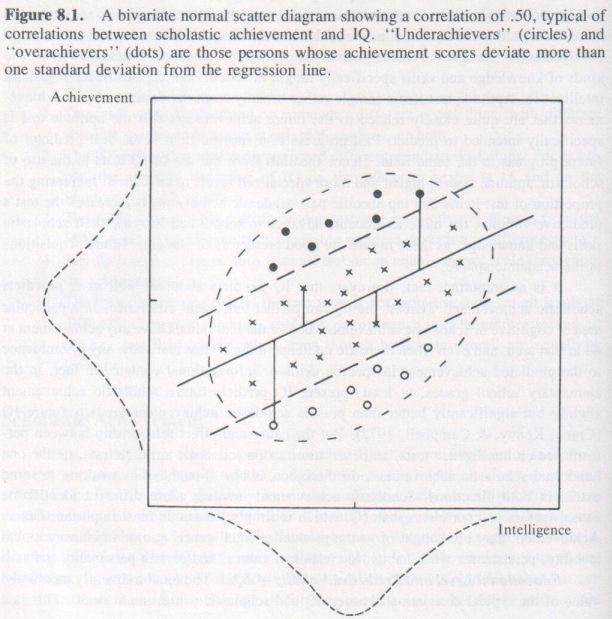

IQ not a Threshold Variable for Scholastic Achievement. Note that the regression line of achievement on intelligence in Figure 8.1 is linear throughout the entire range of the IQ scale. This is typical of the findings of the many studies that have investigated the form of the regression of achievement on IQ. ... There is no point on the IQ scale below which or above which IQ is not positively related to achievement. This means that IQ does not act as a threshold variable with respect to scholastic achievement, as has been suggested by some of the critics of IQ tests ... The evidence is overwhelming that scholastic achievement increases linearly as a function of IQ throughout the entire range of the IQ scale so long as scholastic achievement itself is measured on a continuous scale unrestricted by the artifacts of ceiling or floor effects due to the achievement tests not including simple enough or advanced enough items.

Achievement in Elementary School. Results quite typical of those found in most studies of the predictive validity of IQ are seen in a large-scale study by Crano, Kenny, and Campbell (1972). It has the added advantage of showing both the concurrent and predictive validities of IQ. Achievement was measured by a composite score on the Iowa Tests of Basic Skills, which measure achievement and skills in reading, language (spelling, punctuation, usage, etc.), arithmetic, reading of maps, graphs, and tables, and knowledge and use of reference materials. IQ was measured by the Lorge-Thorndike Intelligence Test. The tests were taken by a representative sample of 5,495 children in the Milwaukee Public Schools in Grade 4 and parallel forms of the tests were obtained again in Grade 6. Figure 8.2 shows all of the correlations among the four sets of measurements. Notice that the predictive validity of IQ over an interval of two years (IQ4-Ach6) is nearly as high as the concurrent validity (IQ4-Ach4 and IQ6-Ach6). As is typically found, past achievement predicts future achievement slightly better than IQ.

One might wonder to what extent the common factor of reading ability per se involved in group tests of IQ and achievement plays a part in such intercorrelations. It is not as great as one might imagine. Although the verbal items of group IQ tests usually involve reading, the reading level is deliberately made simpler than the conceptual demands of the items, so that individual differences in the IQ scores are more the result of general cognitive ability than of reading ability per se. The reading requirements of an IQ test for sixth-graders, for example, will typically involve a level of reading ability within the capability of the majority of fourth-graders. The Lorge-Thorndike IQ test has both Verbal and Nonverbal parts; the Verbal requires reading, the Nonverbal does not. In a large study (Jensen, 1974b) of children in Grades 4 to 6, a correlation of .70 was found between the Verbal and Nonverbal IQs. The correlation between Verbal IQ and the reading comprehension subtest of the Stanford Achievement Test was .52. The correlation between Nonverbal IQ (which involves no reading) and reading comprehension scores was .47. The correlation between Verbal IQ and reading comprehension after Nonverbal IQ is partialled out is only .29. The Verbal IQ test obviously measures considerably more than just reading proficiency.
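The partialling step can be sketched with the standard first-order partial correlation formula. Applied to the three rounded correlations quoted above, it gives roughly .30, close to the reported .29. The small gap presumably reflects rounding in the published coefficients, which is an assumption rather than something the source states. The function name is invented here.

```python
import math

def partial_r(r_xy, r_xz, r_yz):
    """Correlation between x and y with z held constant."""
    return (r_xy - r_xz * r_yz) / math.sqrt((1.0 - r_xz ** 2) * (1.0 - r_yz ** 2))

# x = reading comprehension, y = Verbal IQ, z = Nonverbal IQ
r_reading_verbal = 0.52
r_reading_nonverbal = 0.47
r_verbal_nonverbal = 0.70
verbal_given_nonverbal = partial_r(r_reading_verbal, r_reading_nonverbal, r_verbal_nonverbal)
```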

IQ and Learning to Read. Pupils’ major task in the primary grades (i.e., Grades 1 to 3) is learning to read. There are two main aspects of reading skill: decoding and comprehension. Decoding is the translation of the printed symbols into spoken language, and comprehension, of course, is understanding what is read. The learning of decoding (also called oral reading) is somewhat less predictable from IQ than is reading comprehension, which, once decoding skill has been achieved, quite closely parallels mental age. When elementary school children (all of the same age) are matched on decoding skill, their rank on a test of reading comprehension is practically the same as on IQ. In fact, reading comprehension per se is almost indistinguishable from oral comprehension once decoding is acquired. Most students with poor reading comprehension perform no better on tests of purely oral comprehension. But the reverse does not hold: there are some children (and adults) whose oral comprehension is average or superior, yet who have inordinate difficulty in the acquisition of decoding. When such disability is severe and unamenable to the ordinary methods of reading instruction, it is referred to as developmental dyslexia. Dyslexia seems to be a specific cognitive disability that does not involve g to any appreciable extent. Some dyslexics obtain high scores on both the verbal and nonverbal parts of individual IQ tests that require no reading, and they can be successful in college courses, especially in mathematics, physical sciences, and engineering, provided that someone reads their textbooks to them. There is no deficiency in comprehension per se. The vast majority of poor readers, however, are poor readers not because they lack decoding skill, but because they are deficient in comprehension, which, as measured by standard tests of reading comprehension, is largely a matter of g (E. L. Thorndike, 1917; R. L. Thorndike, 1973-74).

Here are some typical results. The Wechsler Preschool and Primary Scale of Intelligence (WPPSI), which does not involve reading, was given to children in kindergarten prior to any instruction in reading and was correlated with tests of reading achievement in first grade after one year’s instruction in reading (Krebs, 1969). Achievement was measured by the Gilmore Oral Reading Test (a test of decoding) and the reading subtests of the Stanford Achievement Test (SAT), which involve word meaning and paragraph comprehension as well as decoding. The one-year predictive validities of the WPPSI IQ scales are as follows:

| WPPSI | Gilmore Oral Reading | SAT Reading Comprehension |
| --- | --- | --- |
| Verbal Scale IQ | .57 | .61 |
| Performance Scale IQ | .58 | .63 |
| Full Scale IQ | .62 | .68 |

When the sample was divided into lower- and upper-socioeconomic-status groups, it was found that the predictive validity of IQ was higher in the lower-SES group than in the higher-SES group (e.g., SAT reading scores correlated .66 versus .40 with Full Scale IQ).

Group tests of reading readiness look a good deal like group IQ tests in item content. They are intended to predict reading achievement in the primary grades and can be taken by children prior to having received any instruction in reading. Lohnes and Gray (1972) factor analyzed seven reading readiness tests and an IQ test given to 3,956 pupils in 299 classrooms in the first weeks of the first grade, before they could read. The IQ test correlated .84 with the general factor (i.e., first principal component) common to the reading readiness tests, a higher correlation than that of any of the readiness tests themselves, which showed correlations with the general factor ranging from .44 to .81, with a median of .60. Two years later, when the same pupils were in the second grade, they were given ten reading and language achievement tests and one arithmetic computation test. These were factor analyzed, yielding correlations with the general factor of the achievement battery ranging from .64 (arithmetic computation) to .87 (reading vocabulary), with a median correlation of .80. The general factor of the reading readiness battery (including IQ) correlated .81 with the general factor of the achievement battery. Lohnes and Gray conclude:

There is no question that reading skills of pupils were observed by the criterion measurement instruments [i.e., the achievement tests given in second grade]. What these analyses reveal is that the most important single source of criterion variance, or to put it differently, the best single explanatory principle for observed variance in reading skill, was variance in general intelligence. (p. 475)

IQ Not a Stand-in for Socioeconomic Status. The claim has been made that IQ as a predictor of amount of education attained by adulthood is merely a “stand-in” for socioeconomic status. SES is indexed mainly by the father’s occupational status and the educational level of both parents. If a child’s SES determines his educational achievement or number of years spent in school, we should not expect to find a significant correlation between IQ and years of schooling among brothers reared together in the same family. Yet among brothers there is a correlation of about .30 to .35 between IQ and years of schooling as adults when IQ is measured in elementary school. (This correlation can be inferred from data presented by Jencks, 1972, p. 144.) Within-family differences in educational attainments for same-sex siblings cannot be attributed to differences in SES, “cultural differences,” or “family background.”

A study in Britain (Kemp, 1955) determined the correlations among IQ, tested scholastic achievement, and SES, with all of the intercorrelated variables consisting of the mean values obtained on these characteristics in fifty schools. The intercorrelations were as follows:

IQ and scholastic achievement = .73

IQ and SES = .52

SES and scholastic achievement = .56

When IQ is partialled out (i.e., held constant statistically) of the correlation between SES and scholastic achievement, the partial correlation drops to .30. However, when SES is partialled out of the correlation between IQ and achievement, the partial correlation drops only to .62. This means that IQ independently of SES determines achievement much more than does SES independently of IQ.
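The two partial correlations can be reproduced from the three school-level correlations with the standard first-order partial correlation formula. The following is a minimal sketch; the function and variable names are illustrative and not from the source, and the inputs are the rounded values quoted above.

```python
from math import sqrt


def partial_corr(r_xy, r_xz, r_yz):
    """First-order partial correlation of x and y with z held constant."""
    return (r_xy - r_xz * r_yz) / sqrt((1 - r_xz**2) * (1 - r_yz**2))


# Rounded school-level correlations quoted from Kemp (1955)
r_iq_ach = 0.73   # IQ and scholastic achievement
r_iq_ses = 0.52   # IQ and SES
r_ses_ach = 0.56  # SES and scholastic achievement

# SES-achievement correlation with IQ held constant
ses_ach_given_iq = partial_corr(r_ses_ach, r_iq_ses, r_iq_ach)

# IQ-achievement correlation with SES held constant
iq_ach_given_ses = partial_corr(r_iq_ach, r_iq_ses, r_ses_ach)

print(f"SES-achievement, IQ partialled out: {ses_ach_given_iq:.2f}")
print(f"IQ-achievement, SES partialled out: {iq_ach_given_ses:.2f}")
```

From these rounded inputs the first value comes out at about .31 rather than the reported .30, presumably a rounding difference; the second matches the reported .62.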

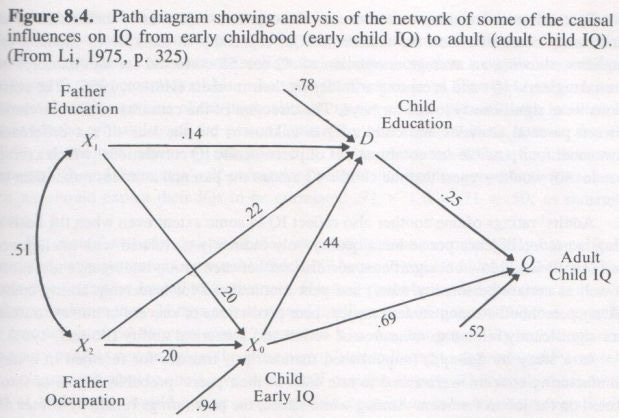

Because father’s education and occupation are the main variables in almost every composite index of SES or “family background,” it is instructive to look at the degree of causal connection between these variables and a child’s early IQ (at age 11), the child’s level of education (i.e., highest grade completed) attained by adulthood, and the child’s IQ as an adult. The intercorrelations among all these variables were subjected to a “path coefficients analysis” by the biometrician C. C. Li (1975, pp. 324-325).

Path analysis is a method for inferring causal relationships from the intercorrelations among the variables when there is prior knowledge of a temporal sequence among the variables. For example, a person’s IQ can hardly be conceived of as a causal factor in determining his or her father’s educational or occupational level. The reverse, however, is a reasonable hypothesis. The path diagram as worked out by Li (from data presented by Jencks, 1972, p. 339) is shown in Figure 8.4.

In path diagrams the observed correlations are conventionally indicated by curved lines (e.g., the observed correlation of .51 between father’s education and father’s occupation). The temporal sequence goes from left to right, and the direct paths, indicating the unique causal influence of one variable on another independently of other variables, are represented by straight lines with single-headed arrows to indicate the direction of causality. (Arrows that appear to lead from nowhere (i.e., from unlabeled variables) represent the square roots of the residual variance that is attributable to variables that are unknown or unmeasured in the given model.) We see in Figure 8.4 that the direct influences of father’s education and occupation contribute only .14² + .20² = 6 percent of the variance in the child’s final educational attainment (i.e., years of schooling) as an adult, whereas the direct effect of the child’s IQ at age 11 in determining final educational level is .44², or 19 percent of the variance. In brief, childhood IQ determines about three times more of the variance in adult educational level than father’s educational and occupational levels combined. Notice also that the father’s education and occupation combined determine only .20² + .20² = 8 percent of the variance in childhood IQ. Li concludes: “The implication seems to be that it is the children with higher IQ who go to school rather than that schooling improves children’s IQ. The indirect effect from early IQ to adult IQ via education is (0.44)(0.25) = 0.11” (p. 327).
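The variance arithmetic in this paragraph can be retraced in a few lines. This sketch only reproduces the calculations as stated in the text, using the path coefficients quoted from Figure 8.4; it does not re-estimate the path model, and the variable names are illustrative. The text does not say which of the two father-to-schooling coefficients belongs to education and which to occupation, so they are kept as an unlabeled pair.

```python
# Direct path coefficients as quoted in the text (from Figure 8.4)
father_paths_to_schooling = (0.14, 0.20)  # father's education and occupation -> child's schooling
father_paths_to_child_iq = (0.20, 0.20)   # father's education and occupation -> child's IQ at age 11
iq11_to_schooling = 0.44                  # child's IQ at age 11 -> final schooling
schooling_to_adult_iq = 0.25              # schooling -> adult IQ (second factor in Li's product)

# Squared direct paths, summed as in the text
father_share_of_schooling = sum(p**2 for p in father_paths_to_schooling)
iq_share_of_schooling = iq11_to_schooling**2
father_share_of_child_iq = sum(p**2 for p in father_paths_to_child_iq)

# Indirect effect of early IQ on adult IQ via education: product of the paths
indirect_iq_via_schooling = iq11_to_schooling * schooling_to_adult_iq

print(f"Father's variables -> schooling: {father_share_of_schooling:.1%}")
print(f"IQ at age 11 -> schooling:       {iq_share_of_schooling:.1%}")
print(f"Ratio (IQ / father):             {iq_share_of_schooling / father_share_of_schooling:.1f}")
print(f"Father's variables -> child IQ:  {father_share_of_child_iq:.1%}")
print(f"Indirect effect via schooling:   {indirect_iq_via_schooling:.2f}")
```

The ratio of the two shares of schooling variance is a little over 3, which is the basis for the "about three times" statement.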

Occupational Level, Performance, and Income

It is a consistent finding in all the studies of occupations and IQ that the standard deviation of scores within occupations steadily decreases as one moves from the lowest to the highest occupational levels on the intelligence scale. In other words, a diminishing percentage of the population is intellectually capable of satisfactory performance in occupations the higher the occupations stand on the scale of occupational status. Almost anyone can succeed as a tomato peeler, for example, and so persons of almost every intelligence level except the severely retarded may be found in such a job. But relatively few can succeed as a mathematician; no persons in the lower half of the intelligence distribution are to be found in this occupation in which nearly all who succeed are in the upper quarter of the population distribution of IQ. Thus the lower score of the total range of scores in each occupation is much more closely related to occupational status than is the upper score of the range.

Tested Ability and Performance within Occupations. The IQ and other ability test scores are considerably better at predicting persons’ occupational statuses than at predicting how well they will perform in the particular occupational niche they enter. Some one-fourth to one-half of the total IQ variance of the employed population is already absorbed in the allocation of persons to different occupations, so that there is less IQ variation left over that can enter into the correlation between IQ and criteria of success within occupations.
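The effect of this restriction of range can be illustrated with the standard formula for a correlation under direct selection on the predictor. This is only a sketch under stated assumptions: the unrestricted validity of .50 is a hypothetical value chosen for illustration (it is not from the source), the relation between IQ and the criterion is assumed linear with constant residual variance, and each occupation is assumed to retain the same share of the total IQ variance.

```python
from math import sqrt


def restricted_corr(r_full, sd_ratio):
    """Correlation expected when the predictor's SD shrinks to sd_ratio times
    its unrestricted SD (linear relation, constant residual variance)."""
    return r_full * sd_ratio / sqrt(1 - r_full**2 + (r_full * sd_ratio) ** 2)


r_full = 0.50  # hypothetical unrestricted IQ-performance correlation

# One-fourth to one-half of IQ variance absorbed by allocation to occupations
for absorbed in (0.25, 0.50):
    sd_ratio = sqrt(1 - absorbed)  # within-occupation SD relative to total SD
    r_within = restricted_corr(r_full, sd_ratio)
    print(f"variance absorbed = {absorbed:.2f} -> within-occupation r = {r_within:.2f}")
```

Under these assumptions the within-occupation correlation falls from .50 to roughly .45 and .38 for the two cases.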

Restriction of range, however, is not the major factor responsible for the often low correlations between test scores and job performance. For one thing, in the vast majority of jobs, once the necessary skills have been acquired, successful performance does not depend primarily on the ability we have identified as g. Other traits of personality, developed specialized skills, experience, and ability to get along with people become paramount in job success as it is usually judged. It has been said that in the majority of jobs, as far as employers are concerned, the most important ability is not intellectual ability but dependability.

IQ and Creativity

Critical reviews of attempts to measure creativity have concluded that various creativity tests show hardly any higher correlations with one another than with standard tests of intelligence (Thorndike, 1963b; Vernon, 1964). The g factor is common to both kinds of tests, and there seems to be no independent substantial general factor that can be called creativity. Besides g, creativity tests involve long-recognized smaller group factors usually labeled as verbal and ideational fluency. Differences between persons scoring high and persons scoring low on “creativity” tests, when they are matched on IQ, invariably consist of descriptions of personality differences rather than of characteristics that would be thought of as any kind of ability differences. Thus “creativity,” at least as presently measured, apparently is not another type of ability that contends with g for importance, as some writers might lead us to believe (Getzels & Jackson, 1962; Wallach & Kogan, 1965). While “creativity” tests may be related to certain personality characteristics, they have not been shown to be related to real-life originality or productivity in science, invention, or the arts, which are what most people regard as the criteria of creativity.

For a time it was believed that the research of Wallach and Kogan (1965) contradicted the conclusion of earlier reviews to the effect that “creativity” and intelligence are not different co-equal abilities or even factorially distinguishable traits. Wallach and Kogan had claimed that the failure of earlier researches to separate creativity and intelligence was a result of the fact that the creativity tests were usually given in the same manner as the usual psychometric tests, with time limits, as measures of some kind of ability, in an atmosphere conducive to competitiveness and self-critical standards. These conditions, it was maintained, were antithetical to the expression of creativity. So Wallach and Kogan gave their tests of creativity (better labeled as fluency) without time limits, in a very free, nonjudgmental, play-like, game-like atmosphere. Under these conditions, they found negligible correlations between several verbal intelligence tests and their “creativity” tests. The “creativity” scores, however, account for only a small percentage (2 percent to 9 percent) of the variance in any of the dependent variables measured in this study. High scorers in general tended to be less inhibited or less constricted in producing responses; they responded more energetically and fluently in the game-like setting in which the “creativity” tests were given. [...]