How to remove the influence of confounders on a (continuous) variable by way of linear regression

To see how it works, the best way is to walk through the process. If we need to remove the influence of, say, age and gender on IQ, that means IQ should no longer correlate with either gender or age. Besides, the mean IQ difference between age groups, and between gender groups, must be reduced to zero. For the present illustration, I will use the NLSY97, available here. The syntax (SPSS) is given at the end of the post.

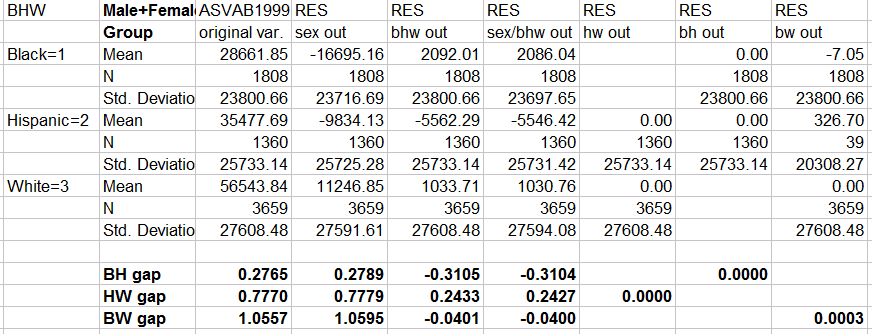

In a nutshell, the procedure consists in entering, as the dependent variable, the variable (ASVAB here) from which the influence of the covariates must be removed. These covariates could be age, gender and other demographic variables. Here, for the illustration, the independent variables are age, gender, race in 3 categories (BHW: black, hispanic, white) and race in 2 categories (BW: black-white, HW: hispanic-white, BH: black-hispanic). When performing the regression, we must "save" the residuals, either unstandardized, standardized, or both. Here, I use the unstandardized residuals, because it helps for the comparison, but the d gaps and correlations will be the same whether we use standardized values or not.
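To make the procedure concrete, here is a minimal sketch in Python rather than SPSS. It assumes a single covariate and made-up toy numbers (not NLSY97 values); the helper names `residualize` and `pearson_r` are hypothetical. It regresses a score on age, keeps the residuals, and checks that the residuals no longer correlate with age.

```python
from statistics import mean

def residualize(y, x):
    """Residuals of y after a simple OLS regression of y on x."""
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = my - slope * mx
    return [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]

def pearson_r(a, b):
    """Pearson correlation between two equal-length lists."""
    ma, mb = mean(a), mean(b)
    num = sum((ai - ma) * (bi - mb) for ai, bi in zip(a, b))
    den = (sum((ai - ma) ** 2 for ai in a)
           * sum((bi - mb) ** 2 for bi in b)) ** 0.5
    return num / den

# Made-up toy data, purely illustrative.
age = [12, 13, 14, 15, 16, 12, 13, 14, 15, 16]
score = [35, 42, 40, 55, 60, 30, 45, 50, 52, 66]

resid = residualize(score, age)  # plays the role of the saved RES_ variable

print("r(score, age) =", round(pearson_r(score, age), 3))
print("r(resid, age) is zero:", abs(pearson_r(resid, age)) < 1e-9)
```

The residuals also sum to zero, which is why the residualized variable is centered on zero in whatever sample the regression was run on.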

We begin with the racial d gaps by gender grouping. The formula for computing the d gap is shown here. The racial d gap is always expressed as the higher-scoring group minus the lower-scoring group, e.g., white minus hispanic, white minus black, hispanic minus black.
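The linked formula is not reproduced in this post. As an illustration only, the sketch below assumes the common version of Cohen's d that divides the mean difference by the pooled standard deviation; the function name `d_gap` and the numbers are made up. It also shows why standardized and unstandardized residuals give the same d: rescaling all scores by a constant leaves d unchanged.

```python
from statistics import mean, variance

def d_gap(higher, lower):
    """Cohen's d (pooled SD), expressed as higher group minus lower group."""
    n1, n2 = len(higher), len(lower)
    pooled_var = ((n1 - 1) * variance(higher)
                  + (n2 - 1) * variance(lower)) / (n1 + n2 - 2)
    return (mean(higher) - mean(lower)) / pooled_var ** 0.5

# Made-up toy scores for two groups.
group_a = [52, 60, 58, 65, 70]
group_b = [45, 50, 48, 55, 52]

d_raw = d_gap(group_a, group_b)

# Dividing every score by the same constant (as standardizing does)
# does not change d.
d_rescaled = d_gap([v / 10 for v in group_a], [v / 10 for v in group_b])

print(round(d_raw, 2), round(d_rescaled, 2))
```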

In the table above, we see something interesting. First, the ASVAB variable with BHW regressed out has racial gaps that are totally different from those of the original ASVAB. The Black-White gap has been reduced to almost zero. But the Black-Hispanic and Hispanic-White gaps are not zero. However, when the dichotomized HW variable is regressed out of the ASVAB, the HW gap is zero. The same thing happens with the BH gap when the BH variable (instead of BHW) is regressed out of the ASVAB. Why the BW gap is already reduced to almost zero with BHW is a good question. My guess is that, because BHW enters the regression as a single numeric variable, only the linear trend across its codes is removed: the lowest-scoring group is black (1) and the highest-scoring group is white (3), and controlling for the 3-category race variable expresses the scores at the midpoint of these values (0.5*(3+1)=2), which leaves the middle category (hispanic) off the line. There is another problem too. Although the BW difference is near zero, it's not exactly zero. The reason is that I have divided the sample by gender groups here, whereas I did not do so when I performed the series of regressions. So, when I examine the racial gaps with males and females grouped together, I get the following :

The next table is the male-female comparison (full sample, not disaggregated by race group). No further comment seems needed. Partialling gender out of the ASVAB always reduces the gender difference to zero. Controlling for gender means that the scores are expressed as if gender were held at its sample mean, which is about 1.5 when the two groups are of similar size (for 1=male and 2=female).

The following table now shows the gender d gap within each racial group (the gender gap is always expressed as female score minus male score, i.e., positive d gaps denote an advantage for females). This time, something unexpected happens. The gender gap among hispanics and whites is reduced to almost zero when gender is partialled out of the ASVAB, but the gender gap among blacks is only reduced by half. Why does it behave like this? Because I regressed out the effect of gender on the full sample, not separately within each racial group, so the same correction is subtracted in every group even though the raw gender gap differs between groups. This is why the correction is imperfect. The proof is given in the last 3 columns. After filtering in the race group of interest, I ran another regression and removed sex from the ASVAB. This time, the gender difference in each racial group is 0.000. I do not recommend this practice, however, because in doing so the IQ mean is centered on zero within each racial group separately, so the scores can no longer be compared across races. However imperfect it may be, I believe a regression adjustment on the full sample is the only appropriate one.
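The contrast between the two adjustments can be sketched in Python with made-up toy data (two hypothetical groups "A" and "B", sex coded 1=male and 2=female; none of these numbers come from the NLSY97). Regressing sex out on the full sample leaves a gender gap inside each group but keeps the between-group difference; regressing within each group removes the gender gap but centers every group on zero.

```python
from statistics import mean

def residualize(y, x):
    """Residuals of y after a simple OLS regression of y on x."""
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return [yi - (my + slope * (xi - mx)) for xi, yi in zip(x, y)]

# (group, sex, score): raw female-male gap is 4 in A and 8 in B.
rows = [("A", 1, 50), ("A", 1, 54), ("A", 2, 54), ("A", 2, 58),
        ("B", 1, 30), ("B", 1, 34), ("B", 2, 38), ("B", 2, 42)]
group = [r[0] for r in rows]
sex = [r[1] for r in rows]
score = [r[2] for r in rows]

# Adjustment 1: one regression on the full sample.
full = residualize(score, sex)

# Adjustment 2: a separate regression inside each group.
within = [0.0] * len(rows)
for g in ("A", "B"):
    idx = [i for i, gi in enumerate(group) if gi == g]
    res = residualize([score[i] for i in idx], [sex[i] for i in idx])
    for i, r in zip(idx, res):
        within[i] = r

def sex_gap(values, g):
    """Female mean minus male mean inside group g."""
    f = [v for v, gi, s in zip(values, group, sex) if gi == g and s == 2]
    m = [v for v, gi, s in zip(values, group, sex) if gi == g and s == 1]
    return mean(f) - mean(m)

def group_mean(values, g):
    return mean([v for v, gi in zip(values, group) if gi == g])

for g in ("A", "B"):
    print(g, "full-sample gap:", round(sex_gap(full, g), 3),
          "| within-group gap:", round(sex_gap(within, g), 3),
          "| within-group mean:", round(group_mean(within, g), 3))
print("A minus B after full-sample adjustment:",
      round(group_mean(full, "A") - group_mean(full, "B"), 3))
```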

Finally, I will show the correlations between the variables. SEX correlates at zero only with the ASVAB variables that have sex regressed out. AGE correlates at zero only with the ASVAB variables that have age regressed out. The same holds for the BHW (race) variable, which no longer correlates (r=0.000) with the ASVAB with BHW regressed out, or BH out, or HW out, or BW out. The original ASVAB correlates least with the ASVAB with BW regressed out, a little more with HW regressed out, and even more with BH regressed out. In other words, the larger the racial gap, the less the race-regressed ASVAB correlates with the original ASVAB.
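A zero correlation with BHW does not by itself mean that every pairwise gap is zero, which matches the earlier observation that the BH and HW gaps survive when BHW is regressed out. The sketch below uses made-up toy scores with a numeric group code 1, 2, 3 entered as a single predictor (as in the syntax); the helper names are hypothetical. In this balanced toy case the gap between codes 1 and 3 vanishes exactly, because the two extreme groups have the same size; with unequal group sizes it would generally only shrink.

```python
from statistics import mean

def residualize(y, x):
    """Residuals of y after a simple OLS regression of y on x."""
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return [yi - (my + slope * (xi - mx)) for xi, yi in zip(x, y)]

def covariance_sum(a, b):
    """Sum of cross-products of deviations (zero means r = 0)."""
    ma, mb = mean(a), mean(b)
    return sum((ai - ma) * (bi - mb) for ai, bi in zip(a, b))

def group_mean(values, codes, g):
    return mean([v for v, c in zip(values, codes) if c == g])

# Made-up toy data: group means 35, 40, 60 are not on a straight line.
code = [1, 1, 2, 2, 3, 3]
score = [30, 40, 35, 45, 55, 65]

resid = residualize(score, code)

gap_3_1 = group_mean(resid, code, 3) - group_mean(resid, code, 1)
gap_3_2 = group_mean(resid, code, 3) - group_mean(resid, code, 2)
gap_2_1 = group_mean(resid, code, 2) - group_mean(resid, code, 1)

print("cross-product with code:", round(covariance_sum(resid, code), 6))
print("gap 3-1:", round(gap_3_1, 3))
print("gap 3-2:", round(gap_3_2, 3))
print("gap 2-1:", round(gap_2_1, 3))
```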

My conclusion is that when we need to study the full sample, regression on the full sample poses no problem. If, however, we analyze one subset of the full sample, say, only blacks and whites, it is possible to run the regression after filtering in whites and blacks. But keep in mind that the mean IQ score will then be centered on zero within that subset of the data. That may or may not be desirable, depending on the goal of the analysis.

Addendum (1) : for information, when the covariates are removed from one variable X only, and the residualized X is then correlated with Y, the result is called a semi-partial (or part) correlation. If the same covariates have also been removed from Y before correlating X with Y, the result is a partial correlation.
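The distinction can be checked numerically. The following Python sketch uses made-up toy data and a single covariate z (the names `residualize` and `pearson_r` are hypothetical helpers). It computes both correlations from residuals and compares them with the standard textbook formulas based on the three pairwise correlations.

```python
from statistics import mean

def residualize(y, x):
    """Residuals of y after a simple OLS regression of y on x."""
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return [yi - (my + slope * (xi - mx)) for xi, yi in zip(x, y)]

def pearson_r(a, b):
    ma, mb = mean(a), mean(b)
    num = sum((ai - ma) * (bi - mb) for ai, bi in zip(a, b))
    den = (sum((ai - ma) ** 2 for ai in a)
           * sum((bi - mb) ** 2 for bi in b)) ** 0.5
    return num / den

# Made-up toy data: z is the covariate to remove.
z = [1, 2, 3, 4, 5, 6, 7, 8]
x = [2, 1, 4, 3, 6, 5, 8, 9]
y = [1, 3, 2, 5, 4, 7, 6, 9]

x_res = residualize(x, z)
y_res = residualize(y, z)

semi_partial = pearson_r(x_res, y)   # z removed from x only
partial = pearson_r(x_res, y_res)    # z removed from both x and y

# Textbook formulas, used here only as a cross-check.
rxy, rxz, ryz = pearson_r(x, y), pearson_r(x, z), pearson_r(y, z)
semi_formula = (rxy - rxz * ryz) / (1 - rxz ** 2) ** 0.5
partial_formula = (rxy - rxz * ryz) / ((1 - rxz ** 2) * (1 - ryz ** 2)) ** 0.5

print("semi-partial:", round(semi_partial, 4), round(semi_formula, 4))
print("partial:     ", round(partial, 4), round(partial_formula, 4))
```

The partial correlation is never smaller in absolute value than the semi-partial one, since it divides by an extra factor that is at most 1.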

Addendum (2) : the last analysis involves a continuous variable (e.g., age) that is partialled out. Age is entered without its squared and cubic terms (so the age effect is assumed to be linear), and none of these linear or non-linear age terms interacts with race and/or sex. If we obtain the predicted Y or the residuals in this manner, we assume there is no interaction between age and race, or between age and sex. This may not be plausible. Finally, if zero lies outside the range of values of age or of any other variable (i.e., if we didn't center the variable), the intercept of the regression model becomes meaningless, but the predicted Y and the residuals do not change at all.
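The last point, that centering changes the intercept but not the residuals, can be verified with a small Python sketch on made-up toy data (the function name `fit` is hypothetical, and only a single linear age term is assumed).

```python
from statistics import mean

def fit(y, x):
    """Simple OLS of y on x; returns (intercept, slope, residuals)."""
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = my - slope * mx
    resid = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]
    return intercept, slope, resid

# Made-up toy data: age never takes the value zero.
age = [12, 13, 14, 15, 16, 17]
score = [35, 42, 40, 55, 60, 58]

age_centered = [a - mean(age) for a in age]

b0_raw, b1_raw, res_raw = fit(score, age)
b0_cen, b1_cen, res_cen = fit(score, age_centered)

same_residuals = all(abs(r1 - r2) < 1e-9 for r1, r2 in zip(res_raw, res_cen))

print("intercept, raw age:     ", round(b0_raw, 3))  # predicted score at age 0
print("intercept, centered age:", round(b0_cen, 3))  # predicted score at mean age
print("same slope:", abs(b1_raw - b1_cen) < 1e-9)
print("same residuals:", same_residuals)
```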

Syntax :

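SET MXCELLS=9000.

COMMENT Race variables. Later IF statements overwrite earlier ones.
COMMENT BW: 1=black, 2=white.
IF (R0538700=2) BW=1.
IF (R0538700=1 AND R1482600=4) BW=2.

COMMENT BH: 1=black, 2=hispanic.
IF (R0538700=2) BH=1.
IF (R1482600=2) BH=2.

COMMENT HW: 1=hispanic, 2=white.
IF (R1482600=2) HW=1.
IF (R0538700=1 AND R1482600=4) HW=2.

COMMENT BHW: 1=black, 2=hispanic, 3=white.
IF (R0538700=2) BHW=1.
IF (R1482600=2) BHW=2.
IF (R0538700=1 AND R1482600=4) BHW=3.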

COMMENT Full-sample regressions on ASVAB (R9829600). Each run saves RES_n and ZRE_n.
COMMENT RES_1: sex (R0536300) regressed out.
REGRESSION
  /MISSING LISTWISE
  /STATISTICS COEFF OUTS R ANOVA
  /CRITERIA=PIN(.05) POUT(.10)
  /NOORIGIN
  /DEPENDENT R9829600
  /METHOD=ENTER R0536300
  /SAVE RESID ZRESID.

COMMENT RES_2: sex and age (R0536402) regressed out.
REGRESSION
  /MISSING LISTWISE
  /STATISTICS COEFF OUTS R ANOVA
  /CRITERIA=PIN(.05) POUT(.10)
  /NOORIGIN
  /DEPENDENT R9829600
  /METHOD=ENTER R0536300 R0536402
  /SAVE RESID ZRESID.

COMMENT RES_3: age regressed out.
REGRESSION
  /MISSING LISTWISE
  /STATISTICS COEFF OUTS R ANOVA
  /CRITERIA=PIN(.05) POUT(.10)
  /NOORIGIN
  /DEPENDENT R9829600
  /METHOD=ENTER R0536402
  /SAVE RESID ZRESID.

COMMENT RES_4: BHW regressed out.
REGRESSION
  /MISSING LISTWISE
  /STATISTICS COEFF OUTS R ANOVA
  /CRITERIA=PIN(.05) POUT(.10)
  /NOORIGIN
  /DEPENDENT R9829600
  /METHOD=ENTER BHW
  /SAVE RESID ZRESID.

COMMENT RES_5: sex and BHW regressed out.
REGRESSION
  /MISSING LISTWISE
  /STATISTICS COEFF OUTS R ANOVA
  /CRITERIA=PIN(.05) POUT(.10)
  /NOORIGIN
  /DEPENDENT R9829600
  /METHOD=ENTER R0536300 BHW
  /SAVE RESID ZRESID.

COMMENT RES_6: sex, age and BHW regressed out.
REGRESSION
  /MISSING LISTWISE
  /STATISTICS COEFF OUTS R ANOVA
  /CRITERIA=PIN(.05) POUT(.10)
  /NOORIGIN
  /DEPENDENT R9829600
  /METHOD=ENTER R0536300 R0536402 BHW
  /SAVE RESID ZRESID.

COMMENT RES_7: HW regressed out.
REGRESSION
  /MISSING LISTWISE
  /STATISTICS COEFF OUTS R ANOVA
  /CRITERIA=PIN(.05) POUT(.10)
  /NOORIGIN
  /DEPENDENT R9829600
  /METHOD=ENTER HW
  /SAVE RESID ZRESID.

COMMENT RES_8: BH regressed out.
REGRESSION
  /MISSING LISTWISE
  /STATISTICS COEFF OUTS R ANOVA
  /CRITERIA=PIN(.05) POUT(.10)
  /NOORIGIN
  /DEPENDENT R9829600
  /METHOD=ENTER BH
  /SAVE RESID ZRESID.

COMMENT RES_9: BW regressed out.
REGRESSION
  /MISSING LISTWISE
  /STATISTICS COEFF OUTS R ANOVA
  /CRITERIA=PIN(.05) POUT(.10)
  /NOORIGIN
  /DEPENDENT R9829600
  /METHOD=ENTER BW
  /SAVE RESID ZRESID.

COMMENT Sex regressed out separately within each race group.
COMMENT RES_10: blacks only (BHW=1).
USE ALL.
COMPUTE filter_$=(BHW=1).
VARIABLE LABELS filter_$ 'BHW=1 (FILTER)'.
VALUE LABELS filter_$ 0 'Not Selected' 1 'Selected'.
FORMATS filter_$ (f1.0).
FILTER BY filter_$.
EXECUTE.

REGRESSION
  /MISSING LISTWISE
  /STATISTICS COEFF OUTS R ANOVA
  /CRITERIA=PIN(.05) POUT(.10)
  /NOORIGIN
  /DEPENDENT R9829600
  /METHOD=ENTER R0536300
  /SAVE RESID ZRESID.

COMMENT RES_11: hispanics only (BHW=2).
USE ALL.
COMPUTE filter_$=(BHW=2).
VARIABLE LABELS filter_$ 'BHW=2 (FILTER)'.
VALUE LABELS filter_$ 0 'Not Selected' 1 'Selected'.
FORMATS filter_$ (f1.0).
FILTER BY filter_$.
EXECUTE.

REGRESSION
  /MISSING LISTWISE
  /STATISTICS COEFF OUTS R ANOVA
  /CRITERIA=PIN(.05) POUT(.10)
  /NOORIGIN
  /DEPENDENT R9829600
  /METHOD=ENTER R0536300
  /SAVE RESID ZRESID.

COMMENT RES_12: whites only (BHW=3).
USE ALL.
COMPUTE filter_$=(BHW=3).
VARIABLE LABELS filter_$ 'BHW=3 (FILTER)'.
VALUE LABELS filter_$ 0 'Not Selected' 1 'Selected'.
FORMATS filter_$ (f1.0).
FILTER BY filter_$.
EXECUTE.

REGRESSION
  /MISSING LISTWISE
  /STATISTICS COEFF OUTS R ANOVA
  /CRITERIA=PIN(.05) POUT(.10)
  /NOORIGIN
  /DEPENDENT R9829600
  /METHOD=ENTER R0536300
  /SAVE RESID ZRESID.

FILTER OFF.
USE ALL.
EXECUTE.

MEANS TABLES=R9829600 RES_1 RES_4 RES_5 RES_7 RES_8 RES_9 BY R0536300
  /CELLS MEAN COUNT STDDEV.

MEANS TABLES=R9829600 RES_1 RES_4 RES_5 RES_7 RES_8 RES_9 BY BHW
  /CELLS MEAN COUNT STDDEV.

MEANS TABLES=R9829600 RES_1 RES_4 RES_5 RES_7 RES_8 RES_9 BY R0536300 BY BHW
  /CELLS MEAN COUNT STDDEV.

MEANS TABLES=R9829600 RES_1 RES_4 RES_5 RES_7 RES_8 RES_9 RES_10 RES_11 RES_12 BY BHW BY R0536300
  /CELLS MEAN COUNT STDDEV.

CORRELATIONS
  /VARIABLES=R0536300 R0536402 BHW HW BH BW R9829600 RES_1 RES_2 RES_3 RES_4 RES_5 RES_6 RES_7 RES_8 RES_9
  /PRINT=TWOTAIL NOSIG
  /MISSING=PAIRWISE.