The Nature and Nurture of High IQ: An Extended Sensitive Period for Intellectual Development

Angela M. Brant, Yuko Munakata, Dorret I. Boomsma, John C. DeFries, Claire M. A. Haworth, Matthew C. Keller, Nicholas G. Martin, Matthew McGue, Stephen A. Petrill, Robert Plomin, Sally J. Wadsworth, Margaret J. Wright, and John K. Hewitt. 2013.

Abstract

IQ predicts many measures of life success, as well as trajectories of brain development. Prolonged cortical thickening observed in individuals with high IQ might reflect an extended period of synaptogenesis and high environmental sensitivity or plasticity. We tested this hypothesis by examining the timing of changes in the magnitude of genetic and environmental influences on IQ as a function of IQ score. We found that individuals with high IQ show high environmental influence on IQ into adolescence (resembling younger children), whereas individuals with low IQ show high heritability of IQ in adolescence (resembling adults), a pattern consistent with an extended sensitive period for intellectual development in more-intelligent individuals. The pattern held across a cross-sectional sample of almost 11,000 twin pairs and a longitudinal sample of twins, biological siblings, and adoptive siblings.

Adult IQ is a measure of cognitive ability that is predictive of social and occupational status, educational and job performance, adult health, and longevity (Gottfredson, 1997; Neisser et al., 1996; Whalley & Deary, 2001). Individuals with IQ scores at the high end of the distribution show distinct timing of postnatal structural changes in cortical regions known to support intelligence, and it has been posited that this distinct pattern of cortical development reflects an extended sensitive period (Shaw et al., 2006). Specifically, change in cortical thickness in frontal and temporal regions follows a cubic function during development, with these regions initially thickening in childhood and then thinning in late childhood and adolescence, until change levels out in young adulthood (see also Shaw et al., 2008); these changes match the patterns of synaptogenesis and pruning observed in post-mortem prefrontal tissue (Petanjek et al., 2011). Compared with individuals of average and high IQ, those with superior IQ show more intense and prolonged cortical thickening, followed by more rapid thinning. This distinct trajectory may reflect prolonged synaptogenesis and an extended sensitive period, during which the brain is particularly responsive to environmental input (Shaw et al., 2006).

Further evidence for a link between cortical thickness and IQ comes from work showing overlap in the genes that influence change in cortical thickness and IQ in adulthood (Brans et al., 2010). In addition, across development, IQ and cortical thickness show similar patterns of change in the magnitude of genetic and environmental influences. Specifically, the heritability (magnitude of genetic influence) of IQ and the heritability of cortical thickness in brain regions associated with IQ both increase during childhood and adolescence, while environmental influences decrease in importance (Bartels, Rietveld, Van Baal, & Boomsma, 2002; Brant et al., 2009; Haworth et al., 2010; Lenroot et al., 2009).

These results are suggestive of an extended sensitive period for IQ development: Cortical thickening, which is positively associated with IQ, occurs over an extended period for individuals with higher IQ, and this extended period of thickening may correspond to prolonged sensitivity to the environment. These results are only suggestive, however, because developmental changes do not necessarily correspond to changes in sensitivity to the environment. There is no direct evidence for individual differences in the length of a sensitive period for IQ.

We empirically tested this extended-sensitive-period hypothesis by examining changes in the magnitude of genetic and environmental influences on individual differences in IQ scores throughout development. As noted, the magnitude of environmental influences on IQ decreases across development. We tested whether these decreases occur later in development for individuals with higher IQ, which would be consistent with a prolonged sensitivity to the environment. We focused on influences of the shared family environment rather than individual-specific environment because the developmental change in environmental influences on intelligence is driven mainly by a reduction in the influence of the shared family environment. Additionally, the shared family environment should arguably be the driving force behind experiential influences on IQ because shared family environmental influences are highly correlated across different ages, such that their effects can accumulate across development, whereas individual-specific environmental factors tend to be more age-specific and include measurement error (Brant et al., 2009).

We used a cross-sectional sample of almost 11,000 twin pairs ages 4 to 71 years and a smaller longitudinal replication sample of twins, biological siblings, and adoptive siblings tested from ages 1 to 16. Previously published investigations using the data sets we examined tested for differences between individuals with high IQ and those with IQ in the normal range. Although no difference was reported in the etiology of individual differences (Haworth et al., 2009; cross-sectional Genetics of High Cognitive Ability, or GHCA, Consortium sample) or in their trajectories of developmental change (Brant et al., 2009; Longitudinal Twin Sample), these investigations treated IQ as a discrete variable rather than examining continuous trends, and they did not test whether the relationship between IQ score and heritability of IQ or magnitude of environmental influence on IQ was specific to adolescence. In the present study, we tested this possibility explicitly. We predicted that environmental influences on IQ would remain high for longer in higher-IQ individuals, and that genetic influences, conversely, would remain lower for longer. Thus, we expected that IQ score would be associated with the magnitude of genetic and environmental influences on IQ in adolescence (but not in childhood, when environmental influences should be high regardless of IQ, or in adulthood, when genetic influences should be high, regardless of IQ).

Method

Participants and measures

Participants for the initial cross-sectional analysis were 10,897 monozygotic (MZ; identical) and dizygotic (DZ; fraternal) twin pairs from the Genetics of High Cognitive Ability (GHCA) Consortium sample, which was recruited by six institutions in four different countries (United States, United Kingdom, The Netherlands, and Australia). Zygosity was determined in almost all cases by analysis of DNA microsatellites, blood group polymorphisms, or other genetic variants (see Supplementary Appendix 1 in the Supplemental Material available online). The sample is described in detail elsewhere (Haworth et al., 2010) and is summarized in Table 1.

The longitudinal sample included MZ and DZ twins from the Colorado Longitudinal Twin Study (LTS) and adoptive and biological sibling pairs from the Colorado Adoption Project (CAP), two prospective community studies of behavioral development at the Institute for Behavioral Genetics, University of Colorado at Boulder. In total, 483 same-sex twin pairs (264 MZ and 219 DZ pairs), identified from local birth records, have participated in the LTS. [1] Twin zygosity status was determined using 12 molecular genetic markers, as described elsewhere (Haberstick & Smolen, 2004). In the CAP, families with an adoptive child and matched community families were identified when the children were infants. If siblings were subsequently born or adopted into the families, they were included in the study. In many families, more than one sibling pair was available. The current analysis used the pair with complete IQ data at the most ages. The final sample consisted of 185 biological sibling pairs and 184 adoptive sibling pairs. IQ data for age 16 only were available for 64 biological pairs and 75 adoptive pairs. IQ scores were standardized within age and across samples to maintain the slightly higher mean scores in the CAP than in the LTS. Table 2 presents demographic information on both samples and lists the IQ tests administered at the seven assessment waves, along with mean scores. For more details on the samples, see Rhea, Gross, Haberstick, and Corley (2006; LTS) and DeFries, Plomin, and Fulker (1994; CAP).

Twin methodology

Extensions of DeFries-Fulker regression, a special case of linear regression for deriving genetic and environmental components of variance in pairs of related individuals, were employed. We used DeFries-Fulker regression (for details, see Cherny, Cardon, Fulker, & DeFries, 1992) to predict the score of one member of a sibling pair from the score of the other, the coefficient of relationship (1.0 for MZ twins, who share 100% of their segregating alleles; .5 for DZ twins and biological siblings, who are 50% genetically related on average; and .0 for adoptive siblings, who are not genetically related), and the interaction between these two variables. When the data are standardized, as they were in this study, this regression yields direct estimates of the heritability (h²) of the measured trait and the proportional influence of the family-wide environment (c²) on differences between individuals in the sampled population. The influence of individual-specific environments (e²) can be derived by subtraction. Other variables of interest, which are allowed to interact with the standard predictors, can be added into the equation to test whether they affect the magnitude of either h² or c². In the current study, we were interested in whether the magnitude of h² or c² for IQ is moderated by IQ score itself, so we added a quadratic ability term (the square of the predictor sibling’s score) and the interaction of this quadratic term and the coefficient of relationship (Cherny et al., 1992). The significance of these interaction terms assessed whether IQ score had linear, continuous relationships with c² and h², respectively.
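As an illustration of the regression just described, the following is a minimal sketch on simulated data, not the authors' code. It assumes the textbook form of the augmented model, C = b0 + b1·P + b2·R + b3·P·R + b4·P² + b5·P²·R, in which b1 estimates c², b3 estimates h², and b4 and b5 capture ability dependence of c² and h². The exact equations used in the study are in the Supplementary Appendix and may differ. The function names, sample sizes, and simulated values (h² = .50, c² = .20) are hypothetical.

```python
import numpy as np

def simulate_pairs(n_pairs, relatedness, h2, c2, rng):
    """Simulate standardized scores for pairs with a given coefficient of relationship."""
    # Under a simple additive model the expected pair correlation is R * h2 + c2.
    rho = relatedness * h2 + c2
    cov = [[1.0, rho], [rho, 1.0]]
    return rng.multivariate_normal([0.0, 0.0], cov, size=n_pairs)

def df_fit(scores, relatedness):
    """Augmented DeFries-Fulker regression on double-entered, standardized scores."""
    # Double entry: each member serves once as predictor (P) and once as outcome (C).
    p = np.concatenate([scores[:, 0], scores[:, 1]])
    c = np.concatenate([scores[:, 1], scores[:, 0]])
    r = np.concatenate([relatedness, relatedness])
    X = np.column_stack([np.ones_like(p), p, r, p * r, p**2, p**2 * r])
    beta, *_ = np.linalg.lstsq(X, c, rcond=None)
    names = ["intercept", "c2", "r", "h2", "c2_by_ability", "h2_by_ability"]
    return dict(zip(names, beta))

rng = np.random.default_rng(0)
# Hypothetical design: MZ twins (R = 1), DZ twins or siblings (R = .5), adoptive siblings (R = 0).
groups = [(1.0, 20000), (0.5, 20000), (0.0, 20000)]
scores = np.vstack([simulate_pairs(n, r, 0.5, 0.2, rng) for r, n in groups])
relatedness = np.concatenate([np.full(n, r) for r, n in groups])

estimates = df_fit(scores, relatedness)
print({name: round(float(value), 3) for name, value in estimates.items()})
```

Because the simulated scores are bivariate normal with no built-in ability dependence, the two quadratic coefficients should come out near zero here. In real data, a nonzero coefficient on P² or P²·R is what would indicate that c² or h² changes with IQ score. The point estimates are unaffected by double entry, but the naive standard errors are too narrow.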

To directly test the extended-sensitive-period hypothesis, we were also interested in whether any effect of IQ score on h² or c² was restricted to a certain age range. We therefore estimated the coefficient for the quadratic ability term separately at each measured age. In the cross-sectional GHCA sample, we were additionally able to test for significant differences in the magnitude of the ability-dependent terms across ages by adding an age covariate into the regression equation. The extended-sensitive-period hypothesis predicts a relationship between IQ score and estimates of h² and c² only in adolescence (i.e., the coefficient for the age term should be zero at all other times). For this reason, continuous modeling of the effect of age was not possible, and we therefore decided to use discrete age categories. We split the sample into three age groups: childhood (4–12 years; 6,044 pairs), adolescence (13–18 years; 4,304 pairs), and adulthood (18+ years; 549 pairs). We then constructed orthogonal contrast codes: a linear code comparing the childhood and adulthood groups and a quadratic code comparing these groups collectively with the adolescence group.
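The age grouping and contrast codes can be sketched as follows. The numeric code values and the handling of the boundary at age 18 are assumptions made for illustration; the text reports only the group definitions and pair counts.

```python
import numpy as np

# Pair counts per age group, as reported in the text.
pair_counts = {"childhood": 6044, "adolescence": 4304, "adulthood": 549}

# Hypothetical code values (the paper does not list the numbers it used).
# Linear: childhood vs. adulthood. Quadratic: adolescence vs. the other two groups.
linear_code = {"childhood": -1.0, "adolescence": 0.0, "adulthood": 1.0}
quadratic_code = {"childhood": -1.0, "adolescence": 2.0, "adulthood": -1.0}

def age_group(age_years):
    """Assign an age group. Placing exactly 18 in adolescence is an assumption."""
    if age_years < 13:
        return "childhood"
    if age_years <= 18:
        return "adolescence"
    return "adulthood"

groups = list(pair_counts)
lin = np.array([linear_code[g] for g in groups])
quad = np.array([quadratic_code[g] for g in groups])

total_pairs = sum(pair_counts.values())
print(total_pairs)        # 10897, matching the reported sample size
print(float(lin @ quad))  # 0.0, so the two codes are orthogonal
```

The codes are orthogonal as vectors over the three groups. Because the groups differ greatly in size, the coded columns would still be correlated across the pairs in the sample.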

Because our hypothesis predicts that the values of h² and c² are dependent on IQ score only in adolescence (i.e., higher-scoring adolescents will have childlike influences on IQ, and lower scorers will have adult-like influences), we expected that the three-way interactions of the quadratic age contrast code, ability, and the h² and c² terms would be significant, whereas the corresponding terms for the linear age code would not be (as no interactions with ability were expected in either childhood or adulthood). Although the appropriate boundaries between the age categories were somewhat ambiguous, the broad expected pattern was clear, so we chose childhood, adolescence, and adulthood age boundaries as typically defined.

In the longitudinal sample, we added an extra covariate, age gap in days between the siblings in each pair (0 for all twin pairs), into the regression, and all results reported for this sample are from analyses including the interaction of this covariate with the c² and h² terms and the ability-dependent terms. Because maximum sharing of the family environment occurs when siblings are the same age, and the groups in our sample differed systematically not only by genetic relatedness but also by average age gap (adoptive siblings being more disparate than biological siblings, who in turn were more disparate than twins), it was prudent to account for this confounding variable in the analysis, so as not to overestimate the magnitude of h².

For every analysis reported, each pair appeared twice in the data set, with the IQ score of each member appearing once as a predictor and once as a dependent variable. This is routine in DeFries-Fulker regression using unselected samples because there is no a priori reason to favor a particular twin assignment. This procedure does, however, artificially narrow the standard errors derived from regression analysis (which assumes independence). We addressed this concern in the GHCA sample by bootstrapping the regression estimates, resampling first at the family level and then at the twin assignment level, and in the longitudinal sample by using the robust standard error correction outlined by Kohler and Rodgers (2001), which accounts for observations being independent at the level of the twin pair but not at the level of the individual. Further explanation of all analyses, including the regression equations, can be found in Supplementary Appendix 1 in the Supplemental Material.
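The bootstrap used for the GHCA sample can be sketched roughly as follows. This is one reading of "resampling first at the family level and then at the twin assignment level": pairs are drawn with replacement, and within each drawn pair the twin serving as predictor is chosen at random. The function names, simulated values, and number of bootstrap replicates are hypothetical, and the authors' actual procedure (Supplementary Appendix 1) may differ.

```python
import numpy as np

def df_coefficients(p, c, r):
    """Basic DeFries-Fulker regression; returns (c2, h2) estimates."""
    X = np.column_stack([np.ones_like(p), p, r, p * r])
    beta, *_ = np.linalg.lstsq(X, c, rcond=None)
    return beta[1], beta[3]

def bootstrap_df(scores, relatedness, n_boot, rng):
    """Percentile 95% intervals for (c2, h2), resampling whole pairs."""
    n = len(scores)
    draws = np.empty((n_boot, 2))
    for b in range(n_boot):
        idx = rng.integers(0, n, size=n)   # level 1: resample families
        s, r = scores[idx], relatedness[idx]
        flip = rng.random(n) < 0.5         # level 2: random twin assignment
        p = np.where(flip, s[:, 1], s[:, 0])
        c = np.where(flip, s[:, 0], s[:, 1])
        draws[b] = df_coefficients(p, c, r)
    # Rows: 2.5th and 97.5th percentiles. Columns: c2, h2.
    return np.percentile(draws, [2.5, 97.5], axis=0)

rng = np.random.default_rng(0)
h2_true, c2_true = 0.5, 0.2  # hypothetical simulation values
pairs, rel = [], []
for r_coef, n_pairs in [(1.0, 2000), (0.5, 2000)]:  # MZ and DZ twins
    rho = r_coef * h2_true + c2_true
    pairs.append(rng.multivariate_normal([0, 0], [[1, rho], [rho, 1]], size=n_pairs))
    rel.append(np.full(n_pairs, r_coef))
scores, relatedness = np.vstack(pairs), np.concatenate(rel)

ci = bootstrap_df(scores, relatedness, n_boot=200, rng=rng)
print("c2 95% CI:", ci[:, 0].round(3))
print("h2 95% CI:", ci[:, 1].round(3))
```

Resampling whole pairs keeps the two members of a family together, so the interval width reflects the number of independent families rather than the doubled number of rows in a double-entered data set.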

Results

Cross-sectional analysis

Characteristics of the GHCA sample and sample-wide analysis. The mean age of the GHCA sample was 13.06 years (range: 4.33–71.03 years; see Table 1). Mean age differed considerably among the subsamples, from 6 years in the Western Reserve Reading Project to almost 18 years in the Netherlands Twin Register. There was also a considerable difference in the age range across the samples, so that some age groups were primarily made up of particular samples. Table 1 reports the percentage of pairs within each age group in each subsample and in the total sample. The IQ tests administered differed across subsamples (see Table 1), but all were age-appropriate, widely used, and validated tests. For the analyses reported here, after residualization for age and sex, the IQ scores were standardized within each subsample to maintain the subsample structure.
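The preprocessing step described above can be sketched as follows, under two assumptions that the text does not confirm: that age and sex effects were removed with a linear regression, and that this regression was run separately within each subsample. The function name and the toy data are hypothetical.

```python
import numpy as np

def residualize_and_standardize(iq, age, sex, subsample):
    """Remove linear age and sex effects, then z-score, within each subsample."""
    out = np.empty_like(iq, dtype=float)
    for label in np.unique(subsample):
        mask = subsample == label
        X = np.column_stack([np.ones(mask.sum()), age[mask], sex[mask]])
        beta, *_ = np.linalg.lstsq(X, iq[mask], rcond=None)
        resid = iq[mask] - X @ beta
        out[mask] = (resid - resid.mean()) / resid.std(ddof=1)
    return out

# Toy data: two hypothetical subsamples with different age ranges.
rng = np.random.default_rng(1)
n = 500
subsample = np.repeat(np.array(["A", "B"]), n)
age = np.concatenate([rng.uniform(4, 12, n), rng.uniform(13, 18, n)])
sex = rng.integers(0, 2, size=2 * n).astype(float)
iq = 100 + 0.8 * age + 2.0 * sex + rng.normal(0, 15, size=2 * n)

z = residualize_and_standardize(iq, age, sex, subsample)
for label in ("A", "B"):
    m = subsample == label
    print(label, round(float(z[m].mean()), 6), round(float(z[m].std(ddof=1)), 6))
```

Standardizing within subsample puts scores from different IQ tests on a common scale before the DeFries-Fulker regression is fitted to the pooled data.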

For the sample as a whole, h² was .55 (95% confidence interval, or CI = [.49–.61]), c² was .22 (95% CI = [.18–.26]), and e² was .23 (95% CI = [.16–.39]). These findings closely match the results obtained in the same sample using different methodology (structural equation modeling; Haworth et al., 2010). When we examined the influence of IQ score on these parameters, we found a significant effect on c² (β = 0.036, p = .026), such that the influence of c² increased as IQ score increased. There was a slight trend for a decrease in h² as IQ score increased (β = −0.027, p = .187). As anticipated (for reasons outlined in the introduction), there were no detectable influences of IQ score on the magnitude of e². For this reason, we do not discuss this predictor further.
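As a quick check on the point estimates, the derivation of e² by subtraction described in the Twin Methodology section reproduces the reported value when the three standardized components are taken to sum to 1:

$$
e^2 = 1 - h^2 - c^2 = 1 - .55 - .22 = .23
$$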

Age as a moderating variable. Separate analyses of the subsamples revealed variability in the strength of the relationship between IQ score and the causal influences on IQ (h² and c², which we refer to collectively as etiology), suggesting a moderation of this relationship by age. We therefore performed the regression analysis with age as an interacting variable, as described in the Twin Methodology section, to test the age dependence of the interaction between IQ score and both h² and c². As expected, the linear age contrast did not moderate the score-etiology relationship (in separate analyses of the age groups, the score-etiology relationship in both childhood and adulthood was not significantly different from zero). However, the quadratic contrast code, comparing the adolescence group with the childhood and adulthood groups collectively, revealed a significantly more pronounced score-etiology relationship in adolescence, a finding consistent with the extended-sensitive-period hypothesis. Specifically, both the increase in c² and the decrease in h² as IQ score increased were significantly greater in adolescence (β = 0.05, p = .04, and β = −0.06, p = .04, respectively). In adolescence, IQ score predicted the magnitude of genetic influence (β = −0.14, p < .001) and environmental influence (β = 0.12, p < .001), in a manner consistent with lower-IQ individuals transitioning earlier to an adultlike pattern of these influences.

Analyses removing scores below the 5th and above the 95th percentile ruled out undue influence of extreme scores on the results. We also assessed whether any of these results differed according to sex by repeating the analysis with non-sex-residualized data and adding sex as an interacting variable. Males had a slightly higher mean IQ than females (βsex = 0.061, p < .001), as would be predicted given the age range of our sample (Lynn & Kanazawa, 2011). However, no significant interactions with sex were found.

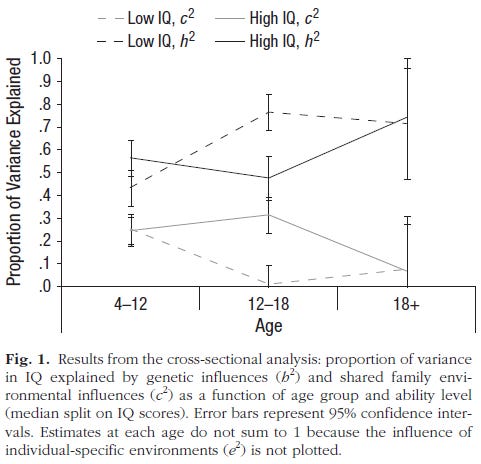

Transitions in causal influences. Figure 1 displays h² and c² in the three age groups, estimated separately for the top and bottom halves of the ability distribution (median split) at each age. [2] The figure shows that the estimates of both h² and c² changed with age, with the magnitude of shared environmental influence decreasing and the magnitude of genetic influence increasing between childhood and adulthood, a pattern consistent with previous results in this sample and others (see, e.g., Haworth et al., 2010). The magnitude of these effects was largely equal for the top and bottom halves of the ability distribution, and there were consistent beginning and end points in developmental change irrespective of ability level. However, the timing of this transition was different for the two ability groups. For the lower-ability group, the period of maximum change occurred between childhood and adolescence. There was largely no change between adolescence and adulthood. For the higher-ability group, in contrast, there was largely no change between childhood and adolescence, and the change in causal influence occurred between adolescence and adulthood.

Longitudinal sample

The estimates of h² and c² for each of the seven testing ages (with age gap modeled) are presented in Table 3. The pattern of increasing genetic influence and decreasing influence of the shared environment corroborates our findings in the cross-sectional analysis, with h² rising from .42 at age 1 to .85 at age 16, and c² showing the opposite effect, decreasing from a high of .39 to a low of .01. Additionally, we confirmed the influence of IQ score on the estimates of these parameters in adolescence (last two columns of Table 3). At age 16, the estimate of c² increased and the estimate of h² decreased as ability increased, but ability was not a significant moderator of these parameters at the earlier ages. We were, however, unable to test the sample in adulthood to confirm the transience of this effect.

Discussion

We have presented evidence, from two separate sets of data, that supports the existence of a sensitive period in IQ development that is extended in individuals of higher IQ. Using a large cross-sectional twin data set, we found a shift in causal influences on IQ between childhood and adulthood — a shift away from environmental and toward genetic influences. Moreover, we found that the period of childlike levels of environmental influence was prolonged in higher-IQ individuals, whereas lower-IQ individuals shifted earlier to an adultlike pattern. Thus, higher IQ is associated with a prolonged sensitive period. This result was replicated in a longitudinal sample of twin, biological, and adoptive siblings. The change in environmental influence was driven by the family-wide environment and not the individual-specific environment (including measurement error), which is consistent with prior longitudinal behavior genetic research showing age-related changes in the relative magnitude of the former but not the latter component of variance.

Alternative explanations of these results can be ruled out (see Supplementary Appendix 2 in the Supplemental Material for details). First, assortative mating (the tendency for parents to resemble each other in cognitive ability) could artifactually increase the influence of the family-wide environment, and therefore could have contributed to our results if assortative mating were higher in the parents of higher-IQ individuals. However, we found that higher-IQ parents actually showed less assortative mating; the difference between parental IQ scores was positively correlated with mean parental IQ score. Thus, assortative mating could have contributed only to an underestimation of the strength of the results reported here. Second, if different traits were measured at different IQ levels, and these traits differed in their extent of genetic and environmental influences, this could have given a false impression of a single trait that varied by IQ in the extent of genetic and environmental influences. However, principal component analyses showed that the same trait was measured across IQ levels.

Finally, genotype-environment interactions could have contributed to our results if the environmental variables were correlated with IQ, and estimates of environmental influence were greater for higher levels of the environmental variables. We tested for gene-environment interactions in the LTS twins’ age-16 IQ scores, using parental education and parental IQ. However, no interaction was present for parental education, and heritability of IQ was higher at higher levels of parental IQ, which would cause underestimation of the interaction between the individual’s own score and his or her environmental sensitivity. Moreover, all of these alternative explanations would face an additional challenge in explaining why the link between IQ and genetic and environmental influence changed across development.

Our findings raise the question of why a prolonged sensitive period in IQ development might be associated with higher IQ. One possibility is that protracted development is beneficial for development of higher and uniquely human cognitive functions, such as those measured by IQ tests (Rougier, Noelle, Braver, Cohen, & O’Reilly, 2005). This idea may be supported if future research determines that higher-IQ individuals have genetic polymorphisms that limit the rate of developmental cellular changes. Similar arguments have been made for prolonged immaturity being beneficial for other aspects of cognitive development (Bjorklund, 1997; Newport, 1990; Thompson-Schill, 2009). However, individuals with an eventual high IQ tend to score high from early in development (Deary, Whalley, Lemmon, Crawford, & Starr, 2000), which challenges the idea that prolonged immaturity alone leads to higher IQ.

An alternative possibility is that having a higher IQ prolongs sensitivity to the environment. For example, heightened levels of attention and arousal, as one may find in individuals of higher IQ, may allow plasticity to occur later into development (Knudsen, 2004). A related idea is that individuals of higher IQ may be more open to experience, more likely to try things and change in response to experience, whereas lower-IQ individuals may be less motivated to engage with IQ-promoting environments, as they do not get as much positive feedback from learning experiences. However, this explanation also faces some challenges. The increase in genetic influence over development comes from both an increase in the importance of existing genetic influences and the addition of new genetic influences (Brant et al., 2009). If the extension of the sensitive period is a feedback process from increased cognitive ability, it is unclear how this feedback process would lead to a delay in the introduction of new genetic influences.

The most prominent theory of developmental increases in the heritability of IQ posits that across development, individuals gain more scope to shape their own environments on the basis of their genetic propensities (active gene-environment correlation), which causes an increase in genetic influence over time (Haworth et al., 2010; Plomin, DeFries, & Loehlin, 1977). Our results challenge this explanation, as they show a later increase in heritability for individuals of higher IQ. To explain our results in the context of active gene-environment correlations, one would need to posit, counterintuitively, that higher-IQ individuals seek out environments concordant with their genetic propensities later in development than do lower-IQ individuals.

The reason for developmental increases in the heritability of IQ thus remains unclear. Other possibilities include amplification of existing genetic influence by increasing population variance in cognitive ability and the simultaneous limiting of environmental influences and introduction of new genetic influences as a result of synaptic pruning processes and myelination at the end of the sensitive period (Plomin, 1986; Plomin et al., 1977; Tau & Peterson, 2010). Although resolving this debate is beyond the scope of the current work, our key contribution is in showing for the first time that the timing of the decline in the magnitude of environmental influence depends on IQ, which is consistent with the extended-sensitive-period hypothesis. Further research investigating the developmental influence of specific genes and environments, aided by a better molecular-level understanding of the mechanisms underlying typical brain development, will help resolve this question.

Our results suggest that, like cortical thickness, other brain-related measures (e.g., functional connectivity, synaptic density, and characteristics of neurotransmitter systems) will show changing relationships to IQ across development, and that the timing of these changes will be dependent on IQ score. Thus, our results point to an important new direction in the search for biological and cognitive markers of IQ, and in the study of the genetic variation and developmental processes underlying individual differences in cognitive ability.

Notes

[1]. This total exceeds the number of twin pairs reported for the LTS in the cross-sectional analysis, which included only twins who had an IQ score measured at age 7 or above.

[2]. For these analyses, a sibling pair was double-entered only if both siblings were in the same half of the ability distribution.