The g Factor: The Science of Mental Ability

Arthur R. Jensen, 1998.

CONTENT

Chapter 4: Models and Characteristics of g

Chapter 5: Challenges to g

Chapter 6: Biological Correlates of g

Chapter 7: The Heritability of g

Chapter 8: Information Processing and g

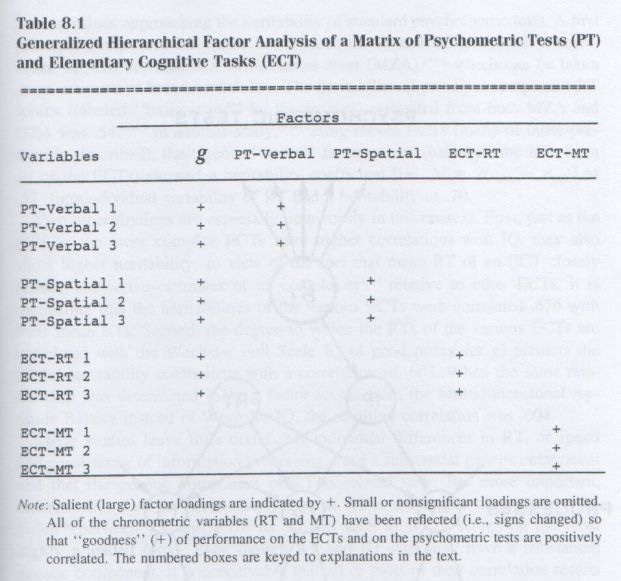

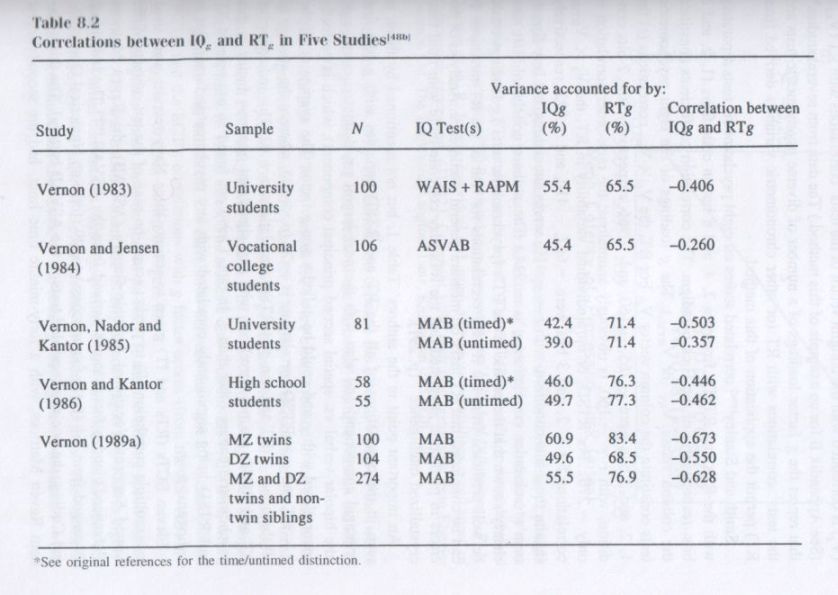

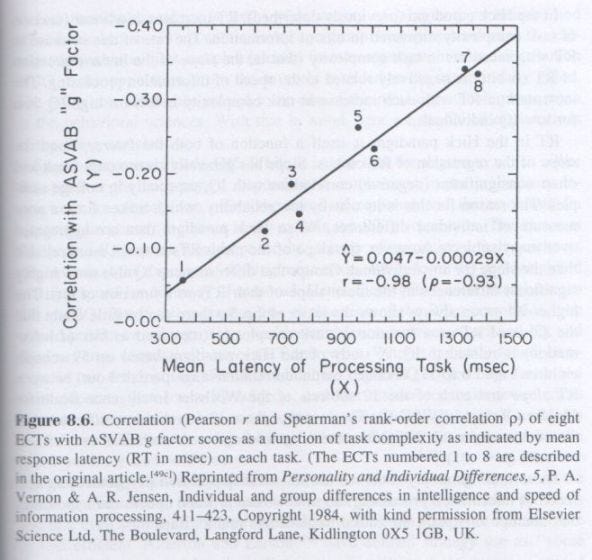

Chapter 9: The Practical Validity of g

Chapter 10: Construct, Vehicles, and Measurements

Chapter 11: Population Differences in g

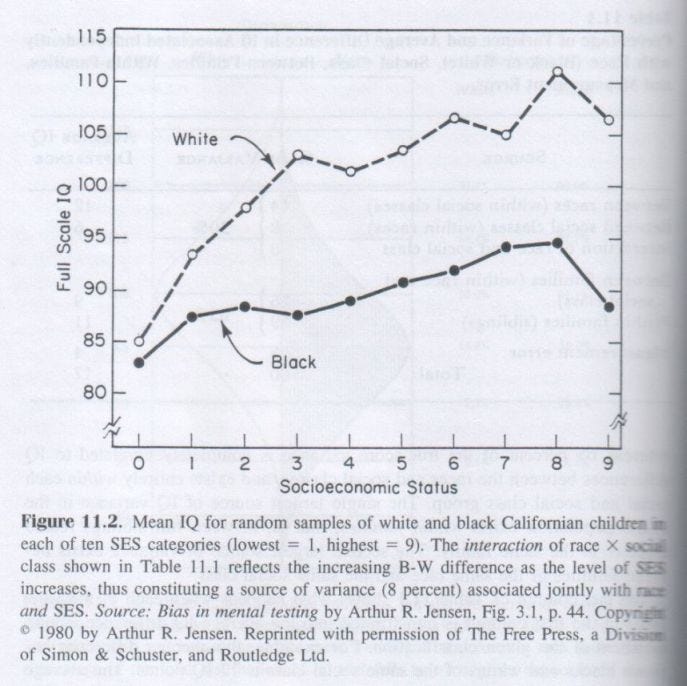

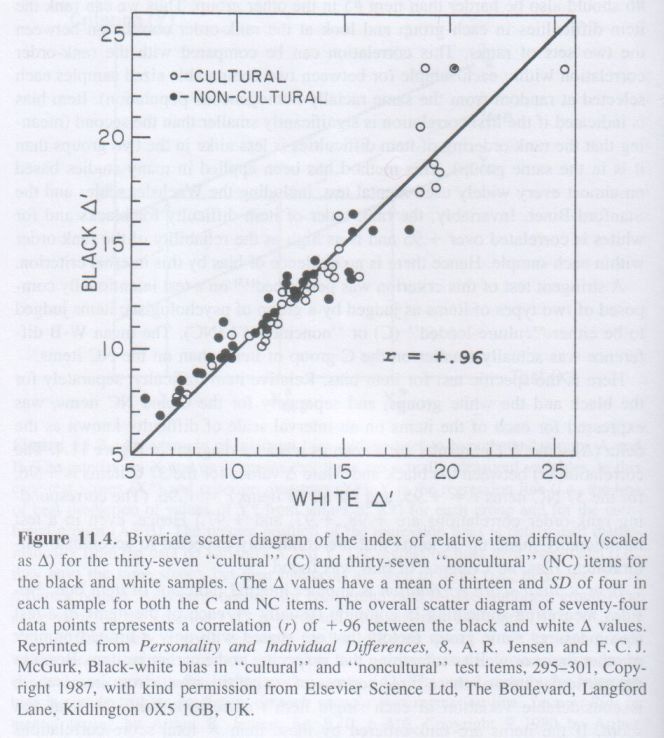

Chapter 12: Causal Hypotheses

Chapter 14: The g Nexus

Chapter 4 : Models and Characteristics of g

STATISTICAL SAMPLING ERROR

The size of g, that is, the proportion of the total variance that g accounts for in any given battery of tests, depends to some extent on the statistical characteristics of the group tested (as compared with a large random sample of the general population). Most factor analytic studies of tests reported in the literature are not based on representative samples of the general population. Rather, subject samples are usually drawn from some segment of the population (often college students or military trainees) that does not display either the mean level of mental ability or the range of mental ability that exists in the total population. Because g is by far the most important ability factor in determining the aggregation of people into such statistically distinguishable groups, the study groups will be more homogeneous in g than in any other ability factors. Hence when the g factor is extracted, it is actually smaller than it would be if extracted from data for the general population. Relative to other factors, g is typically underestimated in most studies. This is especially so in samples drawn from the students at the most selective colleges and universities, where admission is based on such highly g-loaded criteria as superior grades in high school and high scores on scholastic aptitude tests.

Many factor analytic studies have been based on recruits in the military, which is a truncated sample of the population, with the lower 10 percent (i.e., IQs below 80) excluded by congressional mandate. Also, the various branches of the armed services differ in their selection criteria based in part on mental test scores (rejecting the lowest-scoring 10 to 30 percent), with consequently different range restrictions of g.

The samples most representative of the population are the large samples used to standardize most modern IQ tests and the studies of elementary schoolchildren randomly sampled from urban, suburban, and rural schools. Because the dropout rate increases with grade level and is inversely related to IQ, high school students are a somewhat more g-restricted sample of the general population.
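The effect of range restriction described above can be illustrated with a small simulation. This is a minimal sketch under stated assumptions, not a reanalysis of any study: every test is given the same hypothetical g loading of 0.7, selection operates on a hypothetical g-loaded admission score, and the mean correlation among the tests is used as a rough proxy for the size of the general factor. The function names and cutoffs are invented for illustration.

```python
import random
from itertools import combinations
from math import sqrt


def pearson(x, y):
    """Plain Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def simulate(n, n_tests=6, loading=0.7, seed=0):
    """Return (selection_score, test_scores) for n simulated persons.

    Assumption: one general factor, equal loadings, normal noise.
    """
    rng = random.Random(seed)
    people = []
    for _ in range(n):
        g = rng.gauss(0, 1)
        # Hypothetical g-loaded selection criterion (e.g., an entrance test).
        selection = 0.8 * g + 0.6 * rng.gauss(0, 1)
        tests = [loading * g + sqrt(1 - loading ** 2) * rng.gauss(0, 1)
                 for _ in range(n_tests)]
        people.append((selection, tests))
    return people


def mean_intercorrelation(people):
    """Average correlation over all pairs of tests."""
    columns = list(zip(*(tests for _, tests in people)))
    pairs = list(combinations(columns, 2))
    return sum(pearson(a, b) for a, b in pairs) / len(pairs)


def keep_top(people, fraction):
    """Keep the highest-scoring fraction on the selection criterion."""
    ranked = sorted(people, key=lambda p: p[0], reverse=True)
    return ranked[: int(len(ranked) * fraction)]


population = simulate(20000)
full = mean_intercorrelation(population)
recruits = mean_intercorrelation(keep_top(population, 0.90))   # lowest 10% excluded
selective = mean_intercorrelation(keep_top(population, 0.20))  # top 20% only

print(f"full sample: {full:.2f}, lowest 10% dropped: {recruits:.2f}, "
      f"top 20% only: {selective:.2f}")
```

In this toy model the average correlation among the tests shrinks as selection becomes more severe, which is the sense in which g is underestimated in restricted samples.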

A theoretically interesting phenomenon is that g accounts for less of the variance in a battery of tests for the upper half of the population distribution of IQ than for the lower half, even though the upper and lower halves do not differ in the range of test scores or in their variance. [11] The basis of g is that the correlations among a variety of tests are all positive. Since the correlations are smaller, on average, in the upper half than in the lower half of the IQ distribution, it implies that abilities are more highly differentiated in the upper half of the ability distribution. That is, relatively more of the total variance consists of group factors and the tests’ specificity, and relatively less consists of g for the upper half of the IQ distribution than for the lower half. (For a detailed discussion of this phenomenon, see Appendix A.)

Specificity (s) is the least consistent characteristic of tests across different factor analyses, because the amount of specific variance in a test is a function of the number and the variety of the other tests in the factor analysis. Holding constant the number of tests, the specificity of each test increases as the variety of the tests in the battery increases. As variety decreases, or the more that the tests in a battery are made to resemble one another, the variance that would otherwise constitute specificity becomes common factor variance and forms group factors. If the variety of tests in a battery is held constant, specificity decreases as the number of tests in the battery is increased. As similar tests are added, they contribute more to the common factor variance (g + group factors), leaving less residual variance (which includes specificity).

As more and more different tests are included in a battery, each newly added test has a greater chance of sharing the common factor variance, thereby losing some of its specificity. For example, if a battery of tests includes the ubiquitous g and three group factors but includes only one test of short-term memory (e.g., digit span), that test’s variance components will consist only of g plus s plus error. If at least two more tests of short-term memory (say, word span and repetition of sentences) are then added to this battery, the three short-term memory tests will form a group factor. Most of what was the digit span test’s specific variance, when it stood alone in the battery, is now aggregated into a group factor (composed of digit span, word span, and repetition of sentences), leaving little residual specificity in each of these related tests.

Theoretically, the only condition that limits the transformation of specific variance into common factor variance when new tests are added or existing tests are made more alike is the reliability of the individual test scores. When the correlation between any two or more tests is as high as their reliability coefficients will allow (the square root of the product of the tests’ reliability coefficients is the mathematical upper bound), they no longer qualify as separate tests and cannot legitimately be used in the same factor analysis to create another group factor. A group factor created in this manner is considered spurious. But there are also some nonspurious group factors that are so small and inconsistently replicable across different test batteries or different population samples that they are trivial, theoretically and practically.
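The upper bound mentioned above is easy to compute. The sketch below assumes reliability coefficients expressed as proportions between 0 and 1; the function names and the `tolerance` parameter are hypothetical conveniences, not part of any standard procedure.

```python
from math import sqrt


def max_correlation(reliability_x, reliability_y):
    """Upper bound on the observed correlation between two tests:
    the square root of the product of their reliability coefficients."""
    for r in (reliability_x, reliability_y):
        if not 0.0 <= r <= 1.0:
            raise ValueError("reliability must lie between 0 and 1")
    return sqrt(reliability_x * reliability_y)


def is_at_ceiling(observed_r, reliability_x, reliability_y, tolerance=0.02):
    """Flag a pair of tests whose correlation is about as high as their
    reliabilities allow (tolerance is an arbitrary, illustrative margin)."""
    return observed_r >= max_correlation(reliability_x, reliability_y) - tolerance


# Two tests with reliabilities of .90 and .80 cannot correlate above about .85.
bound = max_correlation(0.90, 0.80)
print(round(bound, 3))
```

A pair of tests flagged by `is_at_ceiling` would, on the argument above, be better treated as two forms of one test than as two separate variables in the same factor analysis.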

PSYCHOMETRIC SAMPLING ERROR

How invariant is the g extracted from different collections of tests when the method of factor analysis and the subject sample remain constant? There is no method of factor analysis that can yield exactly the same g when different tests are included in the battery. As John B. Carroll (1993a, p. 596) aptly put it, the g factor is “colored” or “flavored” by its ingredients, which are the tests or primary factors whose variance is dominated by g. The g is always influenced, more or less, by both the nature and the variety of the tests from which it is extracted. If the g extracted from different batteries of tests was not substantially consistent, however, g would have little theoretical or practical importance as a scientific construct. But the fact is that g remains quite invariant across many different collections of tests.

It should be recognized, of course, that in factor analysis, as in every form of measurement in science, either direct or indirect (e.g., through logical inference), there are certain procedural rules that must be followed if valid measures are to be obtained. The accuracy of quantitative analysis in chemistry, for example, depends on using reagents of standardized purity. Similarly, in factor analysis, the extraction of g depends on certain requirements for proper psychometric sampling.

The number of tests is the first consideration. The extraction of g as a second-order factor in a hierarchical analysis requires a minimum of nine tests from which at least three primary factors can be obtained.

That three or more primary factors are called for implies the second requirement: a variety of tests (with respect to their information content, skills, and task demands on a variety of mental operations) is needed to form at least three or more distinct primary factors. In other words, the particular collection of tests used to estimate g should come as close as possible, with some limited number of tests, to being a representative sample of all types of mental tests, and the various kinds of tests should be represented as equally as possible. If a collection of tests appears to be quite limited in variety, or is markedly unbalanced in the varieties it contains, the extracted g is probably contaminated by non-g variance and is therefore a poor representation of true g.

If we factor-analyzed a battery consisting, say, of ten kinds of numerical tests, two tests of verbal reasoning, and one test of spatial reasoning, for example, we would obtain a quite distorted g. The general factor (or nominal g) of this battery would actually consist of g plus some sizable admixture of a numerical ability factor. Therefore, this nominal g would differ considerably from another nominal g obtained from a battery consisting of, say, ten verbal tests, two spatial reasoning tests, and one numerical test. The nominal g of this second battery would really consist of g plus a large admixture of verbal ability.

The problem of contamination is especially significant when one extracts g as the first factor (PF1) in a principal factor analysis. The largest PF1 loadings that emerge are all on tests of the same type, so a marked imbalance in the types of tests entering into the analysis will tend to distort the PF1 as a representation of g. If there are enough tests to permit a proper hierarchical analysis, however, an imbalance in the variety of tests is overcome to a large extent by the aggregation of the overrepresented tests into a single group factor. This factor then carries a weight that is more equivalent to the other group factors (which are based on fewer tests), and it is from the correlations among the group factors that the higher-order g is derived. This is one of the main advantages of a hierarchical analysis.

Ability Variation between Persons and within Persons. It is sometimes claimed that any given person shows such large differences in various abilities that it makes no sense to talk about general ability, or to attempt to represent it by a single score, or to rank persons on it. One student does very well in math, yet has difficulty with English composition; another is just the opposite; a third displays a marked talent for music but is mediocre in English and math. Is this a valid argument against g? It turns out that it is not valid, for if it were true, it would not be possible to demonstrate repeatedly the existence of all-positive correlations among scores on diverse tests of abilities, or to obtain a g factor in a hierarchical factor analysis. At most, there would only be uncorrelated group factors, and one could orthogonally rotate the principal factor axes to virtually perfect simple structure.

A necessary implication of the claim that the levels of different abilities possessed by an individual are so variable as to contradict the idea of general ability is that the differences between various abilities within persons would, on average, be larger than the differences between persons in the overall average of these various abilities. This proposition can be (and has been) definitively tested by means of the statistical method known as the analysis of variance. The method is most easily explained with the following type of “Tests x Persons” matrix.

The matrix shows ten hypothetical tests of any diverse mental abilities (A, B, . . . J) administered to a large number, N, of persons. The test scores have all been standardized (i.e., converted to z scores) so that every test has a mean z = 0 and standard deviation = 1. Therefore, the mean score on every test (i.e., Mean T in the bottom row) is the same (i.e., Mean = 0). Hence there can be only two sources of variance in this whole matrix: (1) the differences between persons’ (P) mean scores on the ten tests (Mean P [z1, z2, z3, etc.] in last column), and (2) the differences between test scores within each person (e.g., the zA1, zB1, zC1, etc., are the z scores on Tests A, B, C, etc., for Person 1). Now, if the average variance within persons proves to be as large as or larger than the variance between persons, one could say there is no overall general level of ability, or g, in which people differ. That is, differences in the level of various abilities within a person are as large or larger, on average, than differences between persons in the overall average level of these various abilities. In fact, just the opposite is empirically true: Differences in the average level of abilities between persons are very significantly greater than differences in various abilities within persons.
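One conventional way to carry out such a comparison is a Persons x Tests analysis of variance without replication, in which the mean square between persons is tested against the residual (within-person) mean square. The excerpt does not spell out the computation, so the sketch below is an assumption about the setup, run on simulated z scores with a hypothetical common loading rather than on real data.

```python
import random
from math import sqrt


def simulate_z_scores(n_persons=2000, n_tests=10, loading=0.6, seed=1):
    """Simulate a Persons x Tests matrix and standardize each test (column)."""
    rng = random.Random(seed)
    raw = []
    for _ in range(n_persons):
        g = rng.gauss(0, 1)
        raw.append([loading * g + sqrt(1 - loading ** 2) * rng.gauss(0, 1)
                    for _ in range(n_tests)])
    columns = []
    for col in zip(*raw):
        m = sum(col) / len(col)
        s = sqrt(sum((x - m) ** 2 for x in col) / len(col))
        columns.append([(x - m) / s for x in col])  # mean 0, SD 1 per test
    return [list(row) for row in zip(*columns)]


def persons_by_tests_anova(z):
    """Return (MS between persons, MS within persons, F ratio)."""
    n, k = len(z), len(z[0])
    grand = sum(x for row in z for x in row) / (n * k)
    person_means = [sum(row) / k for row in z]
    test_means = [sum(col) / n for col in zip(*z)]  # all ~0 after standardizing
    ss_total = sum((x - grand) ** 2 for row in z for x in row)
    ss_persons = k * sum((p - grand) ** 2 for p in person_means)
    ss_tests = n * sum((t - grand) ** 2 for t in test_means)
    ss_residual = ss_total - ss_persons - ss_tests
    ms_between = ss_persons / (n - 1)
    ms_within = ss_residual / ((n - 1) * (k - 1))
    return ms_between, ms_within, ms_between / ms_within


# With a general factor: between-person mean square far exceeds the residual.
ms_b, ms_w, f_with_g = persons_by_tests_anova(simulate_z_scores(loading=0.6))

# With no general factor (loading 0): the two mean squares are about equal.
_, _, f_without_g = persons_by_tests_anova(simulate_z_scores(loading=0.0))

print(f"F with g: {f_with_g:.1f}, F without g: {f_without_g:.2f}")
```

When the simulated tests share no common factor the F ratio stays near 1, which is the outcome the "no general ability" claim would require; positive correlations among the tests push it well above 1.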

It should be remembered that g and all other factors derived from factor analysis depend essentially on variance between persons. Traits in which there is very little or no variance do not show up in a factor analysis. A small range of variation in the measurements subjected to factor analysis may result in fewer and smaller factors. A factor analysis performed on the fairly similar body measurements of all the Miss Universe contestants (or of heavyweight boxers), for example, would yield fewer and much smaller factors than the same analysis performed on persons randomly selected from the general population.

Chapter 5 : Challenges to g

VERBAL ARGUMENTS

THE SPECIFICITY DOCTRINE

If the only source of individual differences is past learning, it is hard to explain why individual differences in a variety of tasks that are so novel to all of the subjects as to scarcely reflect the transfer of training from prior learned skills or problem-solving strategies are still highly correlated. Transfer from prior learning is quite task-specific. It is well known, for example, that memory span for digits (i.e., repeating a string of n random digits after hearing them spoken at a rate of one digit per second) has a moderate correlation with IQ. It also has a high correlation with memory span for random consonant letters presented in the same way. The average memory span in the adult population is about seven digits, or seven consonant letters. (The inclusion of vowels permits the grouping of letters into pronounceable syllables, which lengthens the memory span.) Experiments have been performed in which persons are given prolonged daily practice in digit span memory over a period of several months. Digit span memory increases remarkably with practice; some persons eventually become able to repeat even 70 to 100 digits without error after a single presentation. [3] But this developed skill shows no transfer effect on IQ, provided the IQ test does not include digit span. But what is even more surprising is that there is no transfer to letter span memory. Persons who could repeat a string of seven letters before engaging in practice that raised their digit span from seven to 70 or more digits still have a letter span of about seven letters. Obviously, practicing one kind of task does not affect any general memory capacity, much less g.

What would happen to the g loadings of a battery of cognitive tasks if they were factor analyzed both before and after subjects had been given prolonged practice that markedly improved their performance on all tasks of the same kind? I know of only one study like this, involving a battery of cognitive and perceptual-motor skill tasks. [4] Measures of task performance taken at intervals during the course of practice showed that the tasks gradually lost much of their g loading as practice continued, and the rank order of the tasks' pre- and post-practice g loadings became quite different. Most striking was that each task's specificity markedly increased. Thus it appears that what can be trained up is not the g factor common to all tasks, but rather each task's specificity, which reflects individual differences in the specific behavior that is peculiar to each task. By definition a given task's specificity lacks the power to predict performance significantly on any other tasks except those that are very close to the given task on the transfer gradient.

The meager success of skills training designed for persons scoring below average on typical g-loaded tests illustrates the limited gain in job competence that can be obtained when specific skills are trained up, leaving g unaffected. In the early 1980s, for example, the Army Basic Skills Education Program was spending some $40 million per year to train up basic skills for the 10 percent of enlisted men who scored below the ninth-grade level on tests of reading and math, with up to 240 hours of instruction lasting up to three months. The program was motivated by the finding that recruits who score well on tests of these skills learn and perform better than low scorers in many army jobs of a technical nature. An investigation of the program's outcomes by the U.S. General Accounting Office (G.A.O.), however, discovered very low success rates. [...]

... Is there any principle of learning or transfer that would explain or predict the high correlations between such dissimilar tasks as verbal analogies, number series, and block designs? Could it explain or predict the correlation between pitch discrimination ability and visual perceptual speed, or the fact that they both are correlated with each of the three tests mentioned above?

SAMPLING THEORIES OF THORNDIKE AND THOMSON

[...] More complex tests are more highly correlated and have larger g loadings than less complex tests. This is what one would predict from the sampling theory: a complex test involves more neural elements and would therefore have a greater probability of involving more elements that are common to other tests.

But there are other facts the overlapping elements theory cannot adequately explain. One such question is why a small number of certain kinds of nonverbal tests with minimal informational content, such as the Raven matrices, tend to have the highest g loadings, and why they correlate so highly with content-loaded tests such as vocabulary, which surely would seem to tap a largely different pool of neural elements. Another puzzle in terms of sampling theory is that tests such as forward and backward digit span memory, which must tap many common elements, are not as highly correlated as are, for instance, vocabulary and block designs, which would seem to have few elements in common. Of course, one could argue trivially in a circular fashion that a higher correlation means more elements in common, even though the theory can’t tell us why seemingly very different tests have many elements in common and seemingly similar tests have relatively few.

Even harder to explain in terms of the sampling theory is the finding that individual differences on a visual scan task (i.e., speed of scanning a set of digits for the presence or absence of a “target” digit), which makes virtually no demand on memory, and a memory scan test (i.e., speed of scanning a set of digits held in memory for the presence or absence of a “target” digit) are perfectly correlated, even though they certainly involve different neural processes. [21] And how would sampling theory explain the finding that choice reaction time is more highly correlated with scores on a nonspeeded vocabulary test than with scores on a test of clerical checking speed? Another apparent stumbling block for sampling theory is the correlation between neural conduction velocity (NCV) in a low-level brain tract (from retina to primary visual cortex) and scores on a complex nonverbal reasoning test (Raven), even though the higher brain centers that are engaged in the complex reasoning ability demanded by the Raven do not involve the visual tract.

Perhaps the most problematic test of overlapping neural elements posited by the sampling theory would be to find two (or more) abilities, say, A and B, that are highly correlated in the general population, and then find some individuals in whom ability A is severely impaired without there being any impairment of ability B. For example, looking back at Figure 5.2, which illustrates sampling theory, we see a large area of overlap between the elements in Test A and the elements in Test B. But if many of the elements in A are eliminated, some of its elements that are shared with the correlated Test B will also be eliminated, and so performance on Test B (and also on Test C in this diagram) will be diminished accordingly. Yet it has been noted that there are cases of extreme impairment in a particular ability due to brain damage, or sensory deprivation due to blindness or deafness, or a failure in development of a certain ability due to certain chromosomal anomalies, without any sign of a corresponding deficit in other highly correlated abilities. [22] On this point, behavioral geneticists Willerman and Bailey comment: “Correlations between phenotypically different mental tests may arise, not because of any causal connection among the mental elements required for correct solutions or because of the physical sharing of neural tissue, but because each test in part requires the same ‘qualities’ of brain for successful performance. For example, the efficiency of neural conduction or the extent of neuronal arborization may be correlated in different parts of the brain because of a similar epigenetic matrix, not because of concurrent functional overlap.” [22] A simple analogy to this would be two independent electric motors (analogous to specific brain functions) that perform different functions both running off the same battery (analogous to g). As the battery runs down, both motors slow down at the same rate in performing their functions, which are thus perfectly correlated although the motors themselves have no parts in common. But a malfunction of one machine would have no effect on the other machine, although a sampling theory would have predicted impaired performance for both machines.

GARDNER’S SEVEN “FRAMES OF MIND” AND MENTAL MODULES

In fact, it is hard to justify calling all of the abilities in Gardner’s system by the same term: “intelligences.” If Gardner claims that the various abilities he refers to as “intelligences” are unrelated to one another (which has not been empirically demonstrated), what does it add to our knowledge to label them all “intelligences”? … Bobby Fischer, then, could be claimed as one of the world’s greatest athletes, and many sedentary chess players might be made to feel good by being called athletes. But who would believe it? The skill involved in chess isn’t the kind of thing that most people think of as athletic ability, nor would it have any communality if it were entered into a factor analysis of typical athletic skills. [...]

Modular Abilities. Gardner invokes recent neurological research on brain modules in support of his theory. [35] But there is nothing at all in this research that conflicts in the least with the findings of factor analysis. It has long been certain that the factor structure of abilities is not unitary, because factor analysis applied to the correlations among any large and diverse battery of ability tests reveals that a number of factors (although fewer than the number of different tests) must be extracted to account for most of the variance in all of the tests. The g factor, which is needed theoretically to account for the positive correlations between all tests, is necessarily unitary only within the domain of factor analysis. But the brain mechanisms or processes responsible for the fact that individual differences in a variety of abilities are positively correlated, giving rise to g, need not be unitary. [...]

Some of the highly correlated abilities identified as factors probably represent what are referred to as modules. But here is the crux of the main confusion, which results when one fails to realize that in discussing the modularity of mental abilities we make a transition from talking about individual differences and factors to talking about the localized brain processes connected with various kinds of abilities. Some modules may be reflected in the primary factors; but there are other modules that do not show up as factors, such as the ability to acquire language, quick recognition memory for human faces, and three-dimensional space perception, because individual differences among normal persons are too slight for these virtually universal abilities to emerge as factors, or sources of variance. This makes them no less real or important. Modules are distinct, innate brain structures that have developed in the course of human evolution. They are especially characterized by the various ways that information or knowledge is represented by the neural activity of the brain. The main modules thus are linguistic (verbal/auditory/lexical/semantic), visuospatial, object recognition, numerical-mathematical, musical, and kinesthetic.

Although modules generally exist in all normal persons, they are most strikingly highlighted in two classes of persons: (a) those with highly localized brain lesions or pathology, and (b) idiots savants. Savants evince striking discrepancies between amazing proficiency in a particular narrow ability and nearly all other abilities, often showing an overall low level of general ability. Thus we see some savants who are even too mentally retarded to take care of themselves, yet who can perform feats of mental calculation, or play the piano by ear, or memorize pages of a telephone directory, or draw objects from memory with photographic accuracy. The modularity of these abilities is evinced by the fact that rarely, if ever, is more than one of them seen in a given savant.

In contrast, there are persons whose tested general level of ability is within the normal range, yet who, because of a localized brain lesion, show a severe deficiency in some particular ability, such as face recognition, receptive or expressive language dysfunctions (aphasia), or inability to form long-term memories of events. Again, modularity is evidenced by the fact that these functional deficiencies are quite isolated from the person’s total repertoire of abilities. Even in persons with a normally intact brain, a module’s efficiency can be narrowly enhanced through extensive experience and practice in the particular domain served by the module.

Such observations have led some researchers to the mistaken notion that they contradict the discovery of factor analysis that, in the general population, individual differences in mental abilities are all positively and hierarchically correlated, making for a number of distinct factors and a higher-order general factor, or g. The presence of a general factor indicates that the workings of the various modules, though distinct in their functions, are all affected to some degree by some brain characteristic(s), such as chemical neurotransmitters, neural conduction velocity, amount of dendritic branching, and degree of myelination of axons, in which there are individual differences. Hence individual differences in the specialized mental activities associated with different modules are correlated.

A simple analogy might help to explain the theoretical compatibility between the positive correlations among all mental abilities and the existence of modularity in mental abilities. Imagine a dozen factories (“persons”), each of which manufactures the same five different gadgets (“modular abilities”). Each gadget is produced by a different machine (“module”). The five machines are all connected to each other by a gear chain that is powered by one motor. But each of the factories uses a different motor to drive the gear chain, and each factory’s motor runs at a constant speed different from the speed of the motors in any other factory. This will cause the factories to differ in their rates of output of the five gadgets (“scores on five different tests”). The factories will be said to differ in overall efficiency or capacity, because the rates of output of the five gadgets are positively correlated. If the correlations between output rates of the gadgets produced by all the factories were factor analyzed, they would yield a large general factor. Gadgets’ output rates may not be perfectly correlated, however, because the sales demand for each gadget differs across factories, and the machines that produce the gadgets with the larger sales are better serviced, better oiled, and kept in consistently better operating condition than the machines that make low-demand gadgets. Therefore, even though the five machines are all driven by the same motor, they differ somewhat in their efficiency and consistency of operation, making for less than a perfect correlation between the rates of output. Now imagine that in one factory the main drive shaft of one of the machines breaks, and it cannot produce its gadget at all (analogous to localized brain damage affecting a single module, but not g). In another factory, four of the machines break down and fail to produce gadgets, but one machine is very well maintained, so it continues to run and puts out gadgets at a rate commensurate with the speed of the motor that powers the gear chain that runs the machine (analogous to an idiot savant).
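The factory analogy can be turned into a toy simulation. Everything numerical here is an assumption made for illustration: the number of factories is raised well above a dozen so that the correlations are stable, motor speeds and machine upkeep are drawn from arbitrary uniform ranges, and output is simply motor speed multiplied by an upkeep factor.

```python
import random
from itertools import combinations
from math import sqrt


def pearson(x, y):
    """Plain Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def build_factories(n_factories=500, n_machines=5, seed=2):
    """Each row holds one factory's output rates for its five gadgets."""
    rng = random.Random(seed)
    factories = []
    for _ in range(n_factories):
        motor_speed = rng.uniform(50, 150)  # shared by every machine in the factory
        upkeep = [rng.uniform(0.7, 1.0) for _ in range(n_machines)]  # machine-specific
        factories.append([motor_speed * u for u in upkeep])
    return factories


def gadget_correlations(factories):
    """Correlations between output rates for every pair of gadgets."""
    columns = list(zip(*factories))
    return [pearson(a, b) for a, b in combinations(columns, 2)]


factories = build_factories()
correlations = gadget_correlations(factories)

# "Localized damage": one machine in one factory stops; the others are untouched.
damaged = [row[:] for row in factories]
damaged[0][2] = 0.0

print(f"lowest pairwise correlation: {min(correlations):.2f}, "
      f"highest: {max(correlations):.2f}")
```

All pairwise correlations come out positive but below 1, because the shared motor drives every machine while upkeep varies machine by machine, and zeroing one machine's output leaves the rest of that factory's row unchanged.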

Chapter 6 : Biological Correlates of g

SPECIFIC BIOLOGICAL CORRELATES OF IQ AND g

Body Size (Extrinsic). It is now well established that both height and weight are correlated with IQ. When age is controlled, the correlations in different studies range mostly between +.10 and +.30, and average about +.20. Studies based on siblings find no significant within-family correlation, and gifted children (who are taller than their age mates in the general population) are not taller than their nongifted siblings.

Because both height and IQ are highly heritable, the between-families correlation of stature and IQ probably represents a simple genetic correlation resulting from cross-assortative mating for the two traits. Both height and “intelligence” are highly valued in Western culture and it is known that there is substantial assortative mating for each trait.

There is also evidence of cross-assortative mating for height and IQ; there is some trade-off between them in mate selection. When short and tall women are matched on IQ, educational level, and social class of origin, for example, it is found that the taller women tend to marry men of higher intelligence (reasonably inferred from their higher educational and occupational status) than do shorter women. Leg length relative to overall height is regarded as an important factor in judging feminine beauty in Western culture, and it is interesting that the height x IQ correlation is largely attributable to the leg-length component of height. Sitting height is much less correlated with IQ. If there is any intrinsic component of the height x IQ correlation, it is too small to be detected at a significant level even in quite large samples. The two largest studies, [8] totaling some 16,000 sibling pairs, did not find significant within-family correlations of IQ with either height or weight (controlling for age) in males or females or in blacks or whites.

Head Size and Brain Size (Intrinsic). There is a great deal of evidence that external measurements of head size are significantly correlated with IQ and other highly g-loaded tests, although the correlation is quite small, in most studies ranging between +.10 and +.25, with a mean r ≈ +.15. The only study using g factor scores showed a correlation of +.30 with a composite measure of head size based on head length, width, and circumference, in a sample of 286 adolescents. [9] Therefore, it appears that head size is mainly correlated with the g component of psychometric scores. The method of correlated vectors applied to the same sample of 286 adolescents showed a highly significant rs = +.64 between the g vector of seventeen diverse tests and the vector of the tests’ correlations with head size. The head-size vector had nonsignificant correlations with the vectors of the spatial, verbal, and memory factors of +.27, .00, and +.05, respectively.
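The method of correlated vectors mentioned above can be sketched in a few lines. The sketch below assumes one has, for each test in a battery, its g loading and its correlation with some external variable (here, head size); it then correlates the two columns, reporting both the Pearson r and the Spearman rank-order rs. The six pairs of values are hypothetical and are not taken from the study cited; the function names are likewise only illustrative.

```python
from math import sqrt

def pearson(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

def ranks(values):
    """1-based ranks; tied values share the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = mean_rank
        i = j + 1
    return result

def spearman(x, y):
    """Spearman rank-order correlation: Pearson r computed on the ranks."""
    return pearson(ranks(x), ranks(y))

# Hypothetical values for six tests (NOT data from the cited study).
g_loadings = [0.80, 0.72, 0.65, 0.58, 0.50, 0.41]
r_with_head_size = [0.20, 0.17, 0.18, 0.12, 0.10, 0.08]

r = pearson(g_loadings, r_with_head_size)
rs = spearman(g_loadings, r_with_head_size)
print(f"r = {r:+.2f}, rs = {rs:+.2f}")
```

The unit of analysis here is the test, not the person: with seventeen tests, each vector has seventeen entries, so the significance of rs is judged against that small number of tests.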

In these studies, of course, head size is used as merely a crude proxy for brain size. The external measurement of head size is in fact a considerably attenuated proxy for brain size.

The correlation between the best measures of external head size and actual brain size as directly measured in autopsy is far from perfect, being around +.50 to +.60 in adults and slightly higher in children. There are specially devised formulas by which one can estimate internal cranial capacity (in cubic centimeters) from external head measurements with a fair degree of accuracy. These formulas have been used along with various statistical corrections for age, body size (height, weight, total surface area), and sex to estimate the correlation between IQ and brain size from data on external head size. The typical result is a correlation of about .30.

These indirect methods, however, are no longer necessary, since the technology of magnetic resonance imaging (MRI) now makes it possible to obtain a three-dimensional picture of the brain of a living person. A highly accurate measure of total brain volume (or the volume of any particular structure in the brain) can be obtained from the MRI pictures. Such quantitative data are now usually extracted from the MRI pictures by computer.

To date there are eight MRI studies [10] of the correlation between total brain volume and IQ in healthy children and young adults. In every study the correlations are significant and close to +.40 after removing variance due to differences in body size. (The correlation between body size and brain size in adult humans is between +.20 and +.25.) Large parts of the brain do not subserve cognitive processes, but govern sensory and motor functions, emotions, and autonomic regulation of physiological activity. Controlling body size removes to some extent the sensorimotor aspects of brain size from the correlation of overall brain size with IQ. But controlling body size in the brain X IQ correlation is somewhat problematic, because there may be some truly functional relationship between brain size and body size that includes the brain’s cognitive functions. Therefore, controlling body size in the IQ X brain size correlation may be too conservative; it could result in overcorrecting the correlation. Moreover, the height and weight of the head constitute an appreciable proportion of the total body height and weight, so that controlling total body size could also contribute to overcorrection by removing some part of the variance in head and brain size along with variance in general body size.

Two of the MRI studies used a battery of diverse cognitive tests, which permitted the use of correlated vectors to determine the relationship between the column vector of the various tests’ g factor loadings and the column vector of the tests’ correlations with total brain volume. In one study, [10f] based on twenty cognitive tests given to forty adult male sibling pairs, these vectors were correlated +.65. In the other study, [10g] based on eleven diverse cognitive tests, the vector of the tests’ g loadings was correlated +.51 with the vector of the tests’ correlations with total brain volume and +.66 with the vector of the tests’ correlations with the volume of the brain’s cortical gray matter. In these studies, all of the variables entering into the analyses were the averages of sibling pairs, which has the effect of increasing the reliability of the measurements. Therefore, these analyses are between-families. A problematic aspect of both studies is that there were no significant within-family correlations between test scores and brain volumes, which implies that there is no intrinsic relationship between brain size and g. To conclude that the within-family correlation in the population is zero, however, has a high risk of being a Type II error, given the unreliability of sibling difference scores (on which within-family correlations are based) and the small number of subjects used in these studies. Much larger studies based merely on external head size show significant within-family correlations with IQ. Clearly, further MRI studies are needed for a definitive answer on this critical issue.

Metabolically, the human brain is by far the most “expensive” organ in the whole body, and the body may have evolved to serve in part like a “power pack” for the brain, with a genetically larger brain being accommodated by a larger body. It has been determined experimentally, for example, that strains of rats that were selectively bred from a common stock exclusively to be either good or poor at maze learning were found to differ not only in brain size but also in body size. [11] Body size increased only about one-third as much as brain size as a result of the rats being selectively bred exclusively for good or poor maze-learning ability. There was, of course, no explicit selection for either brain size or body size, but only for maze-learning ability. Obviously, there is some intrinsic functional and genetic relationship between learning ability, brain size, and body size, at least in laboratory rats. Although it would be unwarranted to generalize this finding to humans, it does suggest the hypothesis that a similar relationship may exist in humans. It is known that body size has increased along with brain size in the course of human evolution. The observed correlations between brain size, body size, and mental ability in humans are consistent with these facts, but the nature and direction of the causal connections between these variables cannot be inferred without other kinds of evidence that is not yet available.

The IQ X head-size correlation is clearly intrinsic, as shown by significant correlations both between-families (r = +.20, p < .001) and within-families (r = +.11, p < .05) in a large sample of seven-year-old children, with head size measured only by circumference and IQ measured by the Wechsler Intelligence Scale for Children. [12] (Age, height, and weight were statistically controlled.) The same children at four years of age showed no significant correlation of head size with Stanford-Binet IQ, and in fact the WF correlation was even negative (-.04). This suggests that the correlation of IQ with head size (and, by inference, brain size) is a developmental phenomenon, increasing with age during childhood.

One of the unsolved mysteries regarding the relation of brain size to IQ is the seeming paradox that there is a considerable sex difference in brain size (the adult female brain being about 100 cm³ smaller than the male) without there being a corresponding sex difference in IQ. [13] It has been argued that some IQ tests have purposely eliminated items that discriminate between the sexes or have balanced-out sex differences in items or subtests. This is not true, however, for many tests such as Raven’s matrices, which is almost a pure measure of g, yet shows no consistent or significant sex difference. Also, the differing g loadings of the subscales of the Wechsler Intelligence Test are not correlated with the size of the sex difference on the various subtests. [14] The correlation between brain size and IQ is virtually the same for both sexes.

The explanation for the well-established mean sex difference in brain size is still somewhat uncertain, although one hypothesis has been empirically tested, with positive results. Properly controlling (by regression) the sex difference in body size diminishes, but by no means eliminates, the sex difference in brain size. Three plausible hypotheses have been proposed to explain the difference (of about 8 percent) in average brain size between the sexes despite there being no sex difference in g:

1. Possible sexual dimorphism in neural circuitry or in overall neural conduction velocity could cause the female brain to process information more efficiently.

2. The brain size difference could be due to the one ability factor, independent of g, that unequivocally shows a large sex difference, namely, spatial visualization ability, in which only 25 percent of females exceed the male median. Spatial ability could well depend upon a large number of neurons, and males may have more of these “spatial ability” neurons than females, thereby increasing the volume of the male brain.

3. Females have the same amount of functional neural tissue as males but there is a greater “packing density” of the neurons in the female brain. While the two previous hypotheses remain purely speculative at present, there is recent direct evidence for a sex difference in the “packing density” of neurons. [15] In the cortical regions most directly related to cognitive ability, the autopsied brains of adult women possessed, on average, about 11 percent more neurons per unit volume than were found in the brains of adult men. The males and females were virtually equated on Wechsler Full Scale IQ (112.3 and 110.6, respectively). The male brains were about 12.5 percent heavier than the female brains. Hence the greater neuronal packing density in the female brain nearly balances the larger size of the male brain. Of course, further studies based on histological, MRI, and PET techniques will be needed to establish the packing density hypothesis as the definitive explanation for the seeming paradox of the two sexes differing in brain size but not differing in IQ despite a correlation of about +.40 between these variables within each sex group.
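The sense in which the packing-density difference "nearly balances" the size difference in the third hypothesis can be checked with simple arithmetic. The sketch below uses only the two percentages quoted above. It assumes, purely for illustration, that brain weight is proportional to brain volume and that the density difference found in the sampled cortical regions applies uniformly; neither assumption is made in the text.

```python
# Figures quoted in the text. Treating weight as proportional to volume and
# applying the cortical density difference uniformly are simplifying
# assumptions made only for this illustration.
female_density_ratio = 1.11   # female neurons per unit volume, relative to male
male_size_ratio = 1.125       # male brain weight, relative to female

# Relative neuron count = density x volume, with male density and
# female volume each set to 1.0 as the reference.
female_neurons = female_density_ratio * 1.0
male_neurons = 1.0 * male_size_ratio

ratio = female_neurons / male_neurons
print(f"female/male neuron-count ratio = {ratio:.3f}")
```

Under these assumptions the ratio comes out just under 1, that is, the two effects offset each other to within about 1 or 2 percent.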

Cerebral Glucose Metabolism. The brain’s main source of energy is glucose, a simple sugar. Its rate of uptake and subsequent metabolism by different regions of the brain can serve as an indicator of the degree of neural energy expended in various locations of the brain during various kinds of mental activity. This technique consists of injecting a radioactive isotope of glucose (F-18 deoxyglucose) into a person’s bloodstream, then having the person engage in some mental activity (such as taking an IQ test) for about half an hour, during which the radioactive glucose is metabolized by the brain. The isotope acts as a radioactive tracer of the brain’s neural activity.

Immediately following the uptake period in which the person was engaged in some standardized cognitive task, the gamma rays emitted by the isotope from the nerve cells in the cerebral cortex can be detected and recorded by means of a brain-scanning technique called positron emission tomography (or PET scan). The PET scan provides a picture, or map, of the specific cortical location and the amount of neural metabolism (of radioactive glucose) that occurred during an immediately preceding period of mental activity.

Richard J. Haier, a leading researcher in this field, has written a comprehensive review [26a] of the use of the PET scan for studying the physiological basis of individual differences in mental ability. The main findings can be summarized briefly. Normal adults have taken the Raven Advanced Progressive Matrices (RAPM) shortly after they were injected with radioactive glucose. The RAPM, a nonverbal test of reasoning ability, is highly g loaded and contains little, if any, other common factor variance. The amount of glucose metabolized during the thirty-five-minute testing period is significantly and inversely related to scores on the RAPM, with negative correlations between -.7 and -.8. In solving RAPM problems of a given level of difficulty, the higher-scoring subjects use less brain energy than the lower-scoring subjects, as indicated by the amount of glucose uptake. Therefore, it appears that g is related to the efficiency of the neural activity involved in information processing and problem solving. Negative correlations between RAPM scores and glucose utilization are found in every region of the cerebral cortex, but are highest in the temporal regions, both left and right.

The method of correlated vectors shows that g is specifically related to the total brain’s glucose metabolic rate (GMR) while engaged in a mental activity over a period of time. In one of Haier’s studies, [26b] the total brain’s GMR was measured immediately after subjects had taken each of the eleven subtests of the Wechsler Adult Intelligence Scale-Revised (WAIS-R), and the GMR was correlated with scores on each of the subtests. The vector of these correlations was correlated r = -.79 (rs = -.66, p < .05) with the corresponding vector of the subtests’ g loadings (based on the national standardization sample).

A phenomenon that might be called “the conservation of g” and has been only casually observed in earlier research, but has not yet been rigorously established by experimental studies, is at least consistent with the findings of a clever PET-scan study by Haier and co-workers. The “conservation of g” refers to the phenomenon that as people become more proficient in performing certain complex mental tasks through repeated practice on tasks of the same type, the tasks become automatized, less g demanding, and consequently less g loaded. Although there remain individual differences in proficiency on the tasks after extensive practice, individual differences in performance may reflect less g and more task-specific factors. Something like this was observed in a study [26b] in which subjects’ PET scans were obtained after their first experience with a video game (Tetris) that calls for rapid and complex information processing, visual spatial ability, strategy learning, and motor coordination. Initially, playing the Tetris game used a relatively large amount of glucose. Daily practice on the video game for 30 to 45 minutes over the course of 30 to 60 days, however, showed greatly increasing proficiency in playing the game, accompanied by a decreasing uptake of glucose and a marked decrease in the correlation of the total brain glucose metabolic rate with g. In other words, the specialized brain activity involved in more proficient Tetris performance consumed less energy. Significantly, the rate of change in glucose uptake over the course of practice is positively correlated with RAPM scores. The performance of high-g subjects improved more from practice and they also gained greater neural metabolic efficiency during Tetris performance than subjects who were lower in g, as indexed by both the RAPM test and the Wechsler Adult Intelligence Scale.

Developmental PET scan studies in individuals from early childhood to maturity show decreasing utilization of glucose in all areas of the brain as individuals mature. In other words, the brain’s glucose uptake curve is inversely related to the negatively accelerated curve of mental age, from early childhood to maturity. The increase in the brain’s metabolic efficiency seems to be related to “neural pruning,” the normal spontaneous decrease in synaptic density. The spontaneous decrease is greatest during the first several years of life. “Neural pruning” apparently results in greater efficiency of the brain’s capacity for information processing. Paradoxical as it may seem, an insufficient loss of neurons during early maturation is associated with some types of mental retardation.

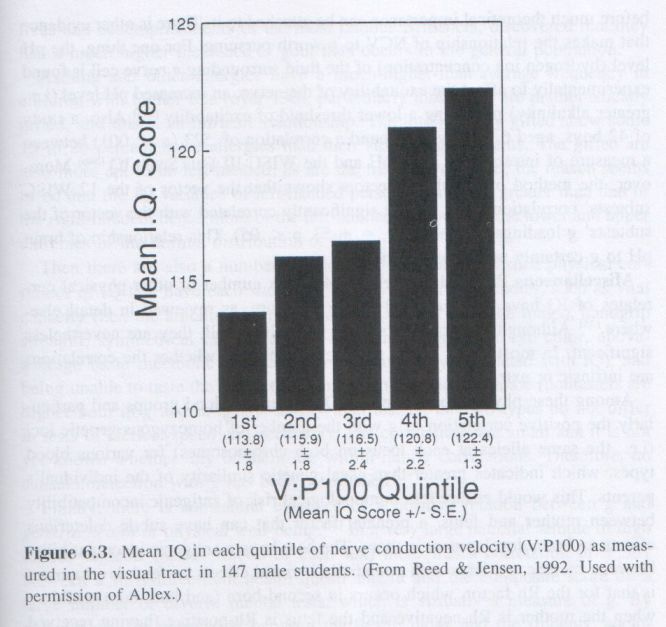

Brain Nerve Conduction Velocity. Reed and Jensen (1992) measured nerve conduction velocity (NCV) in the primary visual tract between the retina of the eye and the visual cortex in 147 college males and found a significant correlation of +.26 (p = .002) between NCV and Raven IQ. (The correlation is +.37 after correction for restriction of range of IQ in this sample of college students.) When the sample is divided into quintiles (five equal-sized groups) on the basis of the average velocity of the P100 visual evoked potential (V:P100), the average IQ in each quintile increases as a function of the V:P100 as shown in Figure 6.3.
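The text does not say which formula was used to correct the +.26 correlation for restriction of range. A common choice is Thorndike's Case II correction for direct selection, which needs only the observed correlation and the ratio u of the unrestricted to the restricted standard deviation of IQ. The sketch below assumes that formula and works backward to the value of u that would carry +.26 to +.37; the function names are illustrative, and the implied u is a derived quantity, not a figure reported in the study.

```python
from math import sqrt

def correct_for_range_restriction(r, u):
    """Thorndike Case II correction for direct restriction of range.

    r: correlation observed in the restricted sample
    u: SD of the selection variable in the unrestricted population
       divided by its SD in the restricted sample
    """
    return r * u / sqrt(1 + r * r * (u * u - 1))

def implied_sd_ratio(r, r_corrected):
    """SD ratio u that turns r into r_corrected under the same formula."""
    return sqrt(r_corrected**2 * (1 - r**2) / (r**2 * (1 - r_corrected**2)))

r_observed = 0.26   # reported correlation in the college sample
r_reported = 0.37   # reported value after correction

u = implied_sd_ratio(r_observed, r_reported)
print(f"implied SD ratio u = {u:.2f}")
print(f"check: corrected r = {correct_for_range_restriction(r_observed, u):.2f}")
```

Under this assumed formula, the reported correction corresponds to a college sample whose IQ standard deviation is roughly two-thirds of the population value.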

A theoretically important aspect of this finding is that the NCV (i.e., V:P100) is measured in a brain tract that is not a part of the higher brain centers involved in the complex problem solving required by the Raven test, and the P100 visual evoked potential occurs, on average, about 100 milliseconds after the visual stimulus, which is less than the time needed for conscious awareness of the stimulus. This means that although the cortical NCV involved in Raven performance may be correlated with the NCV in the subcortical visual tract, the same neural elements are not involved. This contradicts Thomson’s sampling theory of g, which states that tests are correlated to the extent that they utilize the same neural elements. But here we have a correlation between the P100 visual evoked potential and scores on the Raven matrices that cannot be explained in terms of their overlapping neural elements. In the same subject sample, Reed and Jensen found that although NCV and choice reaction time (CRT) are both significantly correlated with IQ, they are not significantly correlated with each other. [33] This suggests two largely independent processes contributing to g, one linked to NCV and one linked to CRT. As this puzzling finding is based on a single study, albeit with a large sample, it needs to be replicated before much theoretical importance can be attached to it.

There is other evidence that makes the relationship of NCV to g worth pursuing. For one thing, the pH level (hydrogen ion concentration) of the fluid surrounding a nerve cell is found experimentally to affect the excitability of the nerve, an increased pH level (i.e., greater alkalinity) producing a lower threshold of excitability. [34a] Also, a study of 42 boys, aged 6 to 13 years, found a correlation of .523 (p < .001) between a measure of intracellular brain pH and the WISC-III Full Scale IQ. [34b] Moreover, the method of correlated vectors shows that the vector of the 12 WISC subtests’ correlations with pH is significantly correlated with the vector of the subtests’ g loadings (r = +.63, rs = +.53, p < .05). This relationship of brain pH to g certainly merits further study.

Chapter 7 : The Heritability of g

IS g ONE AND THE SAME FACTOR BETWEEN FAMILIES AND WITHIN FAMILIES?

As we shall see, the main factor in the heritability of IQ and other mental tests is g. Also, as we shall see, genetic analysis and the calculation of heritability depend on a comparison of the trait variance between families (BF) and the variance within families (WF). (The separation of the total or population variance and correlation into BF and WF components was introduced in Chapter 6, pp. 141-42.) Therefore, before discussing the heritability of g, we must ask whether the g factor that emerges from a factor analysis of BF correlations is the very same g that emerges from a factor analysis of WF correlations. Recall that BF is the mean of all the full siblings (reared together) in each family in the population; WF is the differences among full siblings (reared together). In other words, some proportion of the total population variance (VP) in a trait measured on individuals is variance between families (VBF) and some proportion is variance within families (VWF). Thus theoretically VP = VBF + VWF. Similarly, the population correlation between any two variables reliably measured on individuals can be apportioned to BF and WF. The method for doing this requires measuring the variables of interest in sets of full siblings who were reared together.
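The partition VP = VBF + VWF, and the corresponding BF and WF correlations, can be illustrated with a small sketch. It assumes sibling pairs measured on two tests, X and Y. The BF quantities are computed from family means, and the WF quantities from each sibling's deviation from his or her own family mean (for pairs, this is equivalent to using the sibling differences, entered in both orders). The scores are hypothetical and the variable names are illustrative only.

```python
from math import sqrt

def pearson(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

# Hypothetical data: one entry per family, each sibling given as (X, Y).
families = [
    ((105, 110), (98, 101)),
    ((120, 118), (111, 115)),
    ((90, 95), (97, 92)),
    ((102, 99), (108, 107)),
    ((85, 88), (91, 96)),
]

# Partition the total sum of squares of X into BF and WF parts.
x_all = [sib[0] for fam in families for sib in fam]
grand_mean = sum(x_all) / len(x_all)
ss_total = sum((x - grand_mean) ** 2 for x in x_all)
ss_bf = ss_wf = 0.0
for fam in families:
    fam_mean = sum(sib[0] for sib in fam) / len(fam)
    ss_bf += len(fam) * (fam_mean - grand_mean) ** 2   # family mean vs. grand mean
    ss_wf += sum((sib[0] - fam_mean) ** 2 for sib in fam)  # sibling vs. family mean

# BF correlation: family means of X against family means of Y.
x_means = [(a[0] + b[0]) / 2 for a, b in families]
y_means = [(a[1] + b[1]) / 2 for a, b in families]
r_bf = pearson(x_means, y_means)

# WF correlation: within-family deviations of X against those of Y.
x_dev = [sib[0] - xm for fam, xm in zip(families, x_means) for sib in fam]
y_dev = [sib[1] - ym for fam, ym in zip(families, y_means) for sib in fam]
r_wf = pearson(x_dev, y_dev)

print(f"SS total = {ss_total:.1f}, SS BF + SS WF = {ss_bf + ss_wf:.1f}")
print(f"r_BF = {r_bf:+.2f}, r_WF = {r_wf:+.2f}")
```

The two sums of squares add up exactly to the total, which is the sample counterpart of VP = VBF + VWF; the BF and WF correlations, by contrast, are free to differ, and that is the question taken up next.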

Why might one expect the correlations between different mental tests, say X and Y, to be any different BF than WF? If the genetic or environmental influences that cause families to differ from one another on X or Y (or both) are of a different nature than the influences that cause differences on X or Y among siblings reared together in the same family, it would be surprising if the BF correlation of X and Y were the same as the WF correlation. And if there were a large number of diverse tests, the probability would be nil that all their intercorrelations would have the same factor structure in both BF and WF if the tests did not reflect the same causal variables acting to the same degree in both cases.

BF differences can be genetic or environmental, or both. A typical source of BF variance is social class or socioeconomic status (SES). Families differ in SES, but siblings reared in the same family do not differ in SES; therefore SES is not a source of WF variance. The same is true of differences associated with race, cultural identification, ethnic cuisines, and other such variables. They differ between families (BF) but seldom differ between full siblings reared together in the same household.

Now consider two sets of tests: A and B, X and Y. If the scores on Tests A and B are both strongly influenced by SES and other variables on which families differ and on which siblings in the same family do not differ, and if scores on Test X and Test Y are very little influenced by these BF variables, we should expect two things: (1) the BF correlation of A and B (rAB) would be larger than the BF correlation of X and Y (rXY), and (2) the BF correlation of A and B (rAB) would be unrelated to the BF correlation of X and Y (rXY). The greater size of the correlation rAB reflects similarity in the greater effect of SES (or other BF variables) on the scores of these two tests. This could be shown further by the fact that the BF correlation is much larger than the WF correlation for tests A and B. The size of the correlation rXY, on the other hand, reflects something other than SES (or other variables) on which families differ. So if the BF rXY and the WF rXY are virtually equal (after correction for attenuation [2]) and if this is also true of the BF and WF correlations for many diverse tests, it suggests that the same causal factors are involved in both the BF and WF correlations for these tests (unlike Tests A and B).

We can examine the hypothesis that the genetic and environmental influences that produce BF differences in tests’ g loadings are the same as the genetic and environmental influences that produce WF differences in the tests’ g loadings. If we find that this hypothesis cannot be rejected, we can rule out the supposed direct BF environmental influences on g, such as SES, racial-cultural differences, and the like. The observed mean differences between different SES, racial, and cultural groups on highly g-loaded tests then must be attributed to the same influences that cause differences among the siblings within the same families. As will be explicated later, these sibling differences result from both genetic and environmental (better called nongenetic) effects.

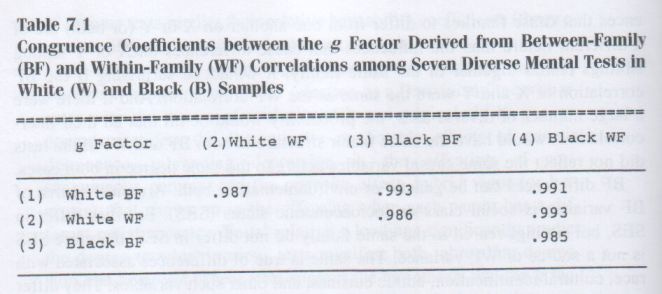

Two large-scale studies have tested this hypothesis. In each study, a g factor was extracted from the BF correlations among a number of highly diverse tests and also from the WF correlations among the same tests. [3] These two factors are referred to as gBF and gWF.

The first study [4] was based on pairs of siblings (nearest in age, in grades 2 to 6) from 1,495 white and 901 black families. They were all given seven highly diverse age-standardized tests (Memory, Figure Copying, Pictorial IQ, Nonverbal IQ, Verbal IQ, Vocabulary, and Reading Comprehension). Both BF and WF correlations among the tests were obtained separately for whites and blacks, and a g factor (first principal component) was extracted from each of the four correlation matrices. The degree of similarity between factors is properly assessed by the coefficient of congruence, rc. [5] Two factors with values of rc larger than .90 are generally interpreted as “highly similar”; values above .95 as having “virtual identity.” The values of rc are shown in Table 7.1. They are all very high and probably not significantly different from one another. The high congruence of the gBF and gWF factors in each racial group indicates that g is clearly an intrinsic factor (as defined in Chapter 6, p. 139). Also, both gBF and gWF are virtually the same across racial groups. In this California school population, little, if any, of the variance in the g factor in these tests is attributable to the effects of SES or cultural differences. Whatever SES and cultural differences may exist in this population do not alter the character of the general factor that all these diverse tests have in common.
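For readers unfamiliar with the coefficient of congruence, a minimal sketch follows. It computes rc as the sum of the products of corresponding loadings divided by the square root of the product of the two sums of squared loadings. The two loading vectors are hypothetical and are not the values behind Table 7.1.

```python
from math import sqrt

def congruence(a, b):
    """Coefficient of congruence between two vectors of factor loadings."""
    numerator = sum(x * y for x, y in zip(a, b))
    denominator = sqrt(sum(x * x for x in a) * sum(y * y for y in b))
    return numerator / denominator

# Hypothetical g loadings of seven tests (NOT the values from Table 7.1).
g_bf = [0.45, 0.60, 0.72, 0.78, 0.81, 0.76, 0.74]
g_wf = [0.40, 0.55, 0.70, 0.75, 0.80, 0.72, 0.70]

rc = congruence(g_bf, g_wf)
print(f"rc = {rc:.3f}")
```

Unlike the Pearson r, the congruence coefficient does not subtract the mean of each vector, so it reflects agreement in the absolute size of the loadings as well as in their pattern.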

The second study [6] is based on groups that probably have more distinct cultural differences than black and white schoolchildren in California, namely, teenage Americans of Japanese ancestry (AJA) and Americans of European ancestry (AEA), all living in Hawaii. Each group was composed of full siblings from a large number of families. They were all given a battery of fifteen highly diverse cognitive tests representing such first-order factors as verbal, spatial, perceptual speed and accuracy, and visual memory. In this study, the g factor was extracted as a second-order factor in a confirmatory hierarchical factor analysis. The same type of factor analysis was performed separately on the BF and WF correlations in each racial sample. The congruence coefficient between gBF and gWF was +.99 in both the AJA group and the AEA group, and the congruence across the AJA and AEA groups was +.99 for both gBF and gWF. These results are essentially the same as those in the previous study, even though the populations, tests, and methods of extracting the general factor all differed. Moreover, the four first-order group factors showed almost as high congruence between BF and WF and between AJA and AEA as did the second-order g factor. The authors of the second study, behavioral geneticists Craig Nagoshi and Ronald Johnson, [6] concluded, “Nearly all of the indices used in the present analyses thus support a high degree of similarity in the factor structures of cognitive ability test scores calculated between versus within families. In other words, they suggest that the genetic and environmental factors underlying cognitive abilities are intrinsic in nature. These indices also suggest that these BF and WF structures are similar across the AEA and AJA ethnic groups, despite some earlier findings that may have led one to expect especially strong between-family effects for the AJA group” (p. 314).

EMPIRICAL EVIDENCE ON THE HERITABILITY OF IQ

Also, due to “placement bias” by adoption agencies, the environments of the separated MZ twins in these studies are not perfectly uncorrelated, so one could argue that the high correlation between MZAs is attributable to the similarity of the postadoptive environments in which they were reared. This problem was thoroughly investigated in the MZAs of the ongoing Minnesota twin study, [10] which has a larger sample of MZAs than any other study to date. It is not enough simply to show that there is a correlation between the separated twins’ environments on such variables as father’s and mother’s level of education, their socioeconomic status, their intellectual and achievement orientation, and various physical and cultural advantages in the home environment. One must also take account of the degree to which these placement variables are correlated with IQ. The placement variables’ contribution to the MZA IQ correlation, then, is the product of the MZA correlation on measures of the placement variables and the correlation of the placement variables with IQ. This product, it turns out, is exceedingly small and statistically nonsignificant, ranging from -.007 to +.032, with an average of +.0045, when calculated for nine different placement variables. In other words, similarities in the MZAs’ environments cannot possibly account for more than a minute fraction of the IQ correlation of +.75 between MZAs. If there were no genetic component at all in the correlation between the twins’ IQs, the correlation between their environments would not account for an IQ correlation of more than +.10. [...]

The diminishing, or even vanishing, effect of differences in home environment revealed by adoption studies, at least within the wide range of typical, humane child-rearing environments in the population, can best be understood in terms of the changing aspects of the genotype-environment (GE) covariance from predominantly passive, to reactive, to active. [16] (See Figure 7.1, p. 174.)

The passive component of the GE covariance reflects all those things that happen to the phenotype, independent of its own characteristics. For example, the child of musician parents may have inherited genes for musical talent and is also exposed (through no effort of its own) to a rich musical environment.

The reactive component of the GE covariance results from the reaction of others to the individual’s phenotypic characteristics that have a genetic basis. For example, a child with some innate musicality shows an unusual sensitivity to music, so the parents give the child piano lessons; the teacher is impressed by the child’s evident musical talent and encourages the child to work toward a scholarship at Juilliard. The phenotypic expression of the child’s genotypic musical propensities causes others to treat this child differently from how they would treat a child without these particular propensities. Each expression of the propensity has consequences that lead to still other opportunities for its expression, thus propelling the individual along the path toward a musical career.

The active component of the GE covariance results from the child’s actively seeking and creating environmental experiences that are most compatible with the child’s genotypic proclivities. The child’s enlarging world of potential experiences is like a cafeteria in which the child’s choices are biased by genetic factors. The musical child uses his allowance to buy musical recordings and to attend concerts; the child spontaneously selects radio and TV programs that feature music instead of, say, cartoons or sports events; and while walking alone to school the child mentally rehearses a musical composition. The child’s musical environment is not imposed by others, but is selected and created by the child. (The same kind of examples could be given for a great many other inclinations and interests that are probably genetically conditioned, such as literary, mathematical, mechanical, scientific, artistic, histrionic, athletic, and social talents.) The child’s genotypic propensity can even run into conflict with the parents’ wishes and expectations.

From early childhood to late adolescence the predominant component of the GE covariance gradually shifts from passive to reactive to active, which makes for increasing phenotypic expression of individuals’ genotypically conditioned characteristics. In other words, as people approach maturity they seek out and even create their own experiential environment. With respect to mental abilities, a “good” environment, in general, is one that affords the greatest freedom and the widest variety of opportunities for reactive and active GE covariance, thereby allowing genotypic propensities their fullest phenotypic expression. [...]

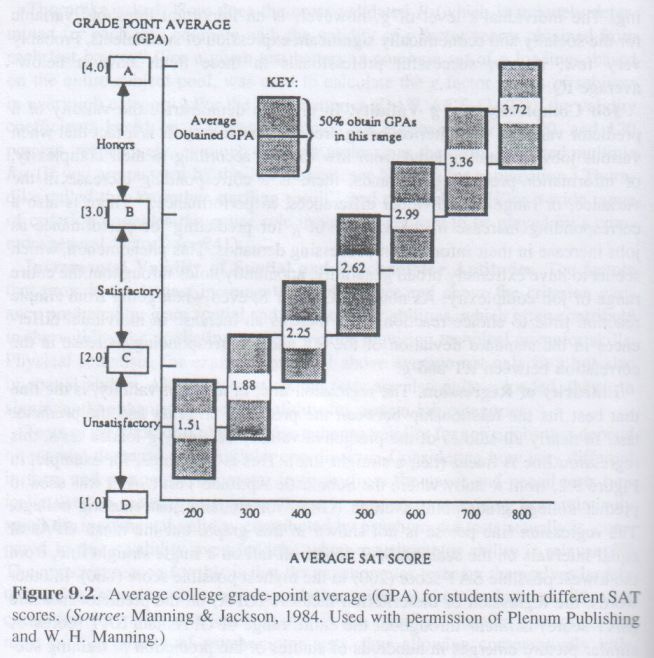

Heritability of Scholastic Achievement. There is no better predictor of scholastic achievement than psychometric g, even when the g factor is extracted from tests that have no scholastic content. In fact, the general factor of both scholastic achievement tests and teachers’ grades is highly correlated with the g extracted from cognitive tests that are not intended to measure scholastic achievement. It should not be surprising, then, that scholastic achievement has about the same broad heritability as IQ.

Three large-scale studies [18] of the heritability of scholastic attainments, based on twin, parent-child, and sibling correlations, have shown broad heritability coefficients that average about .70, which does not differ significantly from the heritability of IQ in the same samples. The heritability coefficients for various school subjects range from about .40 to .80. As will be seen in the following section, nearly all of the variance that measures of scholastic achievement have in common with nonscholastic cognitive tests consists of the genetic component of g itself.

GENETIC AND ENVIRONMENTAL COMPONENTS OF THE g FACTOR PER SE

Heritability. Of course, no single kinship correlation (except that of MZ twins reared apart) proves that genetic factors are involved in it. The correlation theoretically could be entirely environmental, due to the relatives’ shared family environment. To determine whether it is the genetic component of mental tests’ variance that is mainly reflected by g, we have to look at the heritability coefficients of various tests. Again, we can apply the method of correlated vectors, using a number of diverse cognitive tests and looking at the relationship between the column vector of the tests’ g loadings (Vg) and the column vector of the tests’ heritability coefficients (Vh). Each test’s heritability coefficient in each of the following studies was determined by the twin method. [2]
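The logic of the method of correlated vectors can be illustrated with a minimal sketch. It assumes that the twin method takes the simple Falconer form, h² = 2(rMZ − rDZ), and that the two vectors are compared by a rank-order (Spearman) correlation; the cited studies may have used other estimators. All of the numbers below are hypothetical and serve only to show the computation.

```python
from math import sqrt

def falconer_h2(r_mz, r_dz):
    """Falconer-style heritability estimate (an assumed form of the twin method)."""
    return 2.0 * (r_mz - r_dz)

def ranks(values):
    """Return 1-based ranks, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            result[order[k]] = average_rank
        i = j + 1
    return result

def pearson(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

def correlated_vectors(v_g, v_h):
    """Rank-order correlation between g loadings (Vg) and heritabilities (Vh)."""
    return pearson(ranks(v_g), ranks(v_h))

# Hypothetical data for five tests (not taken from any of the cited studies).
v_g = [0.80, 0.70, 0.60, 0.50, 0.40]    # g loadings
r_mz = [0.80, 0.75, 0.70, 0.60, 0.62]   # MZ twin correlations
r_dz = [0.45, 0.45, 0.45, 0.40, 0.40]   # DZ twin correlations
v_h = [falconer_h2(mz, dz) for mz, dz in zip(r_mz, r_dz)]

rho = correlated_vectors(v_g, v_h)
print(f"Vg x Vh rank-order correlation: {rho:+.2f}")
```

With only as many data points as there are tests, such a correlation has few degrees of freedom, which is why agreement across independent studies carries more weight than any single coefficient.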

Three independent studies [22] have used MZT and DZT twins to obtain the heritability coefficients of each of the eleven subtests of the Wechsler Adult Intelligence Scale. The correlations between Vg and Vh were +.62 (p < .05), +.61 (p < .05), and +.55 (p < .10). A fourth independent study [23] was based on a model-fitting method applied to adult MZ twins reared apart in addition to MZT and DZT to estimate the heritability coefficients of thirteen diverse cognitive tests used in studies of subjects in the Swedish National Twin Registry. The Vg x Vh correlation was +.77 (p < .025). (In this study, the g factor scores [based on the first principal component] had a heritability coefficient of .81.) The joint probability [24] that these Vg x Vh correlations based on four independent studies could have occurred by chance if there were not a true relationship between tests’ g loadings and the tests’ heritability coefficients is less than one in a hundred (p < .01).
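One standard way to combine the significance levels of independent studies is Fisher's method, sketched below. That the joint probability reported above was computed this way is an assumption; the procedure actually used is given in the cited note. The inputs are the upper bounds quoted in the text (.05, .05, .10, .025), so the result is itself an upper bound on the combined probability.

```python
from math import exp, log

def fisher_combined_p(p_values):
    """Combine independent p-values by Fisher's method.

    The statistic X = -2 * sum(ln p) is chi-square distributed with 2k degrees
    of freedom under the null hypothesis. For even degrees of freedom the upper
    tail has a closed form: exp(-X/2) * sum_{i<k} (X/2)^i / i!.
    """
    half_stat = -sum(log(p) for p in p_values)  # this is X / 2
    term, total = 1.0, 1.0                      # i = 0 term of the series
    for i in range(1, len(p_values)):
        term *= half_stat / i
        total += term
    return exp(-half_stat) * total

# Upper bounds on the p-values reported for the four Vg x Vh correlations.
reported = [0.05, 0.05, 0.10, 0.025]
combined = fisher_combined_p(reported)
print(f"combined p (Fisher's method) is below {combined:.4f}")
```

Under these assumptions the combined value comes out near .002, which agrees with the statement that the joint probability is less than one in a hundred.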

Mental Retardation. Another relevant study [25] applied the method of correlated vectors to the data from over 4,000 mentally retarded persons who had taken the eleven subtests of the Wechsler Intelligence Scales. The column vector composed of the retarded persons’ mean scaled scores on each of the Wechsler subscales and the column vector of the subscales’ heritability coefficients (as determined by the twin method in three independent studies of normal samples) were rank-order correlated -.76 (one-tailed p < .01), -.46 (p < .08), and -.50 (p < .06). In other words, the higher a subtest’s heritability, the lower is the mean score of the retarded subjects (relative to the mean of the standardization population of the WISC-R).

The study also tested the hypothesis that Wechsler subtests on which the retarded perform most poorly are the subtests with the larger g loadings; that is, there is an inverse relationship (i.e., negative correlation) between the vector of mean scaled scores and the corresponding vector of their g loadings. This hypothesis was examined in four different versions of the Wechsler Intelligence Scales (WAIS, WAIS-R, WISC, WISC-R). Each version was given to a different group of retarded persons. On each of these versions of the Wechsler test the vector of the mean scaled scores and the vector of their g loadings were rank-order correlated, giving the following correlation coefficients:

WAIS .0

WAIS-R -.67 (p < .05)

WISC -.63 (p < .05)

WISC-R -.60 (p < .05)

The WAIS is clearly an outlier and appears to be based on an atypical group of retarded persons. The vectors of g loadings on all four of the Wechsler versions are all highly congruent (the six congruence coefficients range from .993 to .998), so it is only the vector of scaled scores of the group that took the WAIS that is anomalous. It is the only group whose vector of scaled scores is not significantly correlated with the corresponding vectors in the other three groups, all of which are highly concordant in their vectors of scaled scores. In general, these data show:

1. There is a genetic component in mental retardation.

2. This genetic component reflects the same genetic component that accounts for individual differences in the nonretarded population.

3. The genetic component in mental retardation is expressed in the same g factor that is a major source of variance in mental test scores in the nonretarded population.
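The congruence coefficients mentioned above compare the g-loading vectors of the four Wechsler versions pair by pair, and four versions yield six pairs. A minimal sketch follows, assuming the usual (Tucker) definition of the congruence coefficient. The loadings are hypothetical and are shortened to four subtests for brevity.

```python
from itertools import combinations
from math import sqrt

def congruence(x, y):
    """Congruence coefficient: sum(x*y) / sqrt(sum(x^2) * sum(y^2))."""
    numerator = sum(a * b for a, b in zip(x, y))
    return numerator / sqrt(sum(a * a for a in x) * sum(b * b for b in y))

# Hypothetical g loadings on the same four subtests in each version.
loadings = {
    "WAIS":   [0.80, 0.70, 0.60, 0.50],
    "WAIS-R": [0.78, 0.72, 0.61, 0.48],
    "WISC":   [0.82, 0.69, 0.58, 0.52],
    "WISC-R": [0.79, 0.71, 0.62, 0.49],
}

# Every unordered pair of versions: 4 choose 2 = 6 coefficients.
coefficients = {
    (a, b): congruence(loadings[a], loadings[b])
    for a, b in combinations(loadings, 2)
}
for (a, b), value in coefficients.items():
    print(f"{a} vs {b}: {value:.3f}")
```

Unlike a correlation, the congruence coefficient does not subtract the means of the vectors, so it is sensitive to the overall level of the loadings as well as to their pattern.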

Decomposition of Psychometric g into Genetic and Environmental Components. The obtained phenotypic correlation between two tests is a composite of genetic and environmental components. Just as it is possible, using MZ and DZ twins, to decompose the total phenotypic variance of scores on a given test into separate genetic and environmental components, it is possible to decompose the phenotypic correlation between any two tests into genetic and environmental components. [26] That is to say, the scores on two tests may be correlated in part because both tests reflect the same genetic factors common to twins and in part because both tests reflect the same environmental influences that are shared by twins who were reared together. Therefore, with a battery of n diverse tests, we can decompose the n x n square matrix of all their phenotypic intercorrelations (the P matrix) into a matrix of the tests’ genetic intercorrelations (the G matrix) and a matrix of the tests’ environmental intercorrelations (the E matrix). Each of these matrices can then be factor analyzed to reveal the separate contributions of genes and environment to the phenotypic factor structure of the given set of tests.
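The decomposition of a single phenotypic correlation can be sketched as follows. The sketch assumes the simple additive twin model, in which MZ twins share all and DZ twins share half of the relevant genetic effects, and it works from cross-twin cross-test correlations (twin A's score on test X with twin B's score on test Y). The function name and all values are hypothetical; the studies discussed in this section used more elaborate model-fitting procedures.

```python
from math import sqrt

def decompose_phenotypic_correlation(r_p, cross_mz, cross_dz):
    """Split the phenotypic correlation between two tests into three parts.

    r_p      : phenotypic correlation between tests X and Y
    cross_mz : cross-twin cross-test correlation in MZ twins reared together
    cross_dz : cross-twin cross-test correlation in DZ twins reared together
    Assumes the simple additive twin model (an assumption of this sketch).
    """
    genetic = 2.0 * (cross_mz - cross_dz)   # part due to shared genes
    shared_env = 2.0 * cross_dz - cross_mz  # part due to shared environment
    nonshared_env = r_p - cross_mz          # part due to nonshared environment
    return genetic, shared_env, nonshared_env

# Hypothetical inputs for one pair of tests.
genetic, shared_env, nonshared_env = decompose_phenotypic_correlation(
    r_p=0.50, cross_mz=0.45, cross_dz=0.25
)

# The genetic correlation rescales the genetic part by the two heritabilities.
h2_x, h2_y = 0.64, 0.64  # hypothetical heritabilities of tests X and Y
genetic_correlation = genetic / sqrt(h2_x * h2_y)

print(f"genetic part:       {genetic:.2f}")
print(f"shared env. part:   {shared_env:.2f}")
print(f"nonshared part:     {nonshared_env:.2f}")
print(f"genetic correlation: {genetic_correlation:.3f}")
```

Applying the same computation to every pair of tests in a battery fills in the G and E matrices, which can then be factor analyzed separately.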

Several studies have been performed using essentially this kind of analysis. I say “essentially” because the analytic methods of the various studies differ depending on the specific mathematical procedures and computer routines used, although their essential logic is as described above. These studies provide the most sophisticated and rigorous analysis of the genetic and environmental composition of g and of some of the well-established group factors independent of g.

Thompson et al. (1991) compared large samples of MZ and DZ twins on sets of tests specifically devised to measure Verbal, Spatial, Speed (perceptual-clerical), and Memory abilities, as well as tests of achievement levels in Reading, Math, and Language. They then obtained ordinary MZ and DZ twin correlations and MZ and DZ cross-twin correlations. From these they formed separate 7 x 7 matrices of G and E correlations. Three of their findings are especially relevant to g theory:

1. The phenotypic correlations of the four ability tests with the three achievement tests are largely due to their genetic correlations, which ranged from .61 to .80.

2. The environmental (shared and nonshared) correlations between the ability tests and achievement tests were all extremely low except for the test of perceptual-clerical speed, which showed very high shared environmental correlations with the three achievement tests.

3. The 7 x 7 matrix of genetic correlations has only one significant factor, which can be called genetic g, that accounts for 77 percent of the total genetic variance in the seven variables. (The genetic g loadings of the seven variables range from .62 to .99.) Obviously the remainder of the genetic variance is contained in other factors independent of g. The authors of the study concluded, “Ability-achievement associations are almost exclusively genetic in origin” (p. 164). This does not mean that the environment does not affect the level of specific abilities and achievements, but only that the correlations between them are largely mediated by the genetic factors they have in common, most of which is genetic g, that is, the general factor of the genetic correlations among all of the tests.
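The proportion of variance attributed to a general factor can be illustrated by extracting the first principal component of a correlation matrix. This is a sketch only: the extraction method used by Thompson et al. is not stated here, and the 3 x 3 matrix below is hypothetical rather than the 7 x 7 genetic matrix of that study.

```python
from math import sqrt

def first_principal_component(corr, iterations=200):
    """Largest eigenvalue and component loadings of a correlation matrix.

    Uses power iteration, which suits a matrix of positive correlations
    because its dominant eigenvector then has entries of a single sign.
    """
    n = len(corr)
    v = [1.0 / sqrt(n)] * n
    for _ in range(iterations):
        w = [sum(corr[i][j] * v[j] for j in range(n)) for i in range(n)]
        norm = sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    eigenvalue = sum(
        v[i] * sum(corr[i][j] * v[j] for j in range(n)) for i in range(n)
    )
    loadings = [sqrt(eigenvalue) * x for x in v]
    return eigenvalue, loadings

# Hypothetical matrix of genetic correlations among three tests.
genetic_corr = [
    [1.0, 0.5, 0.5],
    [0.5, 1.0, 0.5],
    [0.5, 0.5, 1.0],
]

eigenvalue, loadings = first_principal_component(genetic_corr)
proportion = eigenvalue / len(genetic_corr)  # share of total variance
print(f"general factor accounts for {proportion:.0%} of the variance")
print("loadings:", [round(x, 3) for x in loadings])
```

The share of variance is the eigenvalue divided by the number of variables, because each standardized variable contributes one unit to the total variance.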

Luo et al. (1994) decomposed separate hierarchical (Schmid-Leiman) factor analyses of two batteries of tests (eight subtests of the Specific Cognitive Abilities test and eleven subtests of the WISC-R) into genetic and environmental components, using large samples of MZT and DZT twins in a model-fitting procedure. They then compared the factor loadings derived from the matrix of phenotypic correlations with those derived from the matrix of genetic correlations.

The phenotypic g and genetic g found by Luo et al. are highly similar. The correlation between the vector of phenotypic g loadings and the vector of genetic g loadings was +.88 (p < .01) for the Specific Cognitive Abilities (SCA) tests and +.85 (p < .01) for the WISC-R.

A general factor which can be called environmental g was extracted from the environmental correlations among the variables. Only the environmental correlations based on the twins’ shared environment are discussed here. (The test intercorrelations that arose from the nonshared environment yielded a negligible and nonsignificant “general” factor in both the SCA and WISC-R, with factor loadings ranging from -.01 to +.35 and averaging +.08.) The correlation between the vector of phenotypic g loadings and the vector of (shared) environmental g loadings was +.28 for the SCA tests and +.09 for the WISC-R. In brief, phenotypic g closely reflects the genetic g, but bears hardly any resemblance to the (shared) environmental g.

Pedersen et al. (1994) conducted a similar study in Sweden of thirteen diverse cognitive tests taken by MZ and DZ twins, but focused on the non-g genetic variance in the battery. They found that although genetic g accounts for most of the genetic variance in the battery of tests, it does not account for all of it. When the genetic g is wholly removed from the tests’ total variances, some 12 to 23 percent of the remaining variance is genetic. This finding accords with the previous study (Luo et al., 1994), which also found that 23 percent of the genetic variance resides in factors other than g. Pedersen et al. (1994) concluded that “phenotypic g-loadings can be used as an initial screening device to identify tests that are likely to show greater or less genetic overlap with g . . . if one were to pick a single dimension to focus the search for genes, g would be it” (p. 141).

Another study [27] focused on genetic influence on the first-order group factors, independent of g, in a Schmid-Leiman hierarchical factor analysis, using confirmatory factor analysis. The phenotypic factor analysis, based on eight diverse tests, yielded four first-order factors (Verbal, Spatial, Perceptual Speed, and Memory) plus the second-order factor, g. Using data on large samples of adopted and nonadopted children, and natural and unrelated siblings, the phenotypic factors were decomposed into the following variance components: genetic, shared environment, and unique (nonshared) environment. Of the variance of phenotypic g, .72 was genetic and .28 was due to nonshared environmental effects. Although g carries more of the genetic variance than any of the first-order factors, three of the first-order factors (Verbal, Spatial, and Memory) have distinct genetic components independent of genetic g. (There is no genetic Perceptual Speed factor independent of genetic g.) Very little of the environmental variance gets into even the first-order factors, much less the second-order g factor. The environmental variance resides mostly in the tests’ specificities, that is, the residual part of the tests’ true-score variance that is not included in the common factors. It is especially noteworthy that nearly all of the environmental variance is due to nonshared environmental effects. Shared environmental influences among children reared together contribute negligibly to the variance and covariance of the test scores. [28] In this study of children who all were beyond Grade 1 in school and averaged 7.4 years of age, g and the group factors reflect virtually no effects of shared environment.

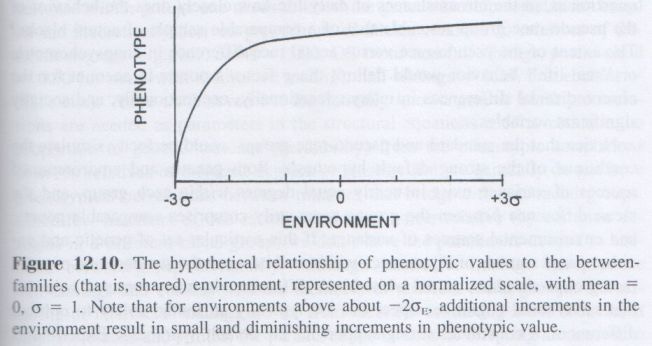

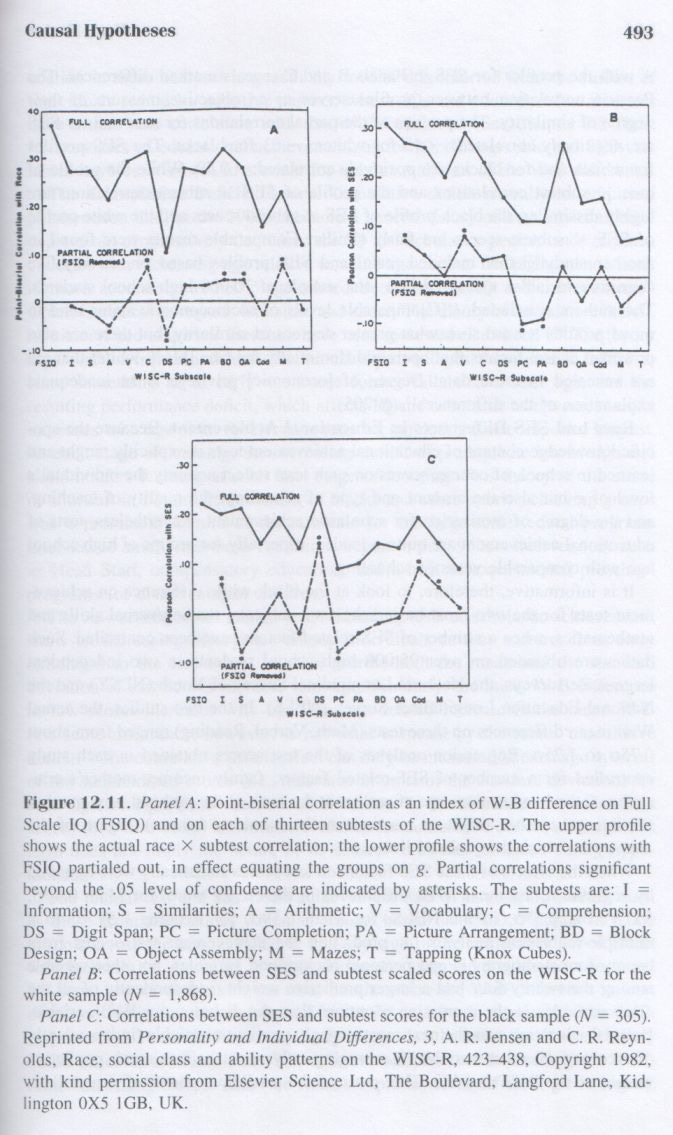

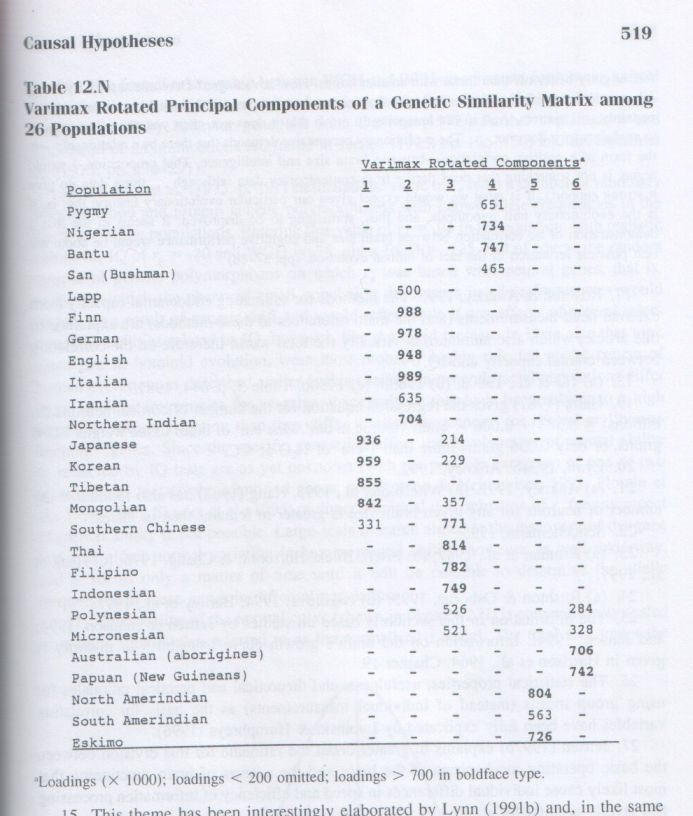

Genetic g of Scholastic Achievement. As noted previously, psychometric g is highly correlated with scholastic achievement and both variables have substantial heritability. It was also noted that it is the genetic component of g that largely accounts for the correlation between scores on nonscholastic ability tests and scores on scholastic achievement tests. The phenotypic correlations among different content areas of scholastic achievement have been analyzed into their genetic and shared and nonshared environmental components in one of the largest twin studies ever conducted. [29] The American College Testing (ACT) Program provided 3,427 twin pairs, both MZ and DZ, for the study, which decomposed the phenotypic correlations among the four subtests of the ACT college admissions examination. The four subtests of the ACT examination are English, Mathematics, Social Studies, and Natural Sciences.