by Jean Philippe Rushton (1997)

CONTENTS

Chapter 2: Character Traits

Chapter 3: Behavioral Genetics

Chapter 4: Genetic Similarity Theory

Chapter 6: Race, Brain Size, and Intelligence

Chapter 7: Speed of Maturation, Personality, and Social Organization

Chapter 8: Sexual Potency, Hormones, and AIDS

Chapter 9: Genes Plus Environment

Chapter 11: Out of Africa

Chapter 12: Challenges and Rejoinders

Chapter 13: Conclusions and Discussion

Chapter 2 : Character Traits

The Altruistic Personality

The most important and largest study of the problem of generality versus specificity in behavior concerned altruism. This is the classic “Character Education Enquiry” carried out by Hartshorne and May in the 1920s and published in three books (Hartshorne & May, 1928; Hartshorne, May, & Maller, 1929; Hartshorne, May, & Shuttleworth, 1930). These investigators gave 11,000 elementary and high school students some 33 different behavioral tests of altruism (referred to as the “service” tests), self-control, and honesty in home, classroom, church, play, and athletic contexts. Concurrently, ratings of the children’s reputations with teachers and classmates were obtained. Altogether, more than 170,000 observations were collected. Scores on the various tests were correlated to discover whether behavior is specific to situations or consistent across them.

This study is still regarded as a landmark that has not been surpassed by later work. It will be discussed in some detail because it is the largest examination of the question ever undertaken, it raises most of the major points of interest, and it has been seriously misinterpreted by many investigators. The various tests administered to the children are summarized in Table 2.1.

First, the results based on the measures of altruism showed that any one behavioral test of altruism correlated, on the average, only 0.20 with any other test. But when the five behavioral measures were aggregated into a battery, they correlated a much higher 0.61 with the measures of the child’s altruistic reputation among his or her teachers and classmates. Furthermore, the teachers’ and peers’ perceptions of the students’ altruism were in close agreement (r = 0.80). These latter results indicate a considerable degree of consistency in altruistic behavior. In this regard, Hartshorne et al. (1929:107) wrote:

The correlation between the total service score and the total reputation scores is .61 … Although this seems low, it should be borne in mind that the correlations between test scores and ratings for intelligence seldom run higher than .50.

Similar results were obtained for the measures of honesty and self-control. Any one behavioral test correlated, on average, only 0.20 with any other test. If, however, the measures were aggregated into batteries, then much higher relationships were found either with other combined behavioral measures, with teachers’ ratings of the children, or with the children’s moral knowledge scores. Often, these correlations were on the order of 0.50 to 0.60. For example, the battery of tests measuring cheating by copying correlated 0.52 with another battery of tests measuring other types of classroom cheating. Thus, depending on whether the focus is on the relationship between individual measures or on the relationship between averaged groups of behaviors, the notions of situational specificity and situational consistency are both supported. Which of these two conclusions is more accurate?

Hartshorne and colleagues focused on the small correlations of 0.20 and 0.30. Consequently, they argued (1928: 411) for a doctrine of specificity:

Neither deceit nor its opposite, “honesty” are unified character traits, but rather specific functions of life situations. Most children will deceive in certain situations and not in others. Lying, cheating, and stealing as measured by the test situations used in these studies are only very loosely related.

Their conclusions and data have often been cited in the subsequent literature as supporting situational specificity. For example, Mischel’s (1968) influential review argued for specificity on the ground that contexts are important and that people have different methods of dealing with different situations.

Unfortunately Hartshorne and May (1928-30), P. E. Vernon (1964), Mischel (1968), and many others, including me (Rushton, 1976), had seriously overinterpreted the results as implying that there was not enough cross-situational consistency to make the concept of traits very useful. This, however, turned out to be wrong. By focusing on correlations of 0.20 and 0.30 between any two measures, a misleading impression is created. A more accurate picture is obtained by examining the predictability achieved from a number of measures. This is because the randomness in any one measure (error and specificity variance) is averaged out over several measures, leaving a clearer view of what a person’s true behavior is like. Correlations of 0.50 and 0.60 based on aggregated measures support the view that there is cross-situational consistency in altruistic and honest behavior.
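The averaging argument can be made concrete with the Spearman-Brown formula from classical test theory. The text does not name this formula, so its use here is an assumption, and the function name `spearman_brown` is only illustrative. The sketch shows how the reliability of a composite grows as more measures, each correlating about 0.20 with the others, are averaged together.

```python
def spearman_brown(mean_r: float, k: int) -> float:
    """Reliability of a composite of k measures.

    mean_r is the average correlation between any two single measures.
    Assumption: all measures are parallel (equally reliable, equal
    intercorrelations), which real test batteries only approximate.
    """
    if k < 1:
        raise ValueError("k must be at least 1")
    return k * mean_r / (1 + (k - 1) * mean_r)


if __name__ == "__main__":
    # Single tests correlate about 0.20 with one another in the text.
    for k in (1, 5, 10, 20):
        print(k, round(spearman_brown(0.20, k), 2))
```

With five measures the composite reliability is about 0.56, the same order of magnitude as the 0.50 to 0.60 correlations reported for the aggregated batteries. The formula gives the reliability of the composite itself, not its correlation with reputation ratings, so it is not a reproduction of the 0.61 figure.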

Further evidence for this conclusion is found in Hartshorne and May’s data. Examination of the relationships between the battery of altruism tests and batteries concerned with honesty, self-control, persistence, and moral knowledge suggested a factor of general moral character (see, e.g., Hartshorne et al., 1930: 230, Table 32). Maller (1934) was one of the first to note this. Using Spearman’s tetrad difference technique, Maller isolated a common factor in the intercorrelations of the character tests of honesty, altruism, self-control, and persistence. Subsequently, Burton (1963) reanalyzed the Hartshorne and May data and found a general factor that accounted for 35-40 percent of common variance.

As Eysenck (1970), among others, has repeatedly pointed out, failures to take account of the necessity to average across a number of exemplars in order to see consistency led to the widespread and erroneous view that moral behavior is almost completely situation specific. This, in turn, led students of moral development to neglect research aimed at discovering the origins of general moral “traits”. The fact that, judging from the aggregated correlational data, moral traits do exist, and, moreover, appear to develop early in life, poses a considerable challenge to developmental research.

Judges’ Ratings

One traditionally important source of data has been the judgments and ratings of people made by their teachers and peers. In recent years, judges’ ratings have been much maligned on the ground that they are little more than “erroneous constructions of the perceiver.” This pervasive view has led to a disenchantment with the use of ratings. The main empirical reason that is cited for rejecting rating methods is that judges’ ratings only correlate, on the average, 0.20 to 0.30. However, it is questionable whether correlations between two judges’ ratings are stable and representative. The validity of judgments increases as the number of judges becomes larger.

Galton (1908) provided an early demonstration from a cattle exhibition where 800 visitors judged the weight of an ox. He found that the individual estimates were distributed in such a way that 50 percent fell between plus or minus three percent of the middlemost value that was itself within one percent of the real value. Galton likened the results to the votes given in a democracy where, with the reservation that the voters be versed in the issues, the vox populi was correct. Shortly thereafter, K. Gordon (1924) had subjects order a series of objects by weight. When the number of subjects making the judgment increased from 1 to 5 to 50, the corresponding validities increased from 0.41 to 0.68 to 0.94.
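The rise in validity with the number of judges can be sketched under a simple model. The assumption, which is not stated in the text, is that each judge's rating equals the true value plus independent error, so that two judges correlate with each other only through the true value. The function name `pooled_validity` is hypothetical.

```python
import math


def pooled_validity(single_validity: float, n_judges: int) -> float:
    """Correlation between the mean of n_judges ratings and the true value.

    Assumption: judges' errors are independent, so the correlation between
    any two judges equals single_validity squared.
    """
    if n_judges < 1:
        raise ValueError("n_judges must be at least 1")
    inter_judge_r = single_validity ** 2
    return single_validity * math.sqrt(
        n_judges / (1 + (n_judges - 1) * inter_judge_r)
    )


if __name__ == "__main__":
    # Start from Gordon's single-judge validity of 0.41.
    for n in (1, 5, 50):
        print(n, round(pooled_validity(0.41, n), 2))
```

Starting from a single-judge validity of 0.41, this model gives values near 0.71 for 5 judges and 0.95 for 50, close to the 0.68 and 0.94 that Gordon reported. The small gap may simply reflect that real judges' errors are not fully independent.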

Longitudinal Stability

The question of cross-situational consistency becomes a question about longitudinal consistency when the time dimension is introduced. To what extent, over both time and situation, do a person’s behaviors stem from enduring traits of character? When studies measure individual differences by aggregating over many different assessments, longitudinal stability is usually found. But when single measurements or other less reliable techniques are used, longitudinal stability is less marked.

Intelligence is the trait with the strongest stability over time. The ordering of an individual relative to his or her age cohort over the teenage and adult years shows typical correlations of 0.62 to 0.94 over 7 to 40 years (Brody, 1992). The trend is for the correlations to decline as the period of time between administrations of the test increases. But the correlations can be increased by further aggregation. For example, the combined score from tests administered at ages 10, 11, and 12 correlates 0.96 with a combined score from tests administered at ages 17 and 18 (Pinneau, 1961). This latter finding suggests that there was essentially no change at all in an individual’s score relative to his or her cohort over the high school years.

Intelligence in infancy, however, is either slightly less stable or somewhat less easy to measure. A composite of tests taken from 12 to 24 months predicts the composite at ages 17 and 18 with a correlation of around 0.50 (Pinneau, 1961). Newer techniques based on infant habituation and recognition memory (the infant’s response to a novel or familiar stimulus) made in the first year of life predict later IQ assessed between 1 and 8 years of age with a weighted (for sample size) average of between 0.36 and 0.45 (McCall & Carriger, 1993).

The stability of personality has been demonstrated over several 30-year longitudinal studies. To summarize these, Costa and McCrae (1994:21) quote William James (1890/1981), saying that once adulthood has been reached, personality is “set like plaster.” At younger ages, personality stability was demonstrated by Jack Block (1971, 1981) in work where the principle of aggregation was strictly adhered to. For about 170 individuals, data were first obtained in the 1930s when the subjects were in their early teens. Further data were gathered when the subjects were in their late teens, in their mid-30s, and in their mid-40s. The archival data so generated were enormously wide-ranging and often not in a form permitting direct quantification. Block systematized the data by employing clinical psychologists to study individual dossiers and to rate the subject’s personality using the Q-sort procedure, a set of descriptive statements such as “is anxious,” which can be sorted into piles that indicate how representative the statement is of the subject. To ensure independence, the materials for each subject were carefully segregated by age level, and no psychologist rated the materials for the same subject at more than one time period. The assessments by the different raters (usually three for each dossier) were found to agree with one another to a significant degree, and they were averaged to form an overall description of the subject at that age.

Block (1971, 1981) found personality stability across the ages tested. Even the simple correlations between Q-sort items over the 30 years between adolescence and the mid-40s provided evidence for stability. Correlations indicating stability were, for example, for the male sample: “genuinely values intellectual and cognitive matters,” 0.58; “is self-defeating,” 0.46; and “has fluctuating moods,” 0.40; for the female sample, “is an interesting, arresting person,” 0.44; “aesthetically reactive,” 0.41; and “is cheerful,” 0.36. When the whole range of variables for each individual was correlated over 30 years, the mean correlation was 0.31. When typologies were created, the relationships became even more substantial.

Using self-reports instead of judgments made by others, Conley (1984) analyzed test-retest data from 10 to 40 years for major dimensions of personality such as extraversion, neuroticism, and impulsivity. The correlations in different studies ranged from 0.26 to 0.84 for periods extending from 10 to 40 years, with an average of about 0.45 for the 40-year period. Overall the personality traits were only slightly less consistent over time than were measures of intelligence (0.67, in this study).

Longitudinal stability has been cross-validated using different procedures. Thus, one method is used to assess personality at Time 1 (e.g., ratings made by others) and a quite different method at Time 2 (e.g., behavioral observations). Olweus (1979), for example, reported correlations of 0.81 over a 1-year time period between teacher ratings of the aggressive behavior of children and frequency count observations of the actual aggressive behavior. Conley (1985) reported correlations of about 0.35 between ratings made by a person’s acquaintances as they were about to get married and self-reports made some 20 years later.

In a 22-year study of the development of aggression, Eron (1987) found that children rated as aggressive by their peers when they were 8 years old were rated as aggressive by a different set of peers 10 years later and were 3 times more likely to have been entered in police records by the time they were 19 than those not so rated. By age 30, these children were more likely to have engaged in a syndrome of antisocial behavior including criminal convictions, traffic violations, child and spouse abuse, and physical aggressiveness outside the family. Moreover, the stability of aggression was found to exist across three generations, from grandparents to children to grandchildren. The 22-year stability of aggressive behavior is 0.50 for men and 0.35 for women.

Also in the 22-year data, early ratings of prosocial behavior were positively related to later prosocial behavior and negatively related to later antisocial behavior. Children rated as concerned about interpersonal relations at age 8 had higher occupational and educational attainment as well as low aggression, social success, and good mental health, whereas aggression at age 8 predicted social failure, psychopathology, aggression, and low educational and occupational success. In all of these analyses, social class was held constant. Eron’s (1987) data suggested that aggression and prosocial behavior are at two ends of a continuum (see Figure 2.3).

The general conclusion is that once people reach the age of 30 there is little change in the major dimensions of personality. McCrae and Costa (1990; Costa & McCrae, 1992) reviewed six longitudinal studies published between 1978 and 1992, including two of their own. The six had quite different samples and rationales but came to the same conclusions. Basic tendencies typically stabilized somewhere between 21 and 30. Retest measures for both self-reports and ratings made by others are typically about 0.70. Moreover, anything these dimensions affect stabilizes as well, such as self-concept, skills, interests, and coping strategies.

Predicting Behavior

Although a great deal of effort has gone into refining paper and pencil and other techniques for measuring attitudes, personality, and intelligence, relatively little attention has been given to the adequacy of measurements on the behavioral end of the relationship. Whereas the person end of the person-behavior relationship has often been measured by multi-item scales, the behavior to be predicted has often comprised a single act.

Fishbein and Ajzen (1974) proposed that multiple-act criteria be used on the behavioral side. Using a variety of attitude scales to measure religious attitudes and a multiple-item religious behavior scale, they found that attitudes were related to multiple-act criteria but had no consistent relationship to single-act criteria. Whereas the various attitude scales had a mean correlation with single behaviors ranging from 0.14 to 0.19, their correlations with aggregated behavioral measures ranged from 0.70 to 0.90.

In a similar paper to Fishbein and Ajzen’s, Jaccard (1974) carried out an investigation to determine whether the dominance scales of the California Psychological Inventory and the Personality Research Form would predict self-reported dominance behaviors better in the aggregate than they would at the single-item level. The results were in accord with the aggregation expectations. Whereas both personality scales had a mean correlation of 0.20 with individual behaviors, the aggregated correlations were 0.58 and 0.64.

Comparable observations were made by Eaton (1983), who assessed activity level in three- and four-year-olds using single versus multiple actometers attached to the children’s wrists as the criterion and teachers’ and parents’ ratings of the children’s activity level as the predictors. The ratings predicted activity scores from single actometers relatively weakly (0.33) while predicting those aggregated across multiple actometers comparatively well (0.69).

One Problem with Experimental Studies

Failures to aggregate dependent variables in experimental situations may produce conclusions about the relative modifiability of behavior that may be incorrect. For example, with respect to social development, it is considered well established that observational learning from demonstrators has powerful effects on social behavior (Bandura, 1969, 1986). These findings have prompted governmental concern about possible inadvertent learning from television. Concerning intellectual development, it is equally well known that intervention programs designed to boost children’s intelligence, some of them employing observational learning, have achieved only modest success (Brody, 1992; Locurto, 1991).

The apparent difference in the relative malleability of social and intellectual development has been explained in various ways. One leading interpretation is that intellectual development is controlled by variables that are “structural” and, therefore, minimally susceptible to learning, whereas social development is controlled by variables that are “motivational” and, therefore, more susceptible to learning. An analysis of the dependent variables used in the two types of studies, however, suggests an interpretation based on the aggregation principle.

In observational learning studies, a single dependent variable is typically used to measure the behavior; for example, the number of punches delivered to a Bobo doll in the case of aggression (Bandura, 1969) or the number of tokens donated to a charity in the case of altruism (Rushton, 1980). In intellectual training studies, however, multiple-item dependent variables such as standardized intelligence tests are typically used. Throughout this discussion it has been stressed that the low reliability of nonaggregated measures can mask strong underlying relationships between variables. In the case of learning studies, it can have essentially the opposite effect. It is always easier to produce a change in some trait as a consequence of learning when a single, less stable measure of the trait is taken than when more stable, multiple measures are taken. This fact may explain why social learning studies of altruism have generally been more successful than training studies of intellectual development.

Spearman’s g

The degree to which various tests are correlated with g, or are “g-loaded,” can be determined by factor analysis, a statistical procedure for grouping items. [...] Most conventional tests of mental ability are highly g-loaded although they usually measure some admixture of other factors in addition to g, such as verbal, spatial, and memory abilities, as well as acquired information of a scholastic nature (Brody, 1992). Test scores with the g factor statistically removed have virtually no predictive power for scholastic performance. Hence, it is the g factor that is the “active ingredient.” The predictive validity of g applies also to performance in nearly all types of jobs. Occupations differ in their complexity and g demands as much as do mental tests, so as the complexity of a job increases, the better cognitive ability predicts performance on it (e.g., managers and professions 0.42 to 0.87, sales clerks and vehicle operators 0.27 to 0.37; see Hunter, 1986, Table 1; Hunter & Hunter, 1984).

Chapter 3 : Behavioral Genetics

The Heritability of Behavior

Anthropometric and Physiological Traits

Height, weight, and other physical attributes provide a point of comparison to behavioral data. Not surprisingly, they are usually highly heritable, with genes accounting for 50 to 90 percent of the variance. These results are found from studies of both twins and adoptees (e.g., Table 3.1). The genes also account for large portions of the variance in physiological processes such as rate of breathing, blood pressure, perspiration, pulse rate, and EEG-measured brain activity.

Obesity was studied in a sample of 540 42-year-old Danish adoptees selected so that the age and sex distribution was the same in each of four weight categories: thin, medium, overweight, and obese (Stunkard et al., 1986). Biological and adoptive parents were contacted and their current weight assessed. The weight of the adoptees was predicted from that of their biologic parents but not at all from that of the adoptive parents with whom they had been raised. The relation between biologic parents and adoptees was present across the whole range of body fatness — from very thin to very fat. Thus, genetic influences play an important role in determining human fatness, whereas the family environment alone has no apparent effect. This latter result, of course, is one that varies from popular views. Subsequent evidence shows significant genetic transmission of obesity in black as well as in white families (Ness, Laskarzewski, & Price, 1991).

Testosterone is a hormone mediating many bio-behavioral variables in both men and women. Its heritability was examined in 75 pairs of MZ twins and 88 pairs of DZ twins by Meikle, Bishop, Stringham, & West (1987). They found that genes regulated 25 to 76 percent of plasma content for testosterone, estradiol, estrone, 3 alpha-androstanediol glucuronide, free testosterone, luteinizing hormone, follicle stimulating hormone, and other factors affecting testosterone metabolism.

Activity Level

Several investigators have found activity level to be heritable from infancy onward (Matheny, 1983). In one study, activity in 54 identical and 39 fraternal twins aged 3 to 12 years was assessed with behaviors like “gets up and down” while “watching television” and “during meals” (Willerman, 1973). The correlation for identical twins was 0.88 and for fraternal twins was 0.59, yielding a heritability of 58 percent. An investigation of 181 identical and 84 fraternal twins from 1 to 5 years of age using parent ratings found correlations for a factor of zestfulness of 0.78 for identical and 0.54 for fraternal twins, yielding a heritability of 48 percent (Cohen, Dibble, & Grawe, 1977). Data from a Swedish sample aged 59 years and including 424 twins reared together and 315 twins reared apart showed the heritability for activity level in this older sample to be 25 percent (Plomin, Pedersen, McClearn, Nesselroade, & Bergeman, 1988).
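The first two heritability figures above are consistent with doubling the difference between the identical-twin and fraternal-twin correlations, a calculation often called Falconer's formula. The text does not say which method the original authors used, so treating it as Falconer's formula is an assumption, and `falconer_h2` is an illustrative name.

```python
def falconer_h2(r_mz: float, r_dz: float) -> float:
    """Rough heritability estimate from twin correlations.

    Assumption: identical (MZ) twins share all their genes, fraternal (DZ)
    twins share half on average, and both kinds of twins share their
    environments to the same degree.
    """
    return 2 * (r_mz - r_dz)


if __name__ == "__main__":
    # Correlations quoted in the text for the two childhood samples.
    print(round(falconer_h2(0.88, 0.59), 2))  # Willerman (1973)
    print(round(falconer_h2(0.78, 0.54), 2))  # Cohen, Dibble, & Grawe (1977)
```

The two calls give 0.58 and 0.48, matching the 58 percent and 48 percent quoted for those samples. The 25 percent figure for the Swedish sample comes from a design that also includes twins reared apart, which this simple formula does not cover.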

Altruism and Aggression

Several twin studies have been conducted on altruism and aggression. Loehlin and Nichols (1976) carried out cluster analyses of self-ratings made by 850 adolescent pairs on various traits. Clusters labeled kind, argumentative, and family quarrel showed the monozygotic twins to be about twice as much alike as the dizygotic twins, with heritabilities from 20 to 42 percent. Matthews, Batson, Horn, and Rosenman (1981) analyzed adult twin responses to a self-report measure of empathy and estimated a heritability of 72 percent. In the Minnesota adoption study of twins raised apart, summarized in Table 3.1, the correlations for 44 pairs of identical twins reared apart are 0.46 for aggression and 0.53 for traditionalism, a measure of following rules and authority (Tellegen et al., 1988).

In a study of 573 identical and fraternal adult twin pairs reared together, all of the twins completed separate questionnaires measuring altruistic and aggressive tendencies. The questionnaires included a 20-item self-report altruism scale, a 33-item empathy scale, a 16-item nurturance scale, and many items measuring aggressive dispositions. As shown in Table 3.2, 50 percent of the variance on each scale was associated with genetic effects, virtually 0 percent with the twin’s common environment, and the remaining 50 percent with each twin’s specific environment. When the estimates were corrected for unreliability of measurement, the genetic contribution increased to 60 percent (Rushton, Fulker, Neale, Nias, & Eysenck, 1986).

At 14 months of age, empathy was assessed in 200 pairs of twins by the child’s response to feigned injury by experimenter and mother (Emde et al., 1992). Ratings were based on the strength of concern expressed in the child’s face, the level of emotional arousal expressed in the child’s body as well as prosocial intervention by the child (e.g., comforting by patting the victim or bringing the victim a toy). About 36 percent of the variance was estimated to be genetic.

Attitudes

Although social, political and religious attitudes are often thought to be environmentally determined, a twin study by Eaves and Eysenck (1974) found that radicalism-conservatism had a heritability of 54 percent, tough-mindedness had a heritability of 54 percent, and the tendency to voice extreme views had a heritability of 37 percent. In a review of this and two other British studies of conservatism, Eaves and Young (1981) found for 894 pairs of identical twins an average correlation of 0.67 and for 523 fraternal twins an average correlation of 0.52, yielding an average heritability of 30 percent.

In a cross-national study, 3,810 Australian twin pairs reared together reported their response to 50 items of conservatism such as death penalty, divorce, and jazz (Martin et al., 1986). The heritabilities ranged from 8 percent to 51 percent (see Table 4.4, next chapter). Overall correlations of 0.63 and 0.46 were found for identical and fraternal twins, respectively, yielding a heritability of 34 percent. Correcting for the high assortative mating that occurs on political attitudes raised the overall heritability to about 50 percent. Martin et al. (1986) also replicated the analyses by Eaves and Eysenck (1974) on the heritability of radicalism and tough-mindedness.

Religious attitudes also show genetic influence. Although Loehlin and Nichols (1976) found no genetic influences on belief in God or involvement in organized religious activities in their study of 850 high school twins, when religiosity items were aggregated with other items, such as present religious preference, then a genetic contribution of about 20 percent became observable (Loehlin & Nichols, 1976, Table 4-3, Cluster 15). Using a more complete assessment battery, including five well-established scales of religious attitudes, interests and values, and estimates of heritability from twins reared apart as well as together, the Minnesota study estimated the genetic contribution to the variance in their instruments to be about 50 percent (Table 3.1; also Waller, Kojetin, Bouchard, Lykken, & Tellegen, 1990).

Criminality

The earliest twin study of criminality was published in 1929 in Germany by Johannes Lange. Translated into English in 1931, Crime as Destiny reported on the careers of a number of criminal twins, some of them identical, others fraternal, shortly after the distinction between the two kinds had become generally accepted. Lange compared the concordance rates for 13 monozygotic and 17 dizygotic pairs of twins in which at least 1 had been convicted of a criminal offense. Ten of the 13 monozygotic pairs (77 percent) were concordant, whereas only 2 of the 17 dizygotic pairs (12 percent) were concordant. A summary of Lange’s (1931) study and of the literature up to the 1960s was provided by Eysenck and Gudjonsson (1989). For 135 monozygotic twins the concordance rate was 67 percent and for 135 dizygotic twins, 30 percent.
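The concordance percentages in Lange's study follow directly from the pair counts given above. A minimal sketch of that arithmetic, with `concordance_rate` as an illustrative name:

```python
def concordance_rate(concordant_pairs: int, total_pairs: int) -> float:
    """Percentage of twin pairs in which both members show the outcome."""
    if total_pairs <= 0:
        raise ValueError("total_pairs must be positive")
    if not 0 <= concordant_pairs <= total_pairs:
        raise ValueError("concordant_pairs must lie between 0 and total_pairs")
    return 100 * concordant_pairs / total_pairs


if __name__ == "__main__":
    # Pair counts reported for Lange's sample.
    print(round(concordance_rate(10, 13)))  # monozygotic pairs
    print(round(concordance_rate(2, 17)))   # dizygotic pairs
```

Rounded to whole numbers, these are the 77 percent and 12 percent quoted for the monozygotic and dizygotic pairs.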

Among subsequent studies is an investigation of the total population of 3,586 male twin pairs born on the Danish Islands from 1881 to 1910, recording serious offenses only. For this nonselected sample, identical and fraternal twin concordances are 42 percent versus 21 percent for crimes against persons and 40 percent versus 16 percent for crimes against property (Christiansen, 1977). Three small studies carried out in Japan showed similar concordance rates to those in the West (see Eysenck & Gudjonsson, 1989: 97-99).

Replicating the concordance ratios based on official statistics are those from studies based on self-reports. Sending questionnaires by mail to 265 adolescent twin pairs, Rowe (1986) sampled the eighth through twelfth grades in almost all the school districts of Ohio. The results showed that identical twins were roughly twice as much alike in their criminal behavior as fraternal twins, the heritability being about 50 percent.

Converging with the twin work are the results from several American, Danish, and Swedish adoption studies. Children who were adopted in infancy were at greater risk for criminal convictions if their biological parents had been so convicted than if their adoptive parents had been. For example, in the Danish study, based on 14,427 adoptees, for 2,492 adopted sons who had neither adoptive nor biological criminal parents, 14 percent had at least one criminal conviction. For 204 adopted sons whose adoptive (but not biological) parents were criminals, 15 percent had at least one conviction. If biological (but not adoptive) parents were criminal, 20 percent (of 1,226) adopted sons had criminal records; if both biological and adoptive parents were criminal, 25 percent (of 143) adopted sons were criminals. In addition, it was found that siblings raised apart showed 20 percent concordance and that half-siblings showed 13 percent concordance while pairs of unrelated children reared together in the same adoptive families showed 9 percent concordance (Mednick, Gabrielli, & Hutchings, 1984).

Emotionality

The largest heritability study of emotional reactivity, or the speed of arousal to fear and anger, was carried out by Floderus-Myrhed, Pedersen, and Rasmuson (1980). They administered the Eysenck Personality Inventory to 12,898 adolescent twin pairs of the Swedish Twin Registry. The heritability for neuroticism was 50 percent for men and 58 percent for women. Another large twin study, carried out in Australia, involving 2,903 twin pairs, found identical and fraternal twin correlations of 0.50 and 0.23 for neuroticism (Martin & Jardine, 1986). The opposite side of the neuroticism continuum, emotional stability, as measured by the California Psychological Inventory’s Sense of Well-Being scale, is also found to have a significant heritability, both in adolescence and 12 years later (Dworkin et al., 1976).

The studies of twins raised apart substantiate the genetic contribution to a neuroticism “superfactor.” In the Minnesota study (Table 3.1), the correlation for the 44 MZA twins is 0.61 for the trait of stress reaction, 0.48 for alienation, and 0.49 for harm avoidance (Tellegen et al., 1988). In a Swedish study of 59-year-olds the correlation for emotionality in 90 pairs of identical twins reared apart is 0.30 (Plomin et al., 1988). Other adoption studies also confirm that the familial resemblance for neuroticism is genetically based. In a review of three adoption studies, the average correlation for nonadoptive relatives was about 0.15 and the average correlation for adoptive relatives was nearly zero, suggesting a heritability estimate of about 0.30 (Henderson, 1982).

Intelligence

It is the g factor that is the most heritable component of intelligence tests. In Bouchard et al.’s study (Table 3.1) the g factor, the first principal component extracted from several mental ability tests, had the highest heritability (78 percent). Similarly in Pedersen et al.’s (1992) study, the first principal component had a heritability of 80 percent whereas the specific abilities averaged around 50 percent.

Remarkably, the strength of the heritability varies directly as a result of a test’s g loading. Jensen (1983) found a correlation of 0.81 between the g loadings of the 11 subtests of the Wechsler Intelligence Scale for Children and heritability strength assessed by genetic dominance based on inbreeding depression scores from cousin marriages in Japan. Inbreeding depression is defined as a lowered mean of the trait relative to the mean in a non-inbred population and is especially interesting because it indicates genetic dominance, which arises when a trait confers evolutionary fitness.

Jensen took the figures on inbreeding depression from a study by Schull and Neel (1965) who calculated them from 1,854 7- to 10-year-old Japanese children. Since about 50 percent of the sample involved cousin marriages, it was possible to assess the inbreeding depression on each subtest, expressed as the percentage decrement in the score per 10 percent increase in degree of inbreeding. These were calculated after statistically controlling for child’s age, birth rank, month of examination, and eight different parental variables, mostly pertaining to SES. The complement of inbreeding depression was found by Nagoshi and Johnson (1986) who observed “hybrid vigor” in offspring of Caucasoid-Mongoloid matings in Hawaii.

Subsequently, Jensen (1987a) reported rank order correlations of 0.55 and 0.62 between estimates of genetic influence from two twin studies and the g loadings of the Wechsler Adult Intelligence Scale subtests, and P. A. Vernon (1989) found a correlation of 0.60 between the heritabilities of a variety of speed of decision time tasks and their relationship with the g loadings from a psychometric test of general intelligence. More detailed analyses showed that the relationships among the speed and IQ measures are mediated entirely by hereditary factors. Thus, there are common biological mechanisms underlying the association between reaction time and information-processing speed and mental ability (Baker, Vernon, & Ho, 1991).

Heritabilities for mental ability have been examined within black and Oriental populations. A study by Scarr-Salapatek (1971) suggested the heritability might be lower for black children than for white children. Subsequently, Osborne (1978, 1980) reported heritabilities of greater than 50 percent both for 123 black and for 304 white adolescent twin pairs. Japanese data for 543 monozygotic and 134 dizygotic twins tested for intelligence at the age of 12 gave correlations of 0.78 and 0.49 respectively, indicating a heritability of 58 percent (R. Lynn & Hattori, 1990).

Related to intelligence at greater than 0.50 are years of education, occupational status, and other indices of socioeconomic status (Jensen, 1980a). All of these have also been shown to be heritable. For example, a study of 1,900 pairs of 50-year-old male twins yielded MZ and DZ twin correlations of 0.42 and 0.21, respectively, for occupational status, and 0.54 and 0.30 for income (Fulker & Eysenck, 1979; Taubman, 1976). An adoption study of occupational status yielded a correlation of 0.20 between biological fathers and their adult adopted-away sons (2,467 pairs; Teasdale, 1979). A study of 99 pairs of adopted-apart siblings yielded a correlation of 0.22 (Teasdale & Owen, 1981). All of these are consistent with a heritability of about 40 percent for occupational status. Years of schooling also shows substantial genetic influence; for example, MZ and DZ twin correlations are typically about 0.75 and 0.50, respectively, suggesting that heritability is about 50 percent (e.g., Taubman, 1976).

Longevity and Health

Work on the genetics of longevity and senescence was pioneered by Kallman and Sander (1948, 1949). These authors carried out a survey in New York of over 1,000 pairs of twins aged 60 years or older and found that intra-pair differences for longevity, disease, and general adjustment to the aging process were consistently smaller for identical twins than for fraternal twins. For example, the average intra-pair difference in life span was 37 months for identical twins and 78 months for fraternal twins. In an adoption study of all 1,003 nonfamilial adoptions formally granted in Denmark between 1924 and 1947, age of death in the adult adoptees was predicted better by knowledge of the age of death in the biological parent than by knowledge of the age of death in the adopting parent (Sorensen, Nielsen, Andersen, & Teasdale, 1988).

Many individual difference variables associated with health are heritable. Genetic influences have been found for blood pressure, obesity, resting metabolic rate, behavior patterns such as smoking, alcohol use, and physical exercise, as well as susceptibility to infectious diseases. There is also a genetic component of from 30 to 50 percent for hospitalized illnesses in the pediatric age group including pediatric deaths (Scriver, 1984).

Psychopathology

Numerous studies have shown substantial genetic influences on reading disabilities, mental retardation, schizophrenia, affective disorders, alcoholism, and anxiety disorders. In a now classic early study, adopted-away offspring of hospitalized chronic schizophrenic women were interviewed at the average age of 36 and compared to matched adoptees whose birth parents had no known psychopathology (Heston, 1966). Of 47 adoptees whose biological parents were schizophrenic, 5 had been hospitalized for schizophrenia. None of the adoptees in the control group was schizophrenic. Studies in Denmark confirmed this finding and also found evidence for genetic influence when researchers started with schizophrenic adoptees and then searched for their adoptive and biological relatives (Rosenthal, 1972; Kety, Rosenthal, Wender, & Schulsinger, 1976). A major review of the genetics of schizophrenia has been presented by Gottesman (1991).

Alcoholism also runs in families such that about 25 percent of the male relatives of alcoholics are themselves alcoholics, as compared with less than 5 percent of the males in the general population. In a Swedish study of middle-aged twins who had been reared apart, twin correlations for total alcohol consumed per month were 0.71 for 120 pairs of identical twins reared apart and 0.31 for 290 pairs of fraternal twins reared apart (Pedersen, Friberg, Floderus-Myrhed, McClearn, & Plomin, 1984). A Swedish adoption study of males found that 22 percent of the adopted-away sons of biological fathers who abused alcohol were alcoholic (Cloninger, Bohman, & Sigvardsson, 1981).

Sexuality

A questionnaire study of twins found genetic influence on strength of sex drive, which in turn was predictive of age of first sexual intercourse, frequency of intercourse, number of sexual partners, and type of position preferred (Eysenck, 1976; Martin, Eaves, & Eysenck, 1977). Divorce, or the factors leading to it at least, is also heritable. Based on a survey of more than 1,500 twin pairs, their parents, and their spouses’ parents, McGue and Lykken (1992) calculated a 52 percent heritability. They suggested the propensity was mediated through other heritable traits relating to sexual behavior, personality, and personal values.

Perhaps the most frequently cited study of the genetics of sexual orientation is that of Kallman (1952), in which he reported a concordance rate of 100 percent among homosexual MZ twins. Bailey and Pillard (1991) estimated the genetic component to male homosexuality to be about 50 percent. They recruited subjects through ads in gay publications and received usable questionnaire responses from 170 twin or adoptive brothers. Fifty-two percent of the identical twins, 22 percent of the fraternal twins, and 11 percent of the adoptive brothers were found to be homosexual. The distribution of sexual orientation among identical co-twins of homosexuals was bimodal, implying that homosexuality is taxonomically distinct from heterosexuality.

Subsequently, Bailey, Pillard, Neale, and Agyei (1993) carried out a twin study of lesbians and found that here, too, genes accounted for about half the variance in sexual preferences. Of the relatives whose sexual orientation could be confidently rated, 34 (48 percent) of 71 monozygotic co-twins, 6 (16 percent) of 37 dizygotic co-twins, and 2 (6 percent) of 35 adoptive sisters were homosexual.

Sociability

In one large study, Floderus-Myrhed et al. (1980) gave the Eysenck Personality Inventory to 12,898 adolescent twin pairs of the Swedish Twin Registry. The heritability for extraversion, highly related to sociability, was 54 percent for men and 66 percent for women. Another large study of extraversion, involving 2,903 Australian twin pairs, found identical and fraternal twin correlations of 0.52 and 0.17, with a resultant heritability of 70 percent (Martin & Jardine, 1986). In a Swedish adoption study of middle-aged people, the correlation for sociability in 90 pairs of identical twins reared apart was 0.20 (Plomin et al., 1988).

Sociability and the related construct of shyness show up at an early age. In a study of 200 pairs of twins, Emde et al. (1992) found both sociability and shyness to be heritable at 14 months. Ratings of videotapes made of reactions to arrival at the home and the laboratory and other novel situations, such as being offered a toy, along with ratings made by both parents showed heritabilities ranging from 27 to 56 percent.

Values and Vocational Interests

Loehlin and Nichols’s (1976) study of 850 twin pairs raised together provided evidence for the heritability of both values and vocational interests. Values such as the desire to be well-adjusted, popular, and kind, or having scientific, artistic, and leadership goals were found to be genetically influenced. So were a range of career preferences including those for sales, blue-collar management, teaching, banking, literature, military, social service, and sports.

As shown in Table 3.1, Bouchard et al. (1990) reported that, on measures of vocational interest, the correlations for their 40 identical twins raised apart are about 0.40. Additional analyses from the Minnesota Study of Twins Reared Apart suggest the genetic contribution to work values is pervasive. One comparison of reared-apart twins found a 40 percent heritability for preference for job outcomes such as achievement, comfort, status, safety, and autonomy (Keller, Bouchard, Arvey, Segal, & Dawis, 1992). Another study of MZAs indicated a 30 percent heritability for job satisfaction (Arvey, Bouchard, Segal, & Abraham, 1989).

Chapter 4 : Genetic Similarity Theory

The Paradox of Altruism

The resolution of the paradox of altruism is one of the triumphs that led to the new synthesis called sociobiology. By a process known as kin selection, individuals can maximize their inclusive fitness rather than only their individual fitness by increasing the production of successful offspring by both themselves and their genetic relatives (Hamilton, 1964). According to this view, the unit of analysis for evolutionary selection is not the individual organism but its genes. Genes are what survive and are passed on, and some of the same genes will be found not only in direct offspring but in siblings, cousins, nephews/nieces, and grandchildren. If an animal sacrifices its life for its siblings’ offspring, it ensures the survival of common genes because, by common descent, it shares 50 percent of its genes with each sibling and 25 percent with each sibling’s offspring.

Thus, the percentage of shared genes helps determine the amount of altruism displayed. Social ants are particularly altruistic because of a special feature of their reproductive system that gives them 75 percent of their genes in common with their sisters. Ground squirrels emit more warning calls when placed near relatives than when placed near nonrelatives; “helpers” at the nest tend to be related to one member of the breeding pair; and when social groups of monkeys split, close relatives remain together. When the sting of the honey bee is torn from its body, the individual dies, but the bee’s genes, shared in the colony of relatives, survive.

Thus, from an evolutionary perspective, altruism is a means of helping genes to propagate. By being most altruistic to those with whom we share genes, we help copies of our own genes to replicate. This makes “altruism” ultimately “selfish” in purpose. Promulgated in the context of animal behavior, this idea became known as “kin-selection” and provided a conceptual breakthrough by redefining the unit of analysis away from the individual organism to its genes, for it is these that survive and are passed on.
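The shared-gene percentages used in this section can be reproduced with a short calculation. The sketch below assumes the standard path-counting rule for diploid, non-inbred relatives (each parent-offspring link halves the expected share) and the usual statement of Hamilton's rule, rB > C; neither formula is spelled out in the text, and the function names and the benefit and cost values are hypothetical illustrations.

```python
from fractions import Fraction


def relatedness(path_lengths):
    """Coefficient of relatedness by common descent.

    Each entry is the number of parent-offspring links along one path
    connecting the two relatives through a shared ancestor; every link
    halves the expected proportion of shared genes.
    """
    return sum(Fraction(1, 2) ** links for links in path_lengths)


# Full siblings: one path through the mother and one through the father.
full_sibling = relatedness([2, 2])
# A sibling's offspring: the same two paths, each one link longer.
sibling_offspring = relatedness([3, 3])
# First cousins: two paths through the shared grandparents.
first_cousin = relatedness([4, 4])


def hamilton_favors(r, benefit, cost):
    """Hamilton's rule: helping is favored when r * benefit > cost."""
    return r * benefit > cost


print(full_sibling, sibling_offspring, first_cousin)
# Hypothetical fitness units: a cost of 1 to the helper, a benefit of 5 to a niece.
print(hamilton_favors(sibling_offspring, benefit=5, cost=1))
```

The 75 percent figure for sisters in social insects does not follow from this diploid rule; it arises because males in those species are haploid, so sisters share all of their paternal genes.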

Kin Recognition in Humans

Building on the work of Hamilton (1964), Dawkins (1976), Thiessen and Gregg (1980), and others, the kin-selection theory of altruism was extended to the human case. Rushton et al. (1984) proposed that, if a gene can better ensure its own survival by acting so as to bring about the reproduction of family members with whom it shares copies, then it can also do so by benefiting any organism in which copies of itself are to be found. This would be an alternative way for genes to propagate themselves. Rather than merely protecting kin at the expense of strangers, if organisms could identify genetically similar organisms, they could exhibit altruism toward these “strangers” as well as toward kin. Kin recognition would be just one form of genetic similarity detection.

The implication of genetic similarity theory is that the more genes are shared by organisms, the more readily reciprocal altruism and cooperation should develop because this eliminates the need for strict reciprocity. In order to pursue a strategy of directing altruism toward similar genes, the organism must be able to detect genetic similarity in others. As described in the previous section, four such mechanisms by which this could occur have been considered in the literature.

Humans are capable of learning to distinguish kin from non-kin at an early age. Infants can distinguish their mothers from other women by voice alone at 24 hours of age, know the smell of their mother’s breast before they are six days of age, and recognize a photograph of their mother when they are 2 weeks old. Mothers are also able to identify their infants by smell alone after a single exposure at 6 hours of age, and to recognize their infant’s cry within 48 hours of birth (see Wells, 1987, for review).

Human kin preferences also follow lines of genetic similarity. For example, among the Ye’Kwana Indians of South America, the words “brother” and “sister” cover four different categories ranging from individuals who share 50 percent of their genes (identical by descent) to individuals who share only 12.5 percent of their genes. Hames (1979) has shown that the amount of time the Ye’Kwana spend interacting with their biological relatives increases with their degree of relatedness, even though their kinship terminology does not reflect this correspondence.

Anthropological data also show that in societies where certainty of paternity is relatively low, males direct material resources to their sisters’ offspring (to whom their relatedness is certain) rather than to their wives’ offspring (Kurland, 1979). An analysis of the contents of 1,000 probated wills reveals that after husbands and wives, kin received about 55 percent of the total amount bequeathed whereas non-kin received only about 7 percent; offspring received more than nephews and nieces (Smith, Kish, & Crawford, 1987).

Paternity uncertainty also exerts predictable influence. Grandparents spend 35 to 42 percent more time with their daughters’ children than with their sons’ children (Smith, 1981). Following a bereavement they grieve more for their daughters’ children than for their sons’ children (Littlefield & Rushton, 1986). Family members feel only 87 percent as close to the fathers’ side of the family as they do to the mothers’ side (Russell & Wells, 1987). Finally, mothers of newborn children and their relatives spend more time commenting on resemblances between the baby and the putative father than on resemblances between the baby and the mother (Daly & Wilson, 1982).

When the level of genetic similarity within a family is low, the consequences can be serious. Children who are unrelated to a parent are at risk; a disproportionate number of battered babies are stepchildren (Lightcap, Kurland, & Burgess, 1982). Children of preschool age are 40 times more likely to be assaulted if they are stepchildren than if they are biological children (Daly & Wilson, 1988). Also, unrelated people living together are more likely to kill each other than are related people living together. Converging evidence shows that adoptions are more likely to be successful when the parents perceive the child as similar to them (Jaffee & Fanshel, 1970).

Heritability Predicts Spousal Similarity

If people choose each other on the basis of shared genes, it should be possible to demonstrate that interpersonal relationships are influenced more by genetic similarity than by similarity attributable to a similar environment. A strong test of the theory is to observe that positive assortative mating is greater on the more heritable of a set of homogeneous items. This prediction follows because more heritable items better reflect the underlying genotype.

Stronger estimates of genetic influence have been found to predict the degree of matching that marriage partners have engaged in on anthropometric, attitudinal, cognitive, and personality variables. Thus, Rushton and Nicholson (1988) examined studies using 15 subtests from the Hawaii Family Study of Cognition and 11 subtests from the Wechsler Adult Intelligence Scale. With the Hawaii battery, genetic estimates from Koreans in Korea correlated positively with those from Americans of Japanese and European ancestry (mean r = 0.54, p < 0.01). With the Wechsler scale, estimates of genetic influence correlated across three samples with a mean r = 0.82.

Consider the data in Table 4.2 showing heritabilities predicting the similarity of marriage partners. Note, though, that many of the estimates of genetic influence in this table are based on calculations of midparent-offspring regressions using data from intact families, thereby combining genetic and shared-family environmental effects. The latter source of variance, however, is surprisingly small (Plomin & Daniels, 1987) and has not been found to add systematic bias. Nonetheless, it should be borne in mind that many of the estimates of genetic influence shown in Table 4.2 were calculated in this way.

Reported in Table 4.2 is a study by Russell, Wells, and Rushton (1985) who used a within-subjects design to examine data from three studies reporting independent estimates of genetic influence and assortative mating. Positive correlations were found between the two sets of measures (r = 0.36, p < 0.05, for 36 anthropometric variables; r = 0.13, p < 0.10, for 5 perceptual judgment variables; and r = 0.44, p < 0.01, for 11 personality variables). In the case of the personality measures, test-retest reliabilities over a three-year period were available and were not found to influence the results.

Another test of the hypothesis reported in Table 4.2 was made by Rushton and Russell (1985) using two separate estimates of the heritabilities for 54 personality traits. Independently and when combined into an aggregate, they predicted similarity between spouses (rs = 0.44 and 0.55, ps < 0.001). Rushton and Russell (1985) reviewed other reports of similar correlations, including Kamin’s (1978) calculation of r = 0.79 (p < 0.001) for 15 cognitive tests and DeFries et al.’s (1978) calculation of r = 0.62 (p < 0.001) for 13 anthropometric variables. Cattell (1982) too had noted that between-spouse correlations tended to be lower for the less heritable, more specific cognitive abilities (tests of vocabulary and arithmetic) than for the more heritable general abilities (g, from Progressive Matrices).

Also shown in Table 4.2 are analyses carried out using a between-subjects design. Rushton and Nicholson (1988) analyzed data from studies using 15 subtests from the Hawaii Family Study of Cognition (HFSC) and 11 subtests from the Wechsler Adult Intelligence Scale (WAIS); positive correlations were calculated within and between samples. For example, in the HFSC, parent-offspring regressions (corrected for reliability) using data from Americans of European ancestry in Hawaii, Americans of Japanese ancestry in Hawaii, and Koreans in Korea correlated positively with between-spouse similarity scores taken from the same samples and with those taken from two other samples: Americans of mixed ancestry in California and a group in Colorado. The overall mean r was 0.38 for the 15 tests. Aggregating across the numerous estimates to form the most reliable composite gave a substantially better prediction of mate similarity from the estimate of genetic influence (r = 0.74, p < 0.001). Similar results were found with the WAIS. Three estimates of genetic influence correlated positively with similarities between spouses based on different samples, and in the aggregate they predicted the composite of spouse similarity scores with r = 0.52 (p < 0.05).

Parenthetically, it is worth noting that statistically controlling for the effects of g in both the HFSC and the WAIS analyses led to substantially lower correlations between estimates of genetic influence and assortative mating, thus offering support for the view that marital assortment in intelligence occurs primarily with the g factor. The g factor tends to be the most heritable component of cognitive performance measures (chap. 3).

Chapter 6 : Race, Brain Size, and Intelligence

Intelligence Test Scores

Research on the academic accomplishments of Mongoloids in the United States continues to grow. Caplan, Choy, and Whitmore (1992) gathered survey and test score data on 536 school-age children of Indochinese refugees in five urban areas around the United States. Unlike some of the previously studied populations of “boat people,” these refugees had had limited exposure to Western culture, knew virtually no English when they arrived, and often had a history of physical and emotional trauma. Often they came with nothing more than the clothes they wore. All the children attended schools in low-income metropolitan areas. The results showed that whether measured by school grades or nationally normed standardized tests, the children were above average overall, “spectacularly” so in mathematics. [...]

An early study of the intelligence of “pure” African Negroids was carried out in South Africa by Fick (1929). He administered the American Army Beta Test, a nonverbal test designed for those who could not speak English, to 10- to 14-year-old white, black African, and mixed-race (mainly Negroid-Caucasoid hybrid) schoolchildren. In relation to the white mean of 100, based on more than 10,000 children, largely urban black African children obtained a mean IQ of 65, while urban mixed-race children obtained a mean IQ of 84. Thus South African mixed races obtained a mean IQ virtually identical to that of African-Americans. [...]

Since R. Lynn’s review, Owen (1992) has published another South African study. He gave Raven’s Standard Progressive Matrices to four groups of high school students. The results showed clear racial mean differences with 1,065 whites = 45.27 (SD = 6.34); 1,063 East Indians = 41.99 (SD = 8.24); 778 mixed races = 36.69 (SD = 8.89); and 1,093 pure Negroids = 27.65 (SD = 10.72). Thus, Negroids are from 1.5 to 2.7 standard deviations below the two Caucasoid populations and about 1 standard deviation lower than the mixed races. The four groups showed little difference in test reliabilities, the rank order of item difficulties, item discrimination values, and the loadings of items on the first principal component. Owen (1992: 149) concluded: “Consequently, from a psychometric point of view, the [test] is not culturally biased.”

R. Lynn also summarized the results of studies of the intelligence of Amerindians. The mean IQs have invariably been found to be somewhat below that of Caucasoids. The largest study is that of Coleman et al. (1966), which obtained a mean of 94, but a number of studies have reported means in the 70 to 90 range. The median of the 15 studies listed is 89, which Lynn took as a reasonable approximation, indicating that the Amerindian mean IQ falls somewhere between that of Caucasoids and Negroid-Caucasoid hybrids. The same intermediate position is occupied by Amerindians in performance on the Scholastic Aptitude Test (Wainer, 1988).

In addition, all the studies of Amerindians have found that they have higher visuospatial than verbal IQs. The studies listed are those where the Amerindians speak English as their first language, so this pattern of results is unlikely to be solely due to the difficulty of taking the verbal tests in an unfamiliar language. The verbal-visuospatial disparity is also picked up in the Scholastic Aptitude Test, where Amerindians invariably score higher on the mathematical test than on the verbal (Wainer, 1988).

Spearman’s g

Thus, Jensen (1985) examined 11 large-scale studies, each comprising anywhere from 6 to 13 diverse tests administered to large black and white samples aged 6 to 16½, with a total sample size of 40,000, and showed that a significant and substantial correlation was found in each between the tests’ g loadings and the mean black-white difference on the same tests. In a follow up, Jensen (1987b; Naglieri & Jensen, 1987) matched 86 black and 86 white 10- to 11-year-olds for age, school, sex, and socioeconomic status and tested them with the Wechsler Intelligence Scale for Children-Revised and the Kaufman Assessment Battery for Children for a total of 24 subtests. The results showed that the black-white differences on the various tests correlated r = 0.78 with the tests’ g loadings.

Decision Times

To further examine the racial difference in reaction times and their relationship to g, P. A. Vernon and Jensen (1984) gave a battery of eight tasks to 50 black and 50 white college students who were also tested on the Armed Services Vocational Aptitude Battery (ASVAB). Despite markedly different content, the reaction time measures correlated significantly at about 0.50 with the ASVAB in both the black and the white samples. Blacks had significantly slower reaction time scores than whites, as well as lower scores on the ASVAB. The greater the complexity of the reaction time task, measured in milliseconds, the stronger its relationship to the g factor extracted from the ASVAB, and the greater the magnitude of the black-white difference. [...]

Meanwhile, Jensen (1993; Jensen & Whang, 1993) used decision time tasks similar to those of R. Lynn to extend his test of Spearman’s hypothesis. Thus, Jensen (1993) gave 585 white and 235 black 9- to 11-year-old children from middle-class suburban schools in California a battery of 12 reaction time tasks based on the simple, choice, and oddman procedures. The response time loadings on psychometric g were estimated by their correlations with scores on Raven’s Progressive Matrices. In another procedure, the chronometric tasks assessed speed of retrieval of easy number facts such as addition, subtraction, or multiplication of single digit numbers. These have typically been learned before the children are 9 years old, and all children in the study were able to perform them correctly.

In both studies, Spearman’s hypothesis was borne out as strongly as in the previous studies using conventional psychometric tests. Blacks scored lower than whites on the Raven’s Matrices and were slower than whites in decision time. In addition, the size of the black-white difference on the decision time variables was directly related to the variables’ loadings on psychometric g. Moreover, when the response time was separated into a cognitive decision component and a physical movement component, blacks were found to be slower than whites on the cognitive part and faster than whites on the physical part.

Using the same procedures as in the study just described, Jensen and Whang (1993), also in California, compared 167 9- to 11-year-old Chinese American children with the 585 white children. On Raven’s Matrices there was a 0.32 standard deviation advantage to the Oriental children (about 5 IQ points), although they were lower in socioeconomic status. Also, compared to the white American children, the Chinese American children were faster in the cognitive aspects of information processing (decision time) but slower in the motor aspects of response execution (movement time).

Chapter 7 : Speed of Maturation, Personality, and Social Organization

Speed of Maturation

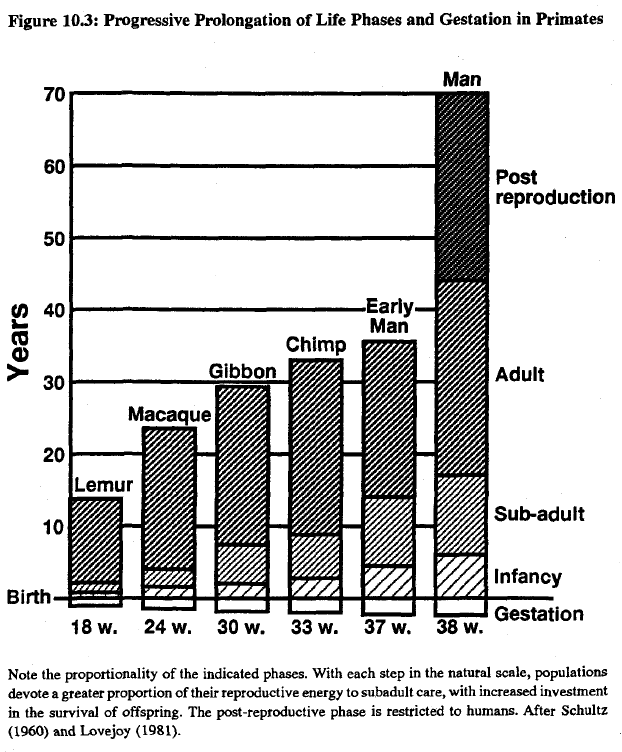

Table 7.1 summarizes the racial differences on several measures of life span development. In the United States, black babies have a shorter gestation period than white babies. By week 39, 51 percent of black children have been born while the figure for white children is 33 percent; by week 40, the figures are 70 and 55 percent respectively (Niswander & Gordon, 1972). Similar results have been obtained in Paris. Collating data over several years, Papiernik, Cohen, Richard, de Oca, and Feingold (1986) found that French women of European ancestry had longer pregnancies than those of mixed black-white ancestry from the French Antilles, or black African women with no European admixture. These differences persisted after adjustments for socioeconomic status.

Other observations, made within equivalent gestational age groups established by ultrasonography, find that black babies are physiologically more mature than white babies as measured by pulmonary function, amniotic fluid, fetal birth weight between 24 and 36 weeks of gestation, and weight-specific neonatal mortality (reviewed in Papiernik et al., 1986). I am unaware of data on gestation time for Mongoloids.

Black precocity continues throughout life. Revised forms of Bayley’s Scales of Mental and Motor Development administered in 12 metropolitan areas of the United States to 1,409 representative infants aged 1-15 months showed black babies scored consistently above whites on the Motor Scale (Bayley, 1965). This difference was not limited to any one class of behavior, but included: coordination (arm and hand); muscular strength and tonus (holds head steady, balances head when carried, sits alone steadily, and stands alone); and locomotion (turns from side to back, raises self to sitting, makes stepping movements, walks with help, and walks alone).

Similar results have been found for children up to about age 3 elsewhere in the United States, in Jamaica, and in sub-Saharan Africa (Curti, Marshall, Steggerda, & Henderson, 1935; Knobloch & Pasamanik, 1953; Williams & Scott, 1953; Walters, 1967). In a review critical of the literature, Warren (1972) nonetheless reported evidence for African motor precocity in 10 out of 12 studies. For example, Geber (1958:186) had examined 308 children in Uganda and reported an “all-round advance of development over European standards which was greater the younger the child.” Freedman (1974, 1979) found similar results in studies of newborns in Nigeria using the Cambridge Neonatal Scales (Brazelton & Freedman, 1971).

Mongoloid children are motorically delayed relative to Caucasoids. In a series of studies carried out on second- through fifth-generation Chinese-Americans in San Francisco, on third- and fourth-generation Japanese-Americans in Hawaii, and on Navajo Amerindians in New Mexico and Arizona, consistent differences were found between these groups and second- to fourth-generation European-Americans using the Cambridge Neonatal Scales (Freedman, 1974, 1979; Freedman & Freedman, 1969). One measure involved pressing the baby’s nose with a cloth, forcing it to breathe with its mouth. Whereas the average Chinese baby fails to exhibit a coordinated “defense reaction,” most Caucasian babies turn away or swipe at the cloth with the hands, a response reported in Western pediatric textbooks as the normal one.

On other measures including “automatic walk,” “head turning,” and “walking alone,” Mongoloid children are more delayed than Caucasoid children. Mongoloid samples, including the Navajo Amerindians, typically do not walk until 13 months, compared to the Caucasian 12 months and Negro 11 months (Freedman, 1979). In a standardization of the Denver Developmental Screening Test in Japan, Ueda (1978) found slower rates of motoric maturation in Japanese as compared with Caucasoid norms derived from the United States, with tests made from birth to 2 months in coordination and head lifting, from 3 to 5 months in muscular strength and rolling over, at 6 to 13 months in locomotion, and at 15 to 20 months in removing garments.

Eveleth and Tanner (1990) discuss race differences in terms of skeletal maturity, dental maturity, and pubertal maturity. Problems include poorly standardized methods, inadequate sampling, and many age/race/method interactions. Nonetheless, when many null and idiosyncratic findings are averaged out the data suggest that African-descended people have a faster tempo than others.

With skeletal maturity, the clearest evidence comes from the genetically timed age at which bone centers first become visible. Africans and African-Americans, even those with low incomes, mature faster up to 7 years. Mongoloids are reported to be more delayed at early ages than Caucasoids but later catch up, although there is some contradictory data. Subsequent skeletal growth varies widely and is best predicted by nutrition and socioeconomic status.

With dental development, the clearest pattern comes from examining the first phase of permanent tooth eruption. For beginning the first phase, a composite of first molar and first and second incisors in both upper and lower jaws showed an average for 8 sex-combined African series of 5.8 years compared to 6.1 years each for 20 European and 8 east Asian series (Eveleth & Tanner, 1990, Appendix 80, after excluding east Indians and Amerindian samples from the category “Asiatics”). For completion of the first phase, Africans averaged 7.6, Europeans 7.7, and east Asians 7.8 years. (The significance of this pattern will be discussed in chapter 10, where the predictive value of age of first molar for traits like brain size has been shown in other primate species.) No clear racial pattern emerged with the onset of deciduous teeth nor with the second phase of permanent tooth eruption.

In speed of sexual maturation, the older literature and ethnographic record suggested that Africans were the fastest to mature and Orientals slowest with Caucasian people intermediate (e.g., French Army Surgeon, 1898/1972). Despite some complexities this remains the general finding. For example, in the United States, blacks are more precocious than whites as indexed by age at menarche, first sexual experience, and first pregnancy (Malina, 1979). A national probability sample of American youth found that by age 12, 19 percent of black girls had reached the highest stages of breast and pubic hair development, compared to 5 percent of white girls (Harlan, Harlan, & Grillo, 1980). The same survey, however, found white and black boys to be similar (Harlan, Grillo, Cornoni-Huntley, & Leaverton, 1979).

Subsequently, Westney, Jenkins, Butts, and Williams (1984) found that 60 percent of 11-year-old black boys had reached the stage of accelerated penis growth in contrast to the white norm of 50 percent of 12.5-year-olds. This genital stage significantly predicted onset of sexual interest, with over 2 percent of black boys experiencing intercourse by age 11. While some surveys find that Oriental girls enter puberty as early as whites (Eveleth & Tanner, 1990), others suggest that in both physical development and onset of interest in sex, the Japanese, on the average, lag one to two years behind their American counterparts (Asayama, 1975).

Mortality Rates

Black babies in the United States show a greater mortality rate than white babies. In 1950, a black infant was 1.6 times as likely to die as a white infant. By 1988, the relative risk had increased to 2.1. Controlling for some maternal risk factors associated with infant mortality or premature birth, such as age, parity, marital status, and education, does not eliminate the gap between blacks and whites within those risk groups. For instance, in the general population, black infants with normal birth weights have almost twice the mortality of their white counterparts.

One recent study examined infants whose parents were both college graduates in a belief that such a study would eliminate obvious inequalities in access to medical care. The researchers compared 865,128 white and 42,230 black children but they found that the mortality rate among black infants was 10.2 per 1,000 live births as against 5.4 per 1,000 among white infants (Schoendorf, Hogue, Kleinman, & Rowley, 1992).

The reason for the disparity appears to be that the black women give birth to greater numbers of low birth weight babies. When statistics are adjusted to compensate for the birth weight of the babies, the death rates for the two groups become virtually identical. Newborns who are not underweight, born to black and white college-educated parents, had an equal chance of surviving the first year. Thus, in contrast to black infants in the general population, black infants born to college-educated parents have higher mortality rates than similar white infants only because of their higher rates of low birth weight.

Mental Durability

Indices of social breakdown are also to be gained from figures of those confined to mental institutions or who are otherwise behaviorally unstable. Most of the data to be reviewed come from the United States. In 1970, 240 blacks per 100,000 population were confined to mental institutions, compared with 162 whites per 100,000 population (Staples, 1985). Blacks also use community mental health centers at a rate almost twice their proportion in the general population. The rate of drug and alcohol abuse is much greater among the black population, based on their overrepresentation among patients receiving treatment services. Moreover, it is estimated that over one-third of young black males in the inner city have serious drug problems (Jaynes & Williams, 1989).

Kessler and Neighbors (1986) have demonstrated, using cross-validation on eight different surveys encompassing more than 20,000 respondents, that the effect of race on psychological disorders is independent of class. They observed an interaction between race and class such that the true effect of race was suppressed and the true effect of social class was magnified in models that failed to take the interaction into consideration. Again, in contrast, Orientals are underrepresented in the incidence of mental health problems (P. E. Vernon, 1982).

Other Variables

Blacks have deeper voices than whites. In one study, Hudson and Holbrook (1982) gave a reading task to 100 black men and 100 black women volunteers ranging in age from 18 to 29 years. The fundamental vocal frequencies were measured and compared to white norms. The frequency for black men was 110 Hz, lower than the 117 Hz for white men, and the frequency for black women was 193 Hz, lower than the frequency of 217 Hz for white women.

Differences in bone density between blacks and whites have been noted at a variety of ages and skeletal sites and remain even after adjusting for body mass (Pollitzer & Anderson, 1989). Racial differences in bone begin even before birth. Divergence in the length and weight of the bones of the black and white fetus is followed by greater weight of the skeleton of black infants compared with white infants. Blacks have not only greater skeletal calcium content, but also greater total body potassium and muscle mass. These findings are important for osteoporosis and fractures, especially in elderly people.

Body structure differences likely account for the differential success of blacks at sporting events. Blacks are disproportionately successful in sports involving running and jumping but not at all successful at sports such as swimming. For example, in the 1992 Olympic Games in Barcelona, blacks won every men’s running race. On the other hand, no black swimmer has ever qualified for the U.S. Olympic swim team. The bone density differences mentioned above may be a handicap for swimming.

The physique and physiology of blacks may give them a genetic advantage in running and jumping, as discussed in Runner’s World by long-time editor Amby Burfoot (1992). For example, blacks have less body fat, narrower hips, thicker thighs, longer legs, and lighter calves. From a biomechanical perspective, this is a useful package. Narrow hips allow for efficient, straight-ahead running. Strong quadriceps muscles provide horsepower, and light calves reduce resistance.

With respect to physiology, West Africans are found to have significantly more fast-twitch fibers and anaerobic enzymes than whites. Fast-twitch muscle fibers are thought to confer an advantage in explosive, short duration power events such as sprinting. East and South African blacks, by contrast, have muscles that provide great endurance by producing little lactic acid and other products of muscle fatigue.

A number of direct performance studies have shown a distinct black superiority in simple physical tasks such as running and jumping. Often, the subjects in these studies were very young children who had no special training. Blacks also have a significantly faster patellar tendon reflex time (the familiar knee-jerk response) than white students. Reflex time is obviously an important variable for sports that require lightning reflexes. It would be interesting to know if the measures on which blacks performed best were the ones on which Orientals performed poorest, and vice versa. Do reflex times and percentage of fast-twitch muscle show a racial gradient, and is it one opposite to that of cognitive decision time? Is this ultimately a physiological tradeoff?

Chapter 8 : Sexual Potency, Hormones, and AIDS

Reproductive Potency

The average woman produces one egg every 28 days in the middle of the menstrual cycle. Some women, however, have shorter cycles than others and some produce two eggs in a cycle. Both events translate into greater fecundity because of the greater opportunities they provide for a conception. Occasionally double ovulation results in the birth of dizygotic (two-egg) twins.

The races differ in the rate at which they double ovulate. Among Mongoloids, the frequency of dizygotic twins per 1,000 births is less than 4, among Caucasoids the rate is 8 per 1,000, and among Negroids the figure is greater than 16 per 1,000, with some African populations having twin frequencies of more than 57 per 1,000 (Bulmer, 1970). Recent reviews of twinning rates in the United States (Allen, 1988) and Japan (Imaizumi, 1992) confirm the racial differences. Note that the frequency of monozygotic twinning is nearly constant at about 4 per 1,000 in all groups. Monozygotic twinning is the result of a single fertilized egg splitting into two identical parts.

The frequency of three-egg triplets and four-egg quadruplets shows a comparable racial ordering. For triplets, the rate per million births among Mongoloids is 10, among Caucasoids 100, and among Negroids 1,700; and for quadruplets, per million births, among Mongoloids 0, among Caucasoids 1, and among Negroids 60 (Allen, 1987; Nylander, 1975). Data from racially mixed matings show that multiple births are largely determined by the race of the mother, independently of the race of the father, as found in Mongoloid-Caucasoid crosses in Hawaii, and Caucasoid-Negroid crosses in Brazil (Bulmer, 1970).

Intercourse Frequency and Attitudes

Racial differences exist in frequency of sexual intercourse. Examining Hofmann’s (1984) review of the extent of premarital coitus among young people around the world, Rushton and Bogaert (1987) categorized the 27 countries by primary racial composition and averaged the figures. The results showed that African adolescents are more sexually active than Europeans, who are more sexually active than Asians (see Table 8.3). While some variation occurs from country to country, consistency is found within groups. As is typical of such surveys, young men report a greater degree of sexual experiences than young women (Symons, 1979). It is clear from Table 8.3, however, that the population differences are replicable across sex, with the men of the more restrained group having less experience than the women of the less restrained.

A confirmatory study was carried out in Los Angeles which held the setting constant and fully sampled the ethnic mix. Of 594 adolescents and young adults, 20 percent were classified as Oriental, 33 percent as white, 21 percent as Hispanic, and 19 percent as black. The average age at first intercourse was 16.4 for Orientals and 14.4 for blacks, with whites and Hispanics intermediate, and the percentage sexually active was 32 percent for Orientals and 81 percent for blacks, with whites and Hispanics intermediate (Moore & Erickson, 1985).

A Youth Risk Behavior Survey with a reading level for 12-year-olds was developed by the Centers for Disease Control in the United States to examine health-risk behaviors including sexual behaviors. In 1990, a representative sample of 11,631 students in grades 9-12 (ages 14 to 17) from across the United States anonymously completed the questionnaire during a 40-minute class period. Students were asked whether they had ever had sexual intercourse, with how many people they had had sexual intercourse, and with how many people they had had sexual intercourse during the past 3 months. They were also asked about their use of condoms and other methods of preventing pregnancy (Centers for Disease Control, 1992a).