This article summarizes the experimental studies on working memory training: its near- and far-transfer effects (that is, its generalizability to non-trained and less similar tests), the persistence of gains over time, and the reasons why such improvement is not expected to happen.

The second part of the article is organized as follows : I) negative result, II) ambiguous result, III) positive result, IV) meta-analyses. In order to be classified I) the study must either fail to prove far transfer effect or the sustained IQ gain if follow-up is available. To be classified II) the study must reveal the far transfer effect in some (but not all) of the IQ tests, unless the follow-up result (if available) is negative. To be classified III) the study must fulfill all the conditions except the availability of data on follow-up and predictive validity regarding real-world outcomes.

(Last update : September 2014)

CONTENT

1. Summary of pitfalls in the studies.

2. Studies.

I) Negative findings.

II) Ambiguous findings.

III) Positive findings.

IV) Meta-analyses.

3. Why IQ gains are unlikely.

1. Summary of pitfalls in the studies.

A well known problem in this kind of research is the Hawthorne effect, also called placebo effect. It is the phenomenon that occurs when the members of the experimental groups have improved motivation for the outcome of the experiments because they are the center of attention. This results in improvement in the outcome, not because of the experiment but because of the expectancy effect (Shipstead, 2012b, p. 191; see also Shipstead et al., 2010, pp. 251-252, for a thorough discussion). Melby-Lervåg & Hulme (2012) show that the score difference is much larger when the control group is no-contact rather than the active (or placebo) group.

Non-random assignment can bias the results. One well-known phenomenon is regression to the mean (Spitz, 1986, pp. 47, 62, 67-68, 70, 180, 187). If group #1 initially has a higher score than group #2, then the members of group #2 will show greater gains at the retest sessions simply because low-scoring subjects tend to perform better at post-test and high-scoring subjects tend to perform worse. This is mainly due to unreliability (Jensen, 1980, p. 276). True score estimation (e.g., through a latent variable approach) is one solution. Ideally, the two groups should have equal means and variances (or SDs).
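The link between unreliability and regression to the mean can be illustrated with a small simulation. This is only a minimal sketch, not taken from any of the cited studies: the reliability of 0.7, the sample size and the cut-offs of ±1 SD are assumptions chosen for illustration. No subject changes in true score between the two sessions, yet the low scorers appear to gain and the high scorers appear to lose.

```python
import random
import statistics

def simulate_retest(n=20000, reliability=0.7, seed=1):
    """Simulate pre/post scores with NO true change between sessions.

    Observed score = true score + independent error.
    All parameter values are illustrative assumptions.
    """
    rng = random.Random(seed)
    true_sd = reliability ** 0.5          # SD of true scores
    error_sd = (1 - reliability) ** 0.5   # SD of errors (observed variance = 1)
    pre, post = [], []
    for _ in range(n):
        t = rng.gauss(0, true_sd)
        pre.append(t + rng.gauss(0, error_sd))
        post.append(t + rng.gauss(0, error_sd))
    return pre, post

def mean_gain(pre, post, keep):
    """Average post-minus-pre gain among subjects whose pre-test passes `keep`."""
    gains = [b - a for a, b in zip(pre, post) if keep(a)]
    return statistics.mean(gains)

pre, post = simulate_retest()
low_gain = mean_gain(pre, post, lambda x: x < -1)   # low scorers at pre-test
high_gain = mean_gain(pre, post, lambda x: x > 1)   # high scorers at pre-test
print(f"low scorers:  {low_gain:+.2f} SD")
print(f"high scorers: {high_gain:+.2f} SD")
```

With a perfectly reliable test (reliability=1.0) both gains would be zero, which is why true score estimation removes the artifact.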

Moody (2009) faulted Jaeggi et al. (2008) for applying speeded tests in the transfer task. In the study, the group with least training (8 days) was tested on Raven APM but the 3 other training groups (12, 17, 19 days) on the BOMAT. Both are progressive tests, i.e., easier items at the beginning, harder items at the end of the test. The BOMAT is a 29-item test in which subjects are supposed to be allowed 45 min to complete. But Jaeggi et al. (2008) reduced the allotted time from 45 to 10 minutes. The test is not challenging anymore, as the hardest items are not attempted (or they will be guessed instead). The time restriction has limited the opportunity to learn about the test in the process of taking it. Jaeggi et al. (2008, 2010) cite an early study showing that the scores in speeded and non-speeded versions of the test are highly correlated, a view shared by Jensen (1980, p. 135). However, the structures of correlations and means are not equivalent, i.e., the gains can still be different depending on the versions. Such correlation only means that those people who score high(er) on the speeded version will be those who also score high(er) on the non-speeded version. Another defense comes from Chooi & Thompson (2012) and Jaeggi et al. (2014) who both agreed that timed tests can avoid ceiling effects. This claim is odd. Unless the test is made too easy, the ceiling effect is unlikely. One way to examine such an effect is to display histograms, but no single study even cares about that. The problem with speeded tests, as Jensen (1980, pp. 135-136; 1998, pp. 224-225, 236) explained, is that the test does not measure intelligence very well, and is more a personality factor than a cognitive factor. Jensen (1980) has written that “Practice effects are about 10 to 25 percent less for untimed tests than for speeded tests.” (p. 590). 
This may be consistent with the idea that timed testing tends to measure something other than just intelligence, hence the explanation for the greater gain. If researchers worry (as they do) about practice effects, then they should find a way to get rid of them. A related problem with limiting the testing time is the possibility of guessing the answers to get higher scores. In that case, improvements can’t be taken as true score increases. This should be investigated through IRT modeling (e.g., using the ltm package of R). In principle, timed tests allow the subjects less time to think and to engage in mental manipulation of the stimuli presented to them (Jensen, 1980, p. 231). This has serious consequences for studies of WM training.
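To make the guessing problem concrete, here is a minimal sketch of the three-parameter logistic (3PL) item response function, the kind of IRT model in which guessing appears as a lower asymptote. It is written in Python for illustration rather than with ltm, and the item parameters (a hard item with 8 response options) are hypothetical, not estimates from any of the studies.

```python
import math

def p_correct_3pl(theta, a, b, c):
    """3PL item response function.

    theta: ability, a: discrimination, b: difficulty,
    c: guessing parameter (probability of success by chance alone).
    """
    return c + (1 - c) / (1 + math.exp(-a * (theta - b)))

# Hypothetical hard item with 8 response options (chance level = 1/8).
hard_item = dict(a=1.5, b=2.0, c=1 / 8)

for theta in (-2.0, 0.0, 2.0):
    p = p_correct_3pl(theta, **hard_item)
    print(f"theta={theta:+.1f}  P(correct)={p:.3f}")
```

For a low-ability subject the probability of success stays close to the chance level c, so a few extra correct answers on hard items that were merely guessed tell us little about a change in theta.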

To be effective, the training task must be adaptive, i.e., the difficulty is adjusted according to the individual’s performance by increasing/decreasing the number of items that the user has to remember and by increasing/decreasing the difficulty of the item sequences. Cogmed explains it this way :

For example, if the user views a 4 x 4 grid of dots on the computer screen and 3 of the dots light up in red, the user must hold in mind the order in which the dots lit up and then repeat back the sequence by clicking on the dots at the end of the stimuli presentation. If they are correct, they may be presented with 3 more dots to remember, but in a more complicated arrangement. If they correctly remember this series, they may be presented with 4 dots to remember. If they correctly remember this series, they may be presented with 4 more dots to remember but in a more complicated series and so on. However, if they incorrectly remember the sequence, they will prompted to remember only 3 items in the next trial. It is in this manner that Cogmed Working Memory Training adapts on an individualized basis to challenge each user’s particular WM capacity.

The unique presentation of stimuli in adaptive training demands that the user actively maintain the items and thus, prevents automation. The user must simultaneously maintain increasingly longer/shorter and more/less difficult sequences of stimuli, hold the stimuli representation in mind during a short delay before responding and must continue this process repeatedly. When the user can no longer hold in mind a certain number of items in a certain sequence, they have reached their WM capacity.

If Cogmed were not adaptive, one might expect the user to become increasingly faster and better practiced at responding to the stimuli - simply repeating the same number of items over and over - but, WM capacity would not be challenged.
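The adaptive logic described in the passage quoted above can be sketched as a simple staircase. This is not Cogmed's actual algorithm, which is not given in the quoted text; the `Level` class, the `min_span` floor and the rule that any error drops the span by one item are assumptions made for illustration.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Level:
    span: int             # number of items to remember
    complex_layout: bool  # True = harder arrangement at the same span

def next_level(level, correct, min_span=2):
    """Return the difficulty of the next trial (hypothetical rule)."""
    if not correct:
        # An error leads to one item fewer, in the simple arrangement.
        return Level(max(min_span, level.span - 1), False)
    if not level.complex_layout:
        # Same number of items, but a more complicated arrangement.
        return Level(level.span, True)
    # Complex arrangement mastered: add one item.
    return Level(level.span + 1, False)

def run(start, responses):
    """Apply the rule to a sequence of correct/incorrect responses."""
    path = [start]
    for correct in responses:
        path.append(next_level(path[-1], correct))
    return path

path = run(Level(3, False), [True, True, True, False])
for lvl in path:
    print(lvl)
```

Starting from 3 items, three correct trials followed by one error reproduce the sequence in the quote: 3 items, 3 items in a harder arrangement, 4 items, 4 items in a harder arrangement, then back to 3 items.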

Another factor that needs to be considered is the age of participants. Gains in old people cannot be generalized to young people, for example. Schmiedek et al. (2010) argue that we must expect less gain in older people because cognitive plasticity is reduced at late age.

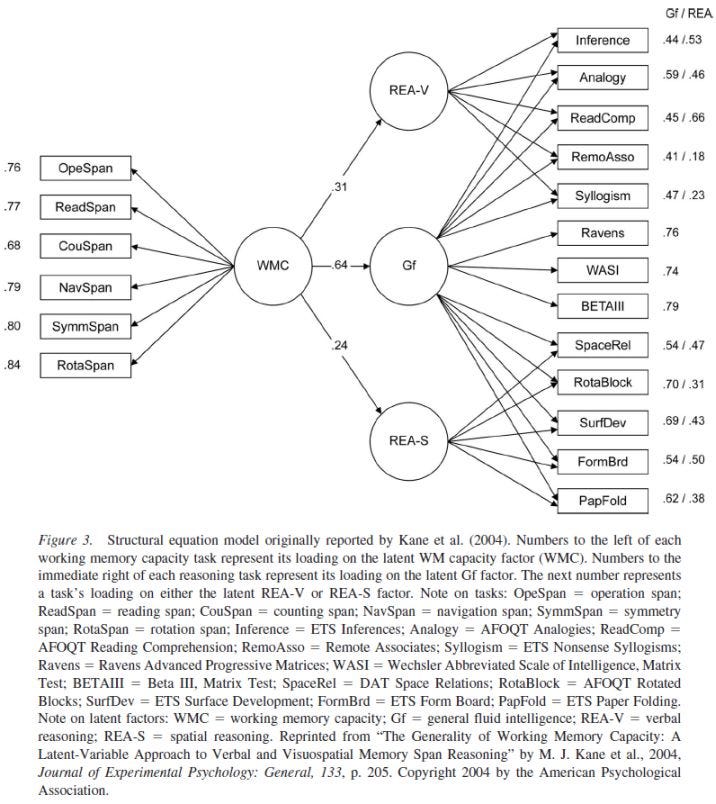

Shipstead et al. (2012a) recommend using a diverse battery of cognitive tests (p. 7). If the training effect is apparent, it must be so in all domains (verbal, fluid, spatial, etc.). But most studies up to 2011 or 2012 use a single task for testing the transfer effect. Scores on any single test are driven both by the ability of interest as well as other systematic and random influences. They explain (p. 14) that far transfer can’t be established until near-transfer tasks are given, and this was not done in Jaeggi et al. (2011) for example. They are indeed cautious (p. 17) about the study of Schmiedek et al. (2010) in which near transfer was found for WM tasks similar to tasks found in the training program (including n-back) while near transfer to untrained complex span tasks was not found. This suggests that the Gf gain (among young adults) may not be due to WM training. They believe (p. 17) the studies lack an analysis of transfer effect from IQ to scholastic achievement tests. They also argue (p. 7) that if Gf and WMC are imperfectly correlated, some changes (gains) in Gf may be due to influences other than WMC gains. They cite Kane et al. (2004) CFA study, showing a path correlation of 0.64 between WMC and Gf.

One factor that can seriously attenuate the gains is ceiling effect (Redick et al., 2013). In most studies, the tests used for transfer effect are considerably shortened. When this happens, the score at the pre-test for many subjects can reach a level near the maximum possible. In that situation, the gains at post-test will appear very unlikely because the score can’t be higher anymore. Nevertheless, if the test is not made too easy, ceiling effect is unlikely. A histogram can help to examine this possibility. A ceiling effect would be apparent if the distribution is not normal but negatively skewed.
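A check of this kind takes only a few lines. The sketch below computes the skewness of a score distribution and the share of subjects at the maximum score, and prints a crude text histogram. The pre-test scores are made up for illustration (a hypothetical 10-item shortened test); they do not come from any of the studies discussed here.

```python
import statistics
from collections import Counter

def skewness(scores):
    """Skewness as the mean cubed z-score (population form)."""
    m = statistics.fmean(scores)
    sd = statistics.pstdev(scores)
    return sum(((x - m) / sd) ** 3 for x in scores) / len(scores)

def share_at_ceiling(scores, max_score):
    """Proportion of subjects who obtained the maximum possible score."""
    return sum(s == max_score for s in scores) / len(scores)

# Hypothetical pre-test scores on a shortened 10-item test.
pre_test = [10, 10, 9, 10, 8, 10, 9, 10, 7, 10, 9, 10, 5, 10, 8]

counts = Counter(pre_test)
for score in range(0, 11):
    print(f"{score:2d} | {'#' * counts[score]}")

print(f"skewness: {skewness(pre_test):.2f}")
print(f"share at ceiling: {share_at_ceiling(pre_test, 10):.0%}")
```

In this made-up sample more than half of the subjects are already at the maximum and the skewness is strongly negative, which is the signature of a ceiling effect: these subjects have no room left to show a gain at post-test.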

Shortened tests have lower reliability, and this obviously reduces the score differences. A related procedure consists of splitting the test into odd- and even-numbered items in order to create 2 (or more) alternative forms of the test, each containing one half (or third) of the total number of items, so as to avoid giving the exact same items at the 2 (or more) sessions. One half is given at pre-test, the other half at post-test. The scores on the 2 halves can be correlated, which produces an estimate of the reliability of each of the two halves. This is called the split-half reliability estimate. This seems to be a somewhat common practice, and it is not a problem in itself. The real trouble is the low number of items. Having a broader battery does nothing to remedy this problem because each test will have a low effect size. But applying a formula for unreliability correction, summing the tests or building a latent factor certainly helps.
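The standard formulas involved are short enough to write down. The sketch below gives the Spearman-Brown formula (reliability as a function of test length), the classical correction for attenuation of a correlation, and the analogous correction of a standardized mean difference. All numerical inputs are illustrative assumptions, not values reported in the studies.

```python
def spearman_brown(reliability, k=2.0):
    """Predicted reliability when test length is multiplied by k.

    k=2 steps a half-test correlation up to the full test;
    k=0.5 predicts the reliability of a test cut in half.
    """
    return k * reliability / (1 + (k - 1) * reliability)

def disattenuate_r(r_xy, rel_x, rel_y):
    """Correlation corrected for unreliability in both measures."""
    return r_xy / (rel_x * rel_y) ** 0.5

def disattenuate_d(d_observed, reliability):
    """Standardized mean difference corrected for unreliability."""
    return d_observed / reliability ** 0.5

# Illustrative values only.
print(round(spearman_brown(0.60), 3))            # two halves correlating 0.60
print(round(spearman_brown(0.90, k=0.5), 3))     # halving a test with reliability 0.90
print(round(disattenuate_r(0.40, 0.75, 0.80), 3))
print(round(disattenuate_d(0.30, 0.75), 3))
```

Halving a test with a reliability of 0.90 brings it down to about 0.82, and an observed d of 0.30 on a measure with a reliability of 0.75 corresponds to about 0.35 after correction. The correction does not add information, however; it only rescales the estimate, so the confidence interval widens accordingly.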

Jaeggi et al. (2014) note that researchers usually pay the participants. They argue this has the effect of reducing the intrinsic motivation of test-takers, which has a detrimental effect on performance. Motivation, indeed, may affect test scores. The study by Duckworth et al. (2011) shows that motivation is related to higher scores. This claim is highly problematic, however. The problem is threefold. IQ is supposed to measure intelligence, e.g., the ability to understand the meaning of the questions. Such a test is not designed to measure external factors such as stress or motivation. These are nuisance factors that depend on situation and context, whereas intelligence (the construct) is not expected to depend on the context in which the test is given. If the IQ gains depend greatly on motivation, this means that most of the gains are not on g (true ability) but are the product of test-taking attitude. Of course, one can easily conceive that higher motivation is likely to be correlated with school achievement, and that the latter can boost cognitive development, but this is irrelevant for a study with only a few months of follow-up. Furthermore, this argument would amount to saying that it is school-related background that is responsible for higher gains, and not the dual n-back experiments. A worse problem comes from the presence of near transfer without far transfer. If motivation only acts to improve scores, it can’t possibly account for a lack of far-transfer effect.

A related argument by Jaeggi et al. (2014) is that a positive belief of IQ malleability, or expectancy about the experiment, causes a larger gain and retention over time. The problem is similar to that related to motivation. If score gain is situation-specific, it won’t translate to latent gain. The ability to take the test is different from the ability to solve complex tasks.

For the same reason, a sustained belief among subjects that the experiment has yielded benefits in terms of cognition can explain part of the persistence of the IQ gain in the follow-up period. When taking the test, the subjects will be reminded of their past experiments and, by the same token, of their high beliefs and expectancy. The effect of such a test-taking attitude (motivation) may easily boost the observed scores. By way of comparison, it has been found that when black people are subjected to implicit cues of racial stereotypes, their observed scores are reduced, and these depressed scores have been accompanied by measurement bias.

Colom et al. (2013) explain that differences in test difficulty across occasions can account for artificial increases or declines in scores at the re-test. A decline in score is unexpected given that the retest effect is a well-known phenomenon, although devoid of g. Such IQ gain is due to the test becoming easier over time. They recommend using IRT, which has the benefit of equating for the difficulty (b) parameter, and also of reducing the problem of measurement error raised by Jaeggi et al. (2014). However, this is unlikely to be a problem if the tests administered are the same across occasions.

Sprenger et al. (2013) emphasize the importance of having a diverse battery of transfer tests. One commonly used transfer test is a measure of fluid IQ. But if the gain is general (g), we must expect non-fluid IQ tests to show similar improvement. In Jaeggi et al. (2008, 2011) there were only fluid tests. Another, related issue is that Gf tests such as the Raven rely heavily on working memory. Training on WM would indirectly train on the Raven and the like.

One way to evaluate the hypothesis that the score gain is g-loaded is to correlate the gains with the g-loadings derived from factor analysis. Another way to test the hypothesis is to compute the intercorrelation across the test at each occasion. If the correlations diminish, it suggests higher improvement in more narrow abilities. Colom et al. (2010) perform such analyses but this is rarely attempted.
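The first approach amounts to correlating two vectors, one value per test. Below is a minimal sketch using only the Python standard library; the g-loadings and gains are hypothetical numbers for a battery of 4 tests, not results from Colom et al. (2010) or any other study.

```python
def pearson(x, y):
    """Pearson correlation between two equal-length lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5

def ranks(values):
    """Ranks starting at 1, with ties given their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            result[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return result

def spearman(x, y):
    """Spearman correlation = Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))

# Hypothetical battery of 4 tests: g-loading and pre/post gain (d) of each test.
g_loadings = [0.75, 0.70, 0.60, 0.50]
gains = [0.05, 0.10, 0.25, 0.30]

print(f"Pearson:  {pearson(g_loadings, gains):.2f}")
print(f"Spearman: {spearman(g_loadings, gains):.2f}")
```

A negative correlation, as in this made-up example, would mean that the least g-loaded tests gain the most. With only a handful of tests, though, a single test can reverse the sign, so such a result should be read with caution.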

Lastly, a big problem often ignored is the high drop-out rate for these experiments (e.g., Chooi & Thompson, 2012; Jaeggi et al., 2014; Redick et al., 2013). Typically, the participants who abandon the experiments most likely have lower IQ, i.e., those who needed IQ gains the most.

2. Studies.

I) Negative findings.

Improving fluid intelligence with training on working memory

Jaeggi et al. (2008) is a widely known study, cited all too often as an illustration that working memory training can boost (Gf) IQ. There were 70 college students, with mean age=25, equally split (35-35) into experimental and control groups. The training task is the dual n-back, which consists of two independent sequences or tasks that are presented simultaneously. One is auditory, and the other one is visual.

For the training task, we used the same material as described by Jaeggi et al. (33), which was a dual n-back task where squares at eight different locations were presented sequentially on a computer screen at a rate of 3 s (stimulus length, 500 ms; interstimulus interval, 2,500 ms). Simultaneously with the presentation of the squares, one of eight consonants was presented sequentially through headphones. A response was required whenever one of the presented stimuli matched the one presented n positions back in the sequence. The value of n was the same for both streams of stimuli. There were six auditory and six visual targets per block (four appearing in only one modality, and two appearing in both modalities simultaneously), and their positions were determined randomly. Participants made responses manually by pressing on the letter “A” of a standard keyboard with their left index finger for visual targets, and on the letter “L” with their right index finger for auditory targets. No responses were required for non-targets. In this task, the level of difficulty was varied by changing the level of n (34), which we used to track the participants’ performance. After each block, the participants’ individual performance was analyzed, and in the following block, the level of n was adapted accordingly: If the participant made fewer than three mistakes per modality, the level of n increased by 1. It was decreased by 1 if more than five mistakes were made, and in all other cases, n remained unchanged. One training session comprised 20 blocks consisting of 20 + n trials resulting in a daily training time of 25 min.
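The block-by-block adaptation rule described in this passage can be written as a short function. This is a sketch of the rule as quoted, not the authors' software. Two points are assumptions, because the quote does not settle them: that "more than five mistakes" applies to either modality taken separately, and that n never goes below 1 (the hypothetical `min_n` parameter).

```python
def next_n(n, visual_errors, auditory_errors, min_n=1):
    """Level of n for the following block, given the errors in the current block."""
    if visual_errors < 3 and auditory_errors < 3:
        # Fewer than three mistakes in each modality: harder block.
        return n + 1
    if visual_errors > 5 or auditory_errors > 5:
        # More than five mistakes (assumed: in either modality): easier block.
        return max(min_n, n - 1)
    # All other cases: n is unchanged.
    return n

# Hypothetical sequence of (visual, auditory) error counts over five blocks.
n = 2
for errors in [(1, 2), (0, 1), (3, 4), (6, 2), (2, 2)]:
    n = next_n(n, *errors)
    print(errors, "->", n)
```

Because the level rises only when both streams are handled almost without error, the level of n reached at the end of training can serve as an index of training performance, which is how the authors track their participants.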

They report the result as strongly positive, with transfer gain in the fluid test BOMAT (but not Raven) increasing with the amount of training in dual n-back. But Sternberg (2008) identifies several complications. The IQ gain should be accompanied by real-world variables, e.g., school and job success. The transfer tests should be correlated among them and with other tests in post-test as much as they do in pre-test session. The absence of follow-up does not tell us if the gain is sustainable. The absence of active control group inflates the effect size. The use of a single WM task does not tell us if the gain in WM is due to the peculiarity of this single test or really due to an overall improvement in the WM at the construct level. The IQ gain in a single Gf measure (BOMAT) does not prove there is an improvement in Gf at the construct level. But we could also add that the inclusion of transfer tests targeting only a single cognitive domain (fluid ability) does not allow us to examine whether or not the gain can be extended to all other cognitive domains. This study is flawed and unhelpful.

There are still other drawbacks. Moody (2009) says that Jaeggi et al. (2008) had no reason to give different tests to different groups, i.e., Raven for the 8-day training and BOMAT for the 12-, 17- and 19-day training. Also, because they reduced the time allowed for the BOMAT (10 min. instead of 45 min.), the subjects were not given the opportunity to attempt the more complex items. Thus, the test failed to challenge their fluid intelligence. Moody also argues that the BOMAT uses a 5x3 matrix format, whereas the Raven uses 3x3 matrices. With 15 visual figures to keep track of in each test item instead of 9, the BOMAT, compared to the Raven, puts added emphasis on subjects’ ability to hold details of the figures in working memory, especially under conditions of severe time constraint. Given this, it is not surprising that extensive training on WM would facilitate performance on the easiest items in the BOMAT. Another problem is that one task of the dual n-back “involved recall of the location of a small square in one of several positions in a visual matrix pattern”. It seems to bear some similarity to fluid tests whose format is based on a visual matrix, such as the BOMAT or Raven. Gains in BOMAT and Raven can then be considered near-transfer gains. A definitive proof of far-transfer effect must involve very dissimilar tests.

The relationship between n-back performance and matrix reasoning—Implications for training and transfer

Jaeggi et al. (2010) conduct two studies. The first study involves multiple regression relating the transfer tests to dual or single n-back. For the second study, they recruit 99 undergraduates (mean age=19.4 years; SD=1.5; 76 women) in Taipei, but end up with a final sample of 21 for single n-back (visual), 25 for dual n-back, and 43 for the no-contact control group. They earned course credit. In addition, the training groups received NT$ 600 (about US$20) as well as the training software after study completion. There were 20 training sessions. The transfer tasks consist of single n-back (with stimuli on which none of the groups has been trained), working memory span (OSPAN) and two tests of Gf (29-item, 16-min. BOMAT, and 18-item, 11-min. Raven). Table 9 reports the effect sizes (d). For single n-back, OSPAN, Raven and BOMAT, they are 1.33, -0.18, 0.65, 0.70 in the single n-back (visual) group, 1.54, -0.07, 0.98, 0.49 in the dual n-back group, and 0.20, 0.23, 0.09, 0.26 in the no-contact group.
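One rough way to read these figures is to subtract the pre-to-post effect size of the no-contact group from that of each training group, which gives an approximate net effect of training. This is only a back-of-the-envelope reading of the values quoted above, not an analysis performed by the authors, and it ignores differences in sample size and variance between groups.

```python
# Pre-to-post effect sizes (d) quoted above from Table 9 of Jaeggi et al. (2010).
table9 = {
    "single n-back": {"n-back": 1.33, "OSPAN": -0.18, "Raven": 0.65, "BOMAT": 0.70},
    "dual n-back":   {"n-back": 1.54, "OSPAN": -0.07, "Raven": 0.98, "BOMAT": 0.49},
    "no-contact":    {"n-back": 0.20, "OSPAN": 0.23, "Raven": 0.09, "BOMAT": 0.26},
}

def net_effect(trained, control):
    """Difference between the trained and control pre-to-post d, per task."""
    return {task: round(trained[task] - control[task], 2) for task in trained}

net = {
    group: net_effect(table9[group], table9["no-contact"])
    for group in ("single n-back", "dual n-back")
}

for group, effects in net.items():
    print(group, effects)
```

Read this way, the advantage of the trained groups is about 0.56 to 0.89 on the Raven and 0.23 to 0.44 on the BOMAT, while on the OSPAN the trained groups do worse than the no-contact group.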

In response to Moody (2009), they cite Salthouse (1993) and Unsworth & Engle (2005). The first study examines how working memory might mediate adult age difference on Raven. The second study has merely correlated different portions (i.e., various levels of difficulty, memory load, and rule type) of the Raven with some other tests (e.g., OSPAN) and found similarity in the correlations. As explained in Section 1, the correlation tells nothing about mean score changes over time. However, in their own study, Jaeggi et al. (2010) report a larger transfer effect in the second half (which is harder) of Raven, but no larger nor smaller transfer effect in the second half of BOMAT. Even with this point granted, this study has the same flaws as the Jaeggi (2008) study. Refer to Section 1.

Cognitive training as an intervention to improve driving ability in the older adult

Seidler et al. (2010) trained 29 young and 11 old adults on the dual n-back, and 27 young and 18 old adults on a knowledge training test, all participants over a minimum of 17 sessions (that is, some but not all have completed up to 25). They were paid. The transfer task is another WM measure (card rotation, operation span and visual array comparisons), the Raven’s matrices along with a motor sequence learning task and a sensorimotor adaptation test for the measure of cognitive ability, and an STISIM Drive driving simulator (a test correlated with real-world, in-car driving performance). No effect size was reported, only the significance tests, and they show significant group*gain interaction on the WM but not on the Raven and driving simulator. Due to the absence of effect size, the study is worthless. At the very least, given the reported sample size, a non-significant interaction probably suggests either a small or nonexistent gain.

Working Memory Training in Older Adults: Evidence of Transfer and Maintenance Effects

Borella et al. (2010) had a sample of older adults (aged 67-75), randomly assigned, with 20 in the trained group and 20 in the control group. The groups did not differ in age, education or WAIS-R vocabulary (Table 1). The criterion task was the Categorization Working Memory Span (CWMS), which is similar to the classic WM tasks. The nearest-transfer effects involve visuospatial WM (Dot Matrix), the near-transfer effects involve short-term memory (forward and backward digit span), and the far-transfer effects involve fluid IQ tests (Cattell CFT, Stroop Color task, Pattern Comparison test). The training program involves a modified version of the CWMS, over 3 sessions (from #2 to #4). Session 5 is the post-test and the follow-up occurs 8 months later. Table 2 shows the results. In the trained group, Dot Matrix and forward and backward digit span show a large increase at post-test, but a large decline at follow-up. The difference between pre-test and follow-up is small. Cattell CFT shows considerable gain in the trained (but not control) group while the other fluid tests show a decline over time, a decline that wasn’t observed in the control group. Thus, the study is inconclusive, especially if we consider that the sample is not young and that the transfer tests are narrowly defined (only Gf).

Improvement in working memory is not related to increased intelligence scores

Colom et al. (2010, pp. 5-7, Figures 1 & 2) use a sample of 288 psychology undergraduates (82% females) with a mean age of 20.1 (SD=3.4). They participated to fulfill a course requirement, and were randomly assigned to two groups. The first group (STM-WMC), with N=173, is given highly challenging and diverse tasks; the second group (PS-ATT), with N=115, is given simple and diverse tasks. All transfer (IQ) tests were split into odd and even items (the even items are harder). Intelligence was measured by the Raven (set II of APM, 18 items within 20 min.) along with the abstract reasoning (DAT-AR, 20 items within 10 min.), verbal reasoning (DAT-VR, 20 items within 10 min.), and spatial relations (DAT-SR, 25 items within 10 min.) subtests from the Differential Aptitude Test Battery. Short-term memory (STM) was measured by forward letter span and forward digit span. Working memory (WMC) was measured by the reading span, computation span, and dot matrix tasks. Processing speed (PS) was measured by simple verification tasks. Attention (ATT) was measured by the verbal and quantitative versions of the Flanker task and the Simon task. The procedure involves 5 sessions, one per week. In the 1st week, people are tested on the odd-numbered items of the 4 tests. In weeks 2, 3, and 4, the STM-WMC group is given the DAT-SR in each session, but each time the tests were different, while the PS-ATT group is given the APM, DAT-AR and DAT-VR in each session, but each time the tests were different. In week 5, they are given the even-numbered items of the 4 tests. See Table 1.

Improvement is evident in STM and WMC tasks from the 1st to the 2nd session (equivalent to 8 and 5 IQ points, respectively) but it was much smaller from the 2nd to the 3rd session (equivalent to 2 IQ points). This contrasts with the rate of improvement for PS and ATT, from the 1st to the 2nd session the change was equivalent to 8 and 11 IQ points respectively, whereas from the 2nd to the 3rd session the change was equivalent to 7 and 9 IQ points respectively. Thus, training on working memory is less effective than training on processing speed. In both groups, DAT-AR shows no gain at all. The correlation (Pearson and Spearman) between g-loadings and pre-test/post-test changes is strongly negative for both groups. But given the small number of (sub)tests (only 4), this relationship is not robust. The predictive validity for intelligence at pretesting seems to diminish for PS and ATT, but not for STM and WMC. Thus, even if PS and ATT performance increases over time, it seems to be unrelated with gains in intelligence.

Hundred days of cognitive training enhance broad cognitive abilities in adulthood: findings from the COGITO study

Schmiedek et al. (2010) studied a sample of 101 young (20-31 yrs) and 103 old (65-80 yrs) subjects. There were 44 young and 39 old subjects assigned to the no-training control group; they received 10 sessions of 2.0–2.5 hours filled with mostly cognitive testing. The experimental subjects received 100 daily sessions (1 hour each). No other study so far has been so intensive. The intervention group was paid 1450-1950 euros (depending on the number of completed sessions) and the control group was paid 460 euros.

The practice tasks given in each session involve 12 different tasks (two-choice reaction tasks and three comparison tasks for (PS) perceptual speed; memorization of word lists, number-word pairs, object position in a grid for (EM) episodic memory; alpha scan, numerical memory updating, spatial n-back for (WM) working memory). The transfer tasks involve WM near transfer (updating tasks such as animal span, 3-back numerical, and memory updating spatial) and WM far transfer (complex span such as reading span, counting span, rotation span) and episodic memory (word pairs such as the nine tasks of the BIS test which are measuring Gf, EM and PS).

They use a latent variable approach, which has the advantage of correcting for measurement error. Table 3 and Figure 3 show the effect sizes (standardized gain of experimental group minus control group) are very heterogeneous. For WM near transfer tasks, only animal span for old subjects showed meaningful gain (d=0.42) and only 3-back numerical for young subjects showed meaningful gain (d=0.42). At the latent factor level, there is also a large gain for young (d=0.36) and old (d=0.31) subjects. Strong measurement invariance (i.e., factor loading and intercepts) was apparently tenable. For WM far transfer tasks, the gain in rotation span and reading span for old subjects is d=0.60 and d=0.23. All three tasks of WM far transfer show little to no improvement in young adults. The authors believe that ceiling effect in CS and RS tasks of WM far transfer could have reduced the gains. Latent factors were not modeled because measurement invariance was violated. But these parameters are very unstable if sample size is small. For reasoning tasks (verbal, numerical, figural/spatial, and Raven) the d gains in young adults are 0.13, 0.33, 0.38, 0.33, respectively. For old people, they are effectively zero, but the Raven has an effect size of 0.54. Latent factor gains were d=0.19 and d=-0.02 for young and old adults. All four tasks of episodic memory show sizeable gain in young adults but two of the tasks show zero gain in old adults. Latent factor gains were d=0.52 and d=0.09 for young and old adults. For perceptual speed, verbal has d=0.07, numerical has d=0.20 and figural/spatial has d=-0.26 among young adults. The gains tend to be negative or null in old adults. They say that the interaction of group*session was not reliable.

Finally, they correlate the EM transfer latent change factor with EM practiced latent change factor, which was r=0.58, but this correlation with the WM latent change factor was just r=0.25. Also, in contrast to their perfect correlation among each other, the correlation of the latent WM factors with the latent change factor of EM practiced tasks was much smaller (r=0.52). This suggests that WM training does not induce general improvement.

In general, young people gain more than old people. On the other hand, the training tasks have fixed difficulty levels based on pre-test performance (i.e., they are not adaptive). The first consequence is that young people find the tasks relatively less challenging than old people. The second is that WM is not challenged anymore, and the training then has consisted in simple automation.

This study is usually cited as being one of the most intensive experiments, and has been said to be successful in producing gains. This is not true because the battery of tests is narrowly defined and WM far transfer tasks and perceptual speed (PS) show no improvement. The authors even admitted that the lack of transfer to PS reflects the lack of similarity between tests.

Short- and long-term benefits of cognitive training

Jaeggi et al. (2011) conduct another study. They have a sample of 62 elementary and middle school children (32 in experimental, 30 in active control) that are randomly assigned. They were requested to train for a month, five times a week, 15 min per session. The follow-up test occurs 3 months after the post-test. Apparently, they were not paid. The training task is a video-game like WM task. From their table 1, there is no doubt that the two groups improve equally in the Raven SPM and the Test of Nonverbal Intelligence (TONI) over the 3 assessment occasions. The result is thus negative for the hypothesis. The authors split the dual n-back group into small- and large-gain groups (N=16 and N=16) in order to compare their trajectories. There is no clear evidence that the group of large training gain have an advantage in score gain for Raven SPM, but they have an advantage for the TONI. The Raven SPM has been split into odd and even items with 29 items per version and 10 minutes whereas the TONI was a 45-item test with no time limit (the authors say that nearly all children completed the Raven within the given timeframe).

Working memory training does not improve intelligence in healthy young adults

Chooi & Thompson (2012) use a sample of students in psychology. Participants receive research credits for the first hours and are paid for each subsequent hour. The training group receives dual n-back and the active control receives a modified web-based version of the dual n-back task, in which the level of difficulty is fixed. The subjects working on the filler task completed 20 trials of the dual 1-back working memory task each time they came in for their “training” session. They divide the sample into 6 parts, 8-day training (N=9), 20-day training (N=13), 8-day active control (N=15), 20-day active control (N=11), 8-day passive control (N=22), 20-day passive control (N=23). They give the Mill-Hill vocabulary (22 items), another Vocabulary test (50 items in part I and 25 items in part II), Word Beginning and Ending, Colorado Perceptual Speed (30 items), Identical Pictures (48 items), Finding A’s, Card Rotations (14 items), Paper Folding (10 items), Mental Rotation (10 items), Raven APM (36 items). The Mill-Hill and Raven are not timed, an important feature usually absent in all other studies. The tests have been selected given the VPR model of Johnson & Bouchard (2005) known by now to be the best representation of the structure of human intelligence.

In their study, the group who trained more days (20 vs 8 days) gained more, which replicates Jaeggi et al. (2008). Table 4 shows the effect sizes for each subgroup in all tests. One obvious curiosity is the decline in scores for nearly all tests and all subgroups, even though they continue to improve on the training task after each day of training. Only Paper Folding, Card Rotation, and the OSPAN show a gain, but not consistently. It is difficult to explain what happened given the absence of practice effects. If there is a ceiling effect, that could be the explanation, but there is no analysis of histogram and skewness.

Working-memory training in younger and older adults: training gains, transfer, and maintenance

Brehmer et al. (2012) have a randomized sample of 29 young and 26 old adults in the adaptive training group and 26 young and 19 old adults in the low practice group (i.e., active control). The mean age of young people is 26, and for old people the mean age is 64. They have been paid an equivalent of 440 US dollars for participation. The program involves 5 weeks of intervention, and a follow-up after 3 months. The experimental and control groups receive both the Span Board Forward and Backward Digit Span. However, in the experimental group, the task difficulty was adjusted as a function of individual performance across training, while in the control group, the task difficulty remained constant at the same low starting level, namely remembering 2 items.

The near transfer tasks are the Span Board Backward and Forward Digit Span. The far transfer tasks are the PASAT (sustained attention), Stroop (interference control), RAVLT (episodic memory), the set E (12 items per set) of Raven SPM (non-verbal reasoning), and CFQ (a self-rating scale on cognitive functioning). Table 2 shows the mean scores for the tests. For the 2 tests of near-transfer, both young and old people, the experimentals clearly outperformed the controls. In the 4 cognitive tests (disregarding CFQ) of far transfer, for young people, there is no evidence of meaningful improvement, and the experimentals have only a slight advantage over the controls. For the old people, the experimentals have a better outcome on PASAT but worse on the Raven.

No evidence of intelligence improvement after working memory training: A randomized, placebo-controlled study

Redick et al. (2013) use a sample of 75 subjects who completed all sessions, and 55 additional subjects who completed at least the pre-test session. For the pre-test, mid-test, and post-test sessions, subjects were compensated $40 per completed session; subjects completing all three transfer sessions received a 10% bonus ($12). Compensation for each practice session was $10, with a 10% bonus at the end of the study for completing all practice sessions ($20). Both the dual n-back (experimental) and visual search (placebo) training groups had 20 sessions. Visual search is unlikely to train WM, so it serves as an effective active control group. Because they emphasize the importance of having a large set of cognitive tests, they give the RAPM (12 items within 10 min.), RSPM (20 items within 15 min.), Cattell CFT (19 items), and other tests of multi-tasking, working memory, crystallized, perceptual speed, and fluid ability, such as paper folding (6 items), letter sets (10 items), number series (5 items), inferences (6 items), analogies (8 items), vocabulary (13 items), general knowledge (10 items), and other WM tasks. The study compares the outcome of pre-test, mid-test (10 sessions) and post-test (20 sessions).

Given Table 2, some tests show improvements in the dual n-back condition, but most don’t. The scores in Raven APM and SPM as well as Cattell CFT have declined in all groups (the exception is visual search, for which the Cattell CFT score has increased modestly). In general, the dual n-back group shows a slightly better outcome over time than either the visual search or control (passive) group. The dual n-back and visual search groups don’t differ meaningfully in terms of effort and enjoyableness, and in fact the visual search group has a greater score on these two variables. The members of the dual n-back group are more likely to report that the experiment improved their intelligence and memory (Table 5), although it didn’t. WM capacity, as assessed by symmetry and running span, improved similarly in all 3 groups.

An important analysis is presented in Table 7. The authors correlate the amount of training gain (by median-splitting the group into low and high scores) with transfer gain for a composite score of spatial and verbal fluid intelligence, multitasking, WM capacity. This has the advantage of reducing unreliability. The respective correlations are 0.24, -0.19, 0.30, 0.39 for dual n-back (N=24) and -0.10, 0.36, -0.07, -0.19 for visual search group (N=29). There is larger gain in transfer tasks for the experimental group.
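As a rough illustration of the kind of analysis described for Table 7, the sketch below median-splits a training-gain variable and correlates the resulting low/high indicator with a transfer-gain score. The data and the helper names `median_split` and `pearson_r` are invented for illustration; they are not taken from Redick et al. (2013), and the authors' exact procedure may differ.

```python
from math import sqrt
from statistics import mean, median

def median_split(values):
    """Code each value as 1 (above the median) or 0 (at or below it)."""
    cutoff = median(values)
    return [1 if v > cutoff else 0 for v in values]

def pearson_r(x, y):
    """Plain Pearson correlation between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

# Hypothetical gains for six subjects (not data from the study).
training_gain = [1, 2, 3, 4, 5, 6]
transfer_gain = [0, 1, 0, 2, 3, 2]

high_gain = median_split(training_gain)   # low vs high training gain
r = pearson_r(high_gain, transfer_gain)   # point-biserial correlation
print(round(r, 2))
```

A positive value means that the subjects who gained more on the trained task also tended to gain more on the transfer composite. With groups as small as N=24 or N=29, such correlations carry wide uncertainty.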

There is a serious limitation, however (p. 374). The authors admit the possibility of a ceiling effect, i.e., subjects obtaining the maximum possible score (or close to it). This is because of their shortened tests.

Distinct transfer effects of training different facets of working memory capacity

von Bastian & Oberauer (2013) use a sample of 83 women and 38 men (mean age=23.34, range 18–36 years) who were students at a Swiss university or held a university degree (there were 3 missing values for the longitudinal data). Those who completed the study received CHF 150 (about US$ 160) or course credits. Additionally, they had the chance to earn a bonus of up to CHF 50 depending on the level of difficulty they achieved during training. They were randomly assigned to 4 groups (1 active control and 3 training groups), and had 20 sessions (30-40 min.) over 4 weeks of extensive training. The active control group has N=30. The 3 training groups correspond to storage processing (N=30), relational integration (N=28), and supervision (N=30), and each of these tasks was trained for 10 minutes per session. Following the common procedure, difficulty is adapted to the individual’s performance. The active control group, on the other hand, has to complete 3 visual matching tasks, responding as fast and as correctly as possible. The training was self-administered at home via the software Tatool. To maximize commitment, participants had to sign a participation agreement at the beginning of the study stating that they would take the completion of the training sessions seriously. They were also alerted that their training data would be constantly monitored. After each session, they had to upload their data to a webserver, permitting a constant control of participants’ compliance.

They used linear mixed-effect (LME) approach because they can model fixed effects of groups (experimental vs control) and random effects of individuals and cognitive tasks. Accordingly, the standardized gain on individual tasks is modeled as deviating randomly from the construct mean in the same way as individual subjects deviate randomly from the group mean.

The battery of 15 transfer tasks consists of 13 working memory tests (3 Brown-Peterson tasks, 1 memory updating, 3 monitoring, 1 binding, 3 task switching, 1 Stroop task, 1 flanker task) and 2 reasoning tests (32-item syllogisms, and the short form of BIS-4S which has 16 subtests). The BIS battery comprises reasoning, creativity, memory, and speed. The BIS has a relatively good correlation with most of the 12 WM tasks, while syllogisms have correlations going from weak to good. Each of the Brown-Peterson, Monitoring and Task Switching tests has 3 tasks: one figural, one numerical, one verbal. Given Figure 2, the CFA model with these nine tests yields 3 correlated latent factors (storage processing, relational integration, supervision), and their correlations were 0.39, 0.16 and 0.21.

Figure 5 compares the gains measured at post-test and follow-up for all tests between active control and Storage-Processing group. The BIS-4S shows an advantage for the experimentals at post-test that was sustained in follow-up, while the syllogisms shows a large advantage for the experimentals in follow-up but no difference at all in post-test. There is a notable advantage at both periods for the experimentals in shifting and inhibition tasks (Flanker and Stroop). The advantage on WM tasks was only visible for binding. The others showed no notable difference, or fade out effect at follow-up.

Figure 6 compares the gains measured at post-test and follow-up for all tests between active control and Relational Integration group. There is no advantage for the experimentals for either of the two reasoning tests, a large advantage in shifting, a modest advantage in Flanker but not Stroop task, and a large advantage in only 2 tasks on WM.

Figure 7 compares the gains measured at post-test and follow-up for all tests between active control and Supervision group. There is a modest advantage for the experimentals in BIS-4S before a complete fade out at follow up, but the advantage on syllogisms was large and sustainable at follow-up. There is an advantage for the experimentals in all WM tasks, in inhibition tasks, and a very large one in shifting tasks.

Effects of working memory training in young and old adults

von Bastian et al. (2013) examine a sample of young (age=23) and old (age=69) adults who took part in a 4-week (20 sessions) cognitive training. They were randomly assigned to either WM training (34 young and 27 old) or active control training (32 young and 30 old), and in a double-blinded manner. They are paid CHF 100 (about US $127) or course credit. The WM training involves numerical complex span (storage processing), Tower of Fame (relational integration), figural task switching (supervision). The control group took a general knowledge quiz, a visual search task, and a counting task. The task difficulty was adapted to the subject’s performance. The near-transfer tasks were the three trained WM tasks but with a slight modification. The intermediate transfer tasks involve WM tasks such as Binding and N-back. The far transfer tasks comprise the Raven APM (the 36 items are split into odd-even items, thus 18 in each assessment) without time limit and the BOMAT (29 items within 45 minutes, but is given only to young). The training was self-administered, via Tatool Online.

Their previous study had 3 training groups, each engaged in a single specific training. These were storage processing, relational integration, and supervision. In this study, there is one training group engaging in these 3 types of training.

Table 2 shows that the control group has improved the RAPM scores among young and old people but the WM group did not. For the young who took the BOMAT, the score improvement appears larger in the control group.

Failure of Working Memory Training to Enhance Cognition or Intelligence

Thompson et al. (2013) recruited adults, aged 18-45. The experimental (N=20, age=21, 20 sessions in 29 days) and control (N=19, age=21, 20 sessions in 28 days) groups receive, respectively, the dual n-back and multiple object tracking (MOT). Their initial scores on RAPM and combined 4 tests of WASI are identical. The no-contact group has N=19, aged 23. The near-transfer tasks involve 3 complex WMC tests whereas far-transfer tasks involve RAPM, several subtests of the WASI/WAIS (blocks, matrices, vocabulary, similarities), a comprehension and a reading of the Nelson-Denny, and 3 tests of (psychometric) Processing Speed, such as WAIS III digit/symbol coding, visual matching and pair cancellation of the WJ III. Participants in the training groups were paid $20 per training session, with a $20 bonus per week for completing all five training sessions in that week. All participants were paid $20 per hour for behavioral testing, and $30 per hour for imaging sessions (data from imaging sessions are reported separately). The Raven has been split into two parts of 18 items each, but with equal difficulty, for the pre- and post-test.

One plausible reason to expect transfer from a trained task to an untrained task is that those tasks share common cognitive or neural processes, as evidenced by high correlations between those tasks. The correlations (Table 2) of transfer tasks are generally higher with dual n-back than with MOT. Figure 2 depicts the boxplots with duration of the gain. The dual n-back group substantially improved its n-back score (but not its MOT score) at the post-test session and maintained it at the follow-up session. The corresponding pattern is evident for the MOT group, with no improvement in n-back but a strong one in MOT score. Table 3 shows the mean score in trained and transfer tasks for the 3 groups. For the 3 near transfer tasks, the dual n-back group shows a much stronger gain than the MOT group but (curiously) a gain comparable with that of the no-contact control group. None of the groups shows improvement in RAPM. There is no evidence that the dual n-back group did better (in fact, it did comparatively worse) than either of the two other groups in any of the 4 WASI subtests. The dual n-back and MOT groups improve similarly on the Nelson-Denny reading measurements. Among the 3 processing speed tests, only the digit symbol coding shows a moderate advantage in gains for the dual n-back group. Finally, they report that personality assessments and motivation were not clearly associated with positive transfer outcomes. While Jaeggi et al. (2008) found a significant effect size, Thompson’s study had 33% more training per session but no greater gains.

Near and Far Transfer of Working Memory Training Related Gains in Healthy Adults

Savage (2013) has a sample of 70 adults (aged 30-60). The experimental (Working Memory, N=35, but N=24 at post-test) group receives the dual n-back, and the active control (Processing Speed, N=35 but N=30 at post-test) group receives 2 visual 1-back games that require quickly determining whether a given symbol matches the symbol presented immediately prior. The focus of this training (in the active control) was on speed of thought and decision making rather than maintenance and/or manipulation of information in working memory. All subjects were instructed to train for 20-30 minutes per day, 5 days per week, for 5 weeks at a location of their convenience.

Table 2 shows the mean scores at time 1 and 2. The WM group (N=23) has only a slight advantage over the PS group (N=27) on CCFT but not at all on Raven (18 items within 20 min.). There is no difference in gains for the 2 processing speed tests, Symbol Search, and Coding subtests of the Wechsler’s scale. There was no differential gain either in tests of WM, such as Digit Span, Spatial Maintenance and Manipulation, and Automated Operation Span. The CCFT, forms A and B, were administered and counterbalanced across participants, as with the Raven. CCFT Scale 3 contains four subtests: series, classifications, matrices, and conditions, providing a measure of fluid intelligence beyond matrices.

Adaptive n-back training does not improve fluid intelligence at the construct level: Gains on individual tests suggest that training may enhance visuospatial processing

Colom et al. (2013) use latent variable approach (IRT) because it provides better scaling of individual differences relative to the raw score metric, and reduces the attenuation bias due to measurement error. IRT also takes into account non-linear relationships between traits and observed scores. When different forms are applied in pre-test and post-test sessions, as in their case, IRT is an ideal tool for adjusting (equating) differences in difficulty. The tests involve Gc tests of cultural knowledge such as the verbal reasoning and the numerical reasoning subtests of DAT (DAT-VR and DAT-NR), and the vocabulary subtest of PMA (PMA-V), and Gf tests such as the Raven SPM, the abstract reasoning subtest of DAT (DAT-AR), the inductive reasoning of PMA (PMA-R). WMC was measured by the reading span, the computation span, and the dot matrix tasks. Attention control was tapped by cognitive tasks based on the quick management of conflict: verbal (vowel–consonant) and numerical (odd–even) flanker tasks, along with the spatial (right–left) Simon task. There were 24 sessions (12 weeks). They use a passive control group (i.e., no contact). The subjects (all women) were paid (200€ if training, 100€ if control). There were 3 types of training tasks, visual n-back, auditory n-back, and dual n-back.

Figure 3 shows the scores for training (N=28) and control (N=28) groups at the construct level (Gc and Gf derived from IRT transformations). Figure 4 shows the standardized change, i.e., (post-test - pre-test) / SD at pre-test, at the construct level, for Gf (0.81 vs 0.46), Gc (-0.03 vs 0.07), WM (0.54 vs 0.41), and ATT (0.26 vs 0.12). Figure 5 shows the gains in each test of each construct. WMC gains vary across tasks, i.e., Reading Span (0.23 vs 0.09), Computation Span (0.25 vs 0.52), Dot Matrix (0.92 vs 0.65). It appears that WMC changes display some ambiguities. The 3 attention tasks show no evidence of an advantage for the training group. Interestingly, across the 3 Gf tests, only PMA-R was given with a time limit and it was the only test showing no gain advantage for the training group. Colom et al. (2013) see ambiguities in the Chooi & Thompson (2012) study: they suggest that the verbal fluency and perceptual speed tests given in the retest session could have been more difficult, which would nullify any retest effect. What they imply is that an easier (harder) test at retest can artificially increase (reduce) gains. Equating the difficulty parameter through IRT would then have been an appropriate approach.
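The standardized change used in Figure 4 is the mean difference between post-test and pre-test divided by the pre-test SD. A minimal sketch of that computation from raw scores follows; the function name `standardized_change` and the score lists are hypothetical, and the study itself works with IRT-derived scores rather than raw ones.

```python
from statistics import mean, stdev

def standardized_change(pre_scores, post_scores):
    """(mean post-test - mean pre-test) / SD at pre-test."""
    return (mean(post_scores) - mean(pre_scores)) / stdev(pre_scores)

# Hypothetical raw scores for five subjects (not data from the study).
pre = [10, 12, 14, 16, 18]
post = [12, 14, 16, 18, 20]

change = standardized_change(pre, post)
print(round(change, 2))
```

Dividing by the pre-test SD expresses every test on the same scale, which is what allows the training and control groups to be compared across constructs measured in different units.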

Working memory training may increase working memory capacity but not fluid intelligence

Harrison et al. (2013, Figures 4-6) use a sample of 83 undergraduate students, with random assignment. All subjects completed a pretest on a battery of near-, moderate-, and far-transfer tasks, followed by 20 sessions of training lasting approximately 45 min each, and finally a posttest. All subjects were paid $40 for each assessment session, and $10 per training session. Subjects could also earn up to $12 per training session by obtaining high levels of performance on the tasks. This increased the chance that subjects were highly motivated to perform the training tasks over the 20 sessions. They use 3 training groups, each being trained on a different task: visual search, simple span, or complex span. Each group receives 6 near-, 4 moderate-, and 3 far-transfer tasks. The main analysis is ANCOVA (pre-test score used as control variable). In the 6 near-transfer tasks, nearly all show some gains, more so for the complex span group. In the 4 moderate-transfer tasks, the gain is evident for only 2 tasks and only for the complex span and simple span training groups. In the 3 far-transfer tasks, there is no gain in any group.
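To make the ANCOVA logic concrete, the sketch below computes covariate-adjusted post-test means using the pooled within-group slope of post-test on pre-test. It is a textbook simplification with invented data, not a reproduction of the authors' analysis; the function name `ancova_adjusted_means` is hypothetical.

```python
from statistics import mean

def ancova_adjusted_means(groups):
    """groups maps a group name to a list of (pre, post) score pairs.

    Returns post-test means adjusted to the grand pre-test mean,
    using the pooled within-group regression slope.
    """
    grand_pre = mean(pre for pairs in groups.values() for pre, _ in pairs)
    sxy = sxx = 0.0
    group_means = {}
    for name, pairs in groups.items():
        pre_mean = mean(pre for pre, _ in pairs)
        post_mean = mean(post for _, post in pairs)
        group_means[name] = (pre_mean, post_mean)
        for pre, post in pairs:
            sxy += (pre - pre_mean) * (post - post_mean)
            sxx += (pre - pre_mean) ** 2
    slope = sxy / sxx  # pooled within-group slope
    return {
        name: post_mean - slope * (pre_mean - grand_pre)
        for name, (pre_mean, post_mean) in group_means.items()
    }

# Hypothetical scores: the training group starts 2 points higher at pre-test.
data = {
    "training": [(10, 14), (12, 16), (14, 18)],
    "control": [(8, 9), (10, 11), (12, 13)],
}
adjusted = ancova_adjusted_means(data)
print(adjusted)
```

In this toy example the raw post-test difference is 5 points, but part of it reflects the higher starting level of the training group, so the adjusted difference is smaller.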

Training working memory: Limits of transfer

Sprenger et al. (2013) conduct 2 studies. They use Bayesian methods to evaluate the strength of the evidence for and against the null hypothesis. This is because, according to them, most research relies on null hypothesis significance testing (NHST). Such a method cannot test the likelihood of the null (no effect) versus the alternative hypothesis (effect). Their statistical tests use Bayes factors (BF); a BF > 1.0 indicates evidence in favor of the null hypothesis. The BF statistics were based on the usual t (the greater the sample, the greater the t statistic). As a point of reference, a BF below .33 (1/3) corresponds to positive support for the alternative hypothesis whereas a BF > 1 corresponds to evidence for the null hypothesis, with values greater than 3 denoting strong support. BFs between 0.33 and 3 generally are interpreted as providing weak evidence, and values close to 1 are essentially uninformative. One obvious problem with BF statistics is their dependence on sample size; the higher the N, the greater the BF. In fact, a Bayesian approach may not even be needed. If the mean score is different, then, no matter how large the sample is, the hypothesis supports the null vs alternative.
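The reference points for reading these Bayes factors can be written as a small lookup. This is only a sketch of the thresholds as summarized here (BF expressed as null over alternative); the function name `interpret_bf01` and the label wording are assumptions, not something taken from Sprenger et al. (2013).

```python
def interpret_bf01(bf):
    """Label a Bayes factor expressed as null over alternative."""
    if bf <= 0:
        raise ValueError("a Bayes factor must be positive")
    if bf < 1 / 3:
        return "positive support for the alternative"
    if bf > 3:
        return "strong support for the null"
    return "weak evidence"

# Two of the values below (0.31 and 6.73) are BFs reported for the verbal
# reasoning tests at post-test; 1.0 is the uninformative midpoint.
for value in (0.31, 1.0, 6.73):
    print(value, "->", interpret_bf01(value))
```

Values just above or below a cutoff should not be read as qualitatively different; the thresholds are conventions, not sharp boundaries.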

The first study has a sample of 127 participants, randomly assigned, with a mean age of 23. Members of the control group have no knowledge of the existence of the training group, and vice versa. Participants in the training condition earned $500 for completing all 3 assessment periods plus all 20 training sessions. Participants in the control condition received $180 for completion of the 3 assessment periods. The battery is wide and consists of 16 tests measuring WM (verbal and spatial), inhibition, verbal reasoning (from the Kit of Factor-Referenced Cognitive Tests) and verbal skills (from the AFOQT). All tests are timed (around 10-15 minutes for each WM test and 5-10 minutes for each cognitive test; see Table 1). The training battery consisted of 8 tasks designed to train executive WM functions, including a letter N-back task, auditory letter running-span, block-span, letter-number-sequencing, and tasks developed by Posit Science (match-it, sound-replay, listen-and-do, and jewel-diver).

Table 2 shows the results, with BF statistics. First, the post-test results. Among WM tests, operation, listening and symmetry span show a training effect, but not rotation span. Among verbal reasoning tests, one has BF=0.31, the other has BF=6.73. In verbal abilities, both have BF>6. The difference in inhibition is inconsistent. Next, the 3-month follow-up. Among WM tests, only the listening span has a BF larger than 1. All 4 verbal tests have a BF larger than 3. The difference in inhibition is inconsistent, once again. Regarding WM, they write “For instance, the operation-span task required participants to remember sequences of letters, as did several of our training tasks (LNS, n-back, and running span). Symmetry-span required participants to remember the spatial location of a dot flashed in a 4 × 4 grid, which is similar to the block-span training task.” (p. 647). They suspect gain is greater when the transfer tasks share characteristics or stimuli with the trained tasks. Even when looking at the mean score in both conditions (and disregarding BF statistics), there is no consistent evidence that the training group gained more than the control group.

The second study has a sample of 138 females with complete longitudinal data. Participants were compensated $30 for completing the pre-test and $70 for completing the post-test, and were randomly assigned to one of 4 possible conditions: a placebo (i.e., active) control training group trained on N-back, an interference training group, a visuo-spatial (WM) training group trained on the Shapebuilder test, and a combination training group (which performed both the interference and visuo-spatial WM training tasks). This study also uses a true double-blind pre-test/post-test experimental design, in which neither participants nor study moderators had knowledge of the condition to which participants were assigned. The 4 tests of interest were Shapebuilder, N-back, reading span, and Raven APM; they all show acceptable correlations with each other (Table 4), which means they share similar underlying processes. One would then expect a transfer effect to occur for the other, positively correlated tests. Some of the other tests involve reading and grammar skills.

Table 5 shows the mean scores of post-tests, estimated from ANCOVA (pre-test as covariate, i.e., control variable). The NFC test of motivation was also controlled. The interference group has stronger mean score (and BF favoring the alternative hypothesis) than the control group only for N-back hits-FA, while it has lower score on ANT-exec and ANT-orient. Other tests show no notable difference, with large BFs, usually greater than 3. The spatial WM group has a large advantage (over the controls) for the Shapebuilder test (the trained task of visuo-spatial WM), but no difference at all for all other tests. The combination group shows very strong advantage (over the controls) for N-back hits-FA and Shapebuilder, but has lower mean score on ANT-orient and Reading span. All other tests show similarity in mean score. In other words, the gains were only present for the tests that have been trained. No transfer effect. One interesting finding is that the combination group (having broader training) yields negative results. One related hypothesis would be that the broader the training experiment, the broader is the transfer effect. There is no strong evidence of this.

The role of individual differences in cognitive training and transfer

Jaeggi et al. (2014) studied a group having dual n-back (N=25), single n-back (N=26) and knowledge training (N=27). The latter group (an active control) had to solve GRE-type general knowledge, vocabulary questions. Mean age is 25. Participants are not paid, except those having completed only the pre-test. The examiners give 10 transfer tests. The 6 visual reasoning tests are the Raven APM, Cattell CFT, BOMAT, ETS Surface Development Test, ETS Form Board Test, DAT Space Relations. The 3 verbal reasoning tests are the ETS inferences, AFOQT reading comprehension, Verbal Analogies Test. The speed test is the Digit-Symbol Coding (WAIS). The authors pointed out a widespread problem in this kind of research. Individual tests are made short, and thus, the reliability is low, and the effect size is artificially reduced. Consequently, they have reported effect size (ES) with correction for unreliability.
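One standard psychometric way to correct an effect size for unreliability of the outcome measure is to divide it by the square root of the test's reliability. The sketch below shows that textbook correction; whether Jaeggi et al. (2014) used exactly this formula is an assumption, and the function name `disattenuate` and the input values are hypothetical.

```python
from math import sqrt

def disattenuate(effect_size, reliability):
    """Correct a standardized effect size for outcome unreliability."""
    if not 0 < reliability <= 1:
        raise ValueError("reliability must be in (0, 1]")
    return effect_size / sqrt(reliability)

# Hypothetical example: observed d = 0.30 on a short test with reliability 0.64.
corrected = disattenuate(0.30, 0.64)
print(round(corrected, 3))
```

The lower the reliability, the larger the upward correction, which is why shortened tests make corrected and uncorrected effect sizes diverge.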

Table 2 shows the mean scores and effect size (ES) as Cohen’s d for all groups and all tests in the pre- and post-test. In general, the single n-back training gained more than the dual n-back but in both groups, the gain is generally much larger than in the active control group. One interesting feature is the near absence of gain or advantage in verbal tests for the experimental groups. Regarding inferences, reading comprehension, and verbal analogies, respectively, the ES are 0.25, 0.04, 0.10 for dual n-back group, 0.02, 0.35, 0.00 for single n-back group, 0.47, -0.09, 0.26 for knowledge training group. The transfer effect is present only for fluid tests, not crystallized.

Table 3 shows mean score, reliability and effect size for each transfer test at each session in all 3 groups (N=17, 14, and 23), for people having complete data, i.e., with follow-up. The ES (pre vs follow-up) for BOMAT, CFT and DST, respectively, are 0.27, 0.44, 1.30 for dual n-back, 0.28, 0.30, 1.09 for single n-back, 0.02, 0.26, 0.76 for knowledge training. The ES (post vs follow-up) for BOMAT, CFT and DST, respectively, are 0.10, 0.35, 1.01 for dual n-back, -0.33, 0.32, 0.55 for single n-back, 0.23, 0.15, 0.18 for knowledge training. The pre vs follow-up is the most relevant comparison. The large gain is apparent.

They note (Figure 4) that belief in the malleability of intelligence affects the degree of transfer. This is irrelevant, since latent intelligence is not affected by attitude. Besides, Sprenger et al. (2013) reported no transfer effect and had controlled for this variable.

Working memory training improvements and gains in non-trained cognitive tasks in young and older adults

Heinzel et al. (2014) have a sample of 15/15 young people (age=26) and 15/15 old people (age=66) randomly assigned and split equally into training and (no-contact) control group. They receive €150 and €50, respectively. The experimentals took part in a 4-week adaptive n-back WM training program (3 sessions of 45 min. per week). Members of the training group appear somewhat more educated than members of the control group.

Figure 3 shows that the young people achieved a much higher level of difficulty (or score) in n-back over the sessions, compared to old people. Table 2 shows the effect sizes (in Cohen’s d) for the experimentals and controls in the battery of transfer tests consisting of two short-term memory (Digit span forward of WAIS, 1.33 vs 0.74; Digit span backward of WAIS, 1.96 vs 1.42), two episodic memory with 10 words to be recalled (CERAD immediate Recall, 1.26 vs 1.03; CERAD delayed Recall, 1.33 vs 1.12), one processing speed (Digit Symbol of WAIS within 1 min., 3.19 vs 1.98), one executive task (Verbal Fluency within 1 min., 1.58 vs 0.00), two fluid intelligence measures (Raven SPM within 7.5 min., 1.58 vs 0.20; Figural Relations subtest of the LPS within 3 min., 2.90 vs 3.29). These reported effect sizes concern the young people. There is a clear improvement in some tests, but the fact that the battery is so narrowly defined, extremely speeded, and the effect size inflated with the use of no-contact group makes this study very inconclusive.

II) Ambiguous findings.

Improved matrix reasoning is limited to training on tasks with a visuospatial component

Stephenson & Halpern (2013) examine a sample of college students randomly assigned (N=136, with 71 women and 65 men). They have not been paid. The training group receives the dual n-back (N=28). They have 3 active control groups: 2 groups for the WMC training regimen, namely, the visual n-back (N=29) and auditory n-back (N=25), and 1 group for the STM training regimen, spatial matrix span (N=28). Finally, the 5th group receives no training, and acts as a passive (no-contact) control (N=26). To assess far-transfer effect, they use 4 measures of Gf, namely, Raven APM (set II, 36 items within 10 minutes), Cattell CFT (form A, having four subtests, series (13), classifications (14), matrices (13), conditions (10), for a total of 50 items), the matrix reasoning subtest of WASI (29 items within 5 min.), and matrix reasoning subtest of the BETA-III (25 items within 5 min.). They also use 2 visuospatial ability tests, namely, Mental Rotation Test and Paper Folding Test, and 2 vocabulary tests, namely, the Extended Range Vocabulary Test and the Lexical Decision Test. Each participant completed 18 to 20 sessions (each lasting 20-22 minutes), 5 days a week, over 4 weeks.

Table 1 shows the mean scores of Gf tests. The effect size in each group can be calculated by taking the mean of post-test minus mean of pre-test and dividing the difference by the SD of pre-test score, and then compare the SD or d gain of each group. The dual group (d=0.69) shows better gains than visual (d=0.40) or auditory (d=0.39) or STM (d=0.54) group on Raven. The dual group (d=0.38) shows no better gains than visual (d=0.34) but clearly better than auditory (d=0.22) and STM (d=0.15) group on Cattell CFT. The dual group has a substantial improvement over all other groups on BETA subtest but not on the WASI subtest. The full result of my computations can be found here, along with the results presented in Table 2. There is a slight advantage (d~0.10) for dual groups versus all others on the Extended Range Vocabulary. For Lexical Decision, there is no gain in dual, a gain in visual (d=0.31) and negative effect size in other groups. For Mental Rotation Test, dual and visual groups have lower d values than other groups. For Paper Folding, the dual group outperforms the active control groups by 0.2 or 0.3 d gap. To summarize, the d gaps show an ambiguous behavior in all 3 cognitive domains.
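The comparison of d gains across groups can be laid out as a small computation. The sketch below takes the Raven and Cattell CFT d values quoted above and returns the gap between the dual n-back group and each other training group; the function name `d_gaps` is hypothetical.

```python
# d gains per group, as quoted above for Raven APM and Cattell CFT.
raven_d = {"dual": 0.69, "visual": 0.40, "auditory": 0.39, "stm": 0.54}
cattell_d = {"dual": 0.38, "visual": 0.34, "auditory": 0.22, "stm": 0.15}

def d_gaps(d_by_group, reference="dual"):
    """Gap in d between the reference group and every other group."""
    ref = d_by_group[reference]
    return {
        group: round(ref - d, 2)
        for group, d in d_by_group.items()
        if group != reference
    }

print("Raven:", d_gaps(raven_d))
print("Cattell CFT:", d_gaps(cattell_d))
```

Laying the gaps side by side makes the inconsistency visible: the group that trails the dual n-back group most on one test is not the same on the other.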

Curiously, the dual group has better mean scores at pre-test on Raven APM and Cattell CFT than all other groups. There might be some regression effect toward the mean for the dual group. If so, it will attenuate the improvement of dual groups versus the others. But that can’t explain the differential gain between the two tests. The authors try to explain (p. 355) why Cattell CFT has smaller gain than Raven despite their high correlation (0.69 in the current study). The reason is that CCFT is supposed, at least psychometrically, to measure g and not Gf exclusively. Besides, Jensen (1980, p. 650) tells us that the Cattell CFT (or CFI) is based on 4 different types of culture-reduced items, so that the specific factors are averaged out, making it a purer measure of Gf than is the Raven. Unlike the Cattell CFT, the Raven contains a matrix-specific factor. However, this pattern was not replicated in Savage (2013) and Jaeggi et al. (2014, Table 2).
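The regression effect can be illustrated with a small simulation in which nobody truly changes between pre-test and post-test, yet subjects scoring high at pre-test appear to lose and subjects scoring low appear to gain. All parameters (sample size, reliability of 0.7, seed) and the function name `simulate_retest` are arbitrary assumptions chosen for illustration.

```python
import random
from statistics import mean

def simulate_retest(n=10000, reliability=0.7, seed=1):
    """Return mean gains of the low and high pre-test halves.

    True scores are identical at both occasions; only the
    measurement error differs between pre-test and post-test.
    """
    rng = random.Random(seed)
    # Error SD chosen so that var(true) / var(observed) = reliability.
    error_sd = ((1 - reliability) / reliability) ** 0.5
    pairs = []
    for _ in range(n):
        true_score = rng.gauss(0, 1)
        pre = true_score + rng.gauss(0, error_sd)
        post = true_score + rng.gauss(0, error_sd)
        pairs.append((pre, post))
    pairs.sort(key=lambda pair: pair[0])  # order subjects by pre-test score
    half = n // 2
    low_gain = mean(post - pre for pre, post in pairs[:half])
    high_gain = mean(post - pre for pre, post in pairs[half:])
    return low_gain, high_gain

low_gain, high_gain = simulate_retest()
print(low_gain, high_gain)
```

Because the apparent loss of the high-scoring half comes entirely from measurement error, a group that starts higher at pre-test can show a smaller gain even when the intervention has the same true effect.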

Is Working Memory Training Effective? A Study in a School Setting

Rode et al. (2014) evaluate the effect of WM training on achievement tests. The experimental and control groups consist of 156 (six classrooms) and 126 children (five classrooms), respectively. The control group is no-contact, thus any positive effect can be exaggerated. All are randomly assigned. There is no evidence they have been paid. The computerized training task is given in a game-like format, and involves a series of numbers to memorize (storage) interspersed with math tasks (processing) at increasing levels of complexity. There were on average 17 sessions. The training was delivered in the school context during normal class time, while students in the control condition experienced regular classroom activities. The test scores are the subtests Reading Comprehension and Mathematical Reasoning of the Wechsler Individual Achievement Test–II, the reading fluency and math of the Easy Curriculum-Based Measurement (CBM-Read, and CBM-Math), and the Automated Working Memory Assessment (AWMA), but the last test was given only to a subsample of 36 experimentals and 30 controls.

Because the children are from different schools, the authors employ mixed linear modeling. The pre-test/post-test difference is estimated as a within-subject fixed effect, and the experimental condition as a subject-level fixed effect. They include the interaction between these two predictors. They also include the pre-test/post-test contrast as a random effect, and they allow intercepts and coefficients for random effects to vary both across schools and across children within schools.
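The term of interest in such a model is the interaction between time (pre/post) and condition. As a deliberately simplified sketch, and not the authors' mixed model: in an ordinary regression with 0/1 coding for time and condition and no random effects, the interaction coefficient is simply the gain of the experimental group minus the gain of the control group. The function names and scores below are hypothetical.

```python
from statistics import mean

def gain(pre, post):
    """Mean pre-to-post change within one group."""
    return mean(post) - mean(pre)

def time_by_condition_interaction(exp_pre, exp_post, ctl_pre, ctl_post):
    """Difference in gains: what the time x condition term estimates
    in a plain 2x2 regression (random effects are ignored here)."""
    return gain(exp_pre, exp_post) - gain(ctl_pre, ctl_post)

# Hypothetical scores, for illustration only.
exp_pre, exp_post = [20, 22, 25, 27], [23, 24, 28, 29]
ctl_pre, ctl_post = [21, 23, 24, 28], [22, 25, 25, 30]

interaction = time_by_condition_interaction(exp_pre, exp_post, ctl_pre, ctl_post)
```

The mixed model adds random intercepts and slopes for schools and children on top of this, which mainly affects the standard errors rather than the meaning of the interaction term.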

Figure 3 shows the raw score difference, estimated by the linear mixed regression model. Of utmost importance is that the effect sizes for WIAT math (d=0.05) and reading (d=0.17), CBM math (d=0.26) and reading (d=-0.15), and AWMA (d=0.24) show no clear-cut evidence of an improvement of the experimental group over the control group. If we exclude the AWMA (which is not an achievement test but a WM test), the benefit of the experiment is evident for only 2 tests. Figure 4 shows the effect sizes by transfer tasks, along with the expected effect sizes based on average improvement in the training task and the strength of the relation between training task performance (assessed during the first session) and each transfer measure at pre-test. The observed effect sizes did not match the expected effect sizes. Expressed as a correlation, the similarity between expected and observed gain profiles was r=.02. Figure 5 shows the relation between the gain in training tests and the gain in transfer tests. If WM training is effective, the expectation is that the higher a person’s learning rate, the higher that person’s transfer gain. But the values of each transfer test do not correspond with the pattern of gains in the training tests. Finally, the correlation between scores in the transfer tests and training gains is positive, which indicates that persons with higher achievement scores have larger gains on the WM task.

III) Positive findings.

Working memory training: improving intelligence - Changing brain activity

Jaušovec & Jaušovec (2012) use a sample of 29 psychology students (mean age 20), taking a course in educational psychology. The participants have been assigned to the WM group (n=14) and the active control group (n=15) equalized with respect to gender and intelligence. This prevents any possible regression effects. The subjects were informed about the goal of the experiment – to improve intelligence with training. They were further told that the training will aim at improving working memory (WM group) or components of emotional intelligence (control group). They were given a partial course credit (40%) for participating in the research. The WM group has been trained on n-back and WM maintenance tasks. The control group has been trained in communication and social skills. On average, they complete 30 hours of training.

The 4 transfer tests consist of Digit Span from WAIS-R, Raven APM (50 items), Paper Folding & Cutting (25 items) from Stanford-Binet, and the crystallized subtest Verbal Analogies (25 items) from BTI. In the WM group, the easy (25 items) and difficult (25 items) portions of Raven have undergone similar improvements. My calculation of the effect sizes is available here. The advantage of the WM group over the control group, in terms of effect size, is large for digit span (0.514 vs 0.194), Raven (0.588 vs 0.033), paper folding (0.355 vs 0.133) and verbal analogies (0.506 vs 0.144). The outcome is very encouraging, although it lacks a follow-up analysis and an even broader test battery that includes tests that do not concern either fluid/speed/visuospatial ability or the kind of abilities (e.g., similarities and analogies) elicited by Raven-like tests.
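To make the size of the WM group's advantage explicit, the d gaps can be computed directly from the effect sizes quoted above. The snippet below only subtracts the control d from the WM d for each test; the variable names are mine.

```python
# (WM group d, control group d), as quoted in the paragraph above.
effect_sizes = {
    "digit span": (0.514, 0.194),
    "Raven APM": (0.588, 0.033),
    "paper folding": (0.355, 0.133),
    "verbal analogies": (0.506, 0.144),
}

# d gap = gain of the WM group minus gain of the active control group.
gaps = {test: round(wm - control, 3) for test, (wm, control) in effect_sizes.items()}

for test, gap in gaps.items():
    print(f"{test}: d gap = {gap:.3f}")
```

The gaps come out at 0.320, 0.555, 0.222 and 0.362 respectively, so the advantage is largest on Raven and smallest on paper folding.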

IV) Meta-analyses.

Is Working Memory Training Effective? A Meta-Analytic Review

Melby-Lervåg & Hulme (2012) conducted a meta-analysis. They excluded some studies for failing to meet adequate methodological criteria (pp. 4-5), notably because they lacked any control group. Among other problems, regression to the mean, i.e., the tendency of a low-scoring subject to perform better at post-test and of a high-scoring subject to perform worse at post-test, also has to be dealt with by randomizing the assignment to the different study groups. That said, for the studies included in their meta-analysis, they found an overall effect size ranging from 0.16 to 0.23, which means that the difference between the treated and untreated groups is relatively small. A moderator analysis (Table 2) reveals, however, d values of 0.04 for studies using randomization procedures and 0.00 for those using active (i.e., treated) control groups, concerning the immediate effect on nonverbal abilities. Here are the findings :

Immediate Effects of Working Memory Training on Far-Transfer Measures

Nonverbal ability. Figure 6 shows the 22 effect sizes comparing the pretest–posttest gains between working memory training groups and control groups on nonverbal ability (N training groups = 628, mean sample size = 28.54, N controls = 528, mean sample size = 24.0). The mean effect size was small (d = 0.19), 95% CI [0.03, 0.37], p = .02. The heterogeneity between studies was significant, Q(21) = 39.17, p < .01, I² = 46.38%. The funnel plot indicated a publication bias to the right of the mean (i.e., studies with a higher effect size than the mean appeared to be missing), and in a trim and fill analysis, the adjusted effect size after imputation of five studies was d = 0.34, 95% CI [0.17, 0.52]. A sensitivity analysis showed that after removing outliers, the overall effect size ranged from d = 0.16, 95% CI [0.00, 0.32], to d = 0.23, 95% CI [0.06, 0.39].

Moderators of immediate transfer effects of working memory training to measures of nonverbal ability are shown in Table 2. There was a significant difference in outcome between studies with treated controls and studies with only untreated controls. In fact, the studies with treated control groups had a mean effect size close to zero (notably, the 95% confidence intervals for treated controls were d = -.24 to 0.22, and for untreated controls d = 0.23 to 0.56). More specifically, several of the research groups demonstrated significant transfer effects to nonverbal ability when they used untreated control groups but did not replicate such effects when a treated control group was used (e.g., Jaeggi, Buschkuehl, Jonides, & Shah, 2011; Nutley, Söderqvist, Bryde, Thorell, Humphreys, & Klingberg, 2011). Similarly, the difference in outcome between randomized and nonrandomized studies was close to significance (p = .06), with the randomized studies giving a mean effect size that was close to zero. Notably, all the studies with untreated control groups are also nonrandomized; it is apparent from these analyses that the use of randomized designs with an alternative treatment control group are essential to give unambiguous evidence for training effects in this field.

Long-Term Effects of Working Memory Training on Transfer Measures

Table 4 shows the total number of participants in training and control groups, the total number of effect sizes, the time between the posttest and the follow-up, and the mean difference in gain between training and control groups from the pretest to the follow-up. It is apparent that all these long-term effects were small and nonsignificant. The true heterogeneity between studies was zero for all variables, indicating that the results were consistent across the studies included here. The funnel plot with trim and fill analyses did not indicate any publication bias. As for the attrition rate, on average, the studies lost 10% of the participants in the training group and 11% of the participants in the control group between the posttest and the follow-up. Only one study with two independent comparisons reported long-term effects for verbal ability (E. Dahlin et al., 2008). For the younger sample in this study, with 11 trained and seven control participants, long-term effects for verbal ability was nonsignificant (d = 0.46) 95% CI [-0.45, 1.37]. For the older participants in this study (13 trained, seven controls), the long term effects were negative and nonsignificant (d = -0.08), 95% CI [-0.96, 0.80].

In summary, there is no evidence from the studies reviewed here that working memory training produces reliable immediate or delayed improvements on measures of verbal ability, word reading, or arithmetic. For nonverbal reasoning, the mean effect across 22 studies was small but reliable immediately after training. However, these effects did not persist at the follow-up test, and in the best designed studies, using a random allocation of participants and treated controls, even the immediate effects of training were essentially zero. For attention (Stroop task), there was a small to moderate effect immediately after training, but the effect was reduced to zero at follow-up.

Methodological Issues in the Studies of Working Memory Training

Several studies were excluded because they lack a control group, since as outlined in the introduction, such studies cannot provide any convincing support for the effects of an intervention (e.g., Holmes et al., 2010; Mezzacappa & Buckner, 2010). However, among the studies that were included in our review, many used only untreated control groups. As demonstrated in our moderator analyses, such studies typically overestimated effects due to training, and research groups who demonstrated transfer effects when using an untreated control group typically failed to replicate such effects when using treated controls (Jaeggi, Buschkuehl, Jonides, & Shah, 2011; Nutley, Söderqvist, Bryde, Thorell, Humphreys, & Klingberg, 2011). Also, because the studies reviewed frequently use multiple significance tests on the same sample without correcting for this, it is likely that some group differences arose by chance (for example, if one conducts 20 significance tests on the same data set, the Type 1 error rate is 64% (Shadish, Cook, & Campbell, 2002)). Especially if only a subset of the data is reported, this can be very misleading.

Finally, one methodological issue that is particularly worrying is that some studies show far-transfer effects (e.g., to Raven’s matrices) in the absence of near-transfer effects to measures of working memory (e.g., Jaeggi, Buschkuehl, Jonides, & Perrig, 2008; Jaeggi et al., 2010). We would argue that such a pattern of results is essentially uninterpretable, since any far-transfer effects of working memory training theoretically must be caused by changes in working memory capacity. The absence of working memory training effects, coupled with reliable effects on far-transfer measures, raises concerns about whether such effects are artifacts of measures with poor reliability and/or Type 1 errors. Several of the studies are also potentially vulnerable to artifacts arising from regression to the mean, since they select groups on the basis of extreme scores but do not use random assignment (e.g., Holmes, Gathercole, & Dunning, 2009; Horowitz-Kraus & Breznitz, 2009; Klingberg, Forssberg, & Westerberg, 2002).
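Some of the figures quoted above can be checked with simple arithmetic. The sketch below assumes the standard definition of I² as (Q - df)/Q and assumes that the 20 significance tests are independent and each run at alpha = .05; the function names are mine and nothing here is taken from the authors' own computations.

```python
def i_squared(q, df):
    """Share of between-study variability beyond chance, in percent."""
    return max(0.0, (q - df) / q) * 100

def familywise_error(n_tests, alpha=0.05):
    """Probability of at least one false positive among independent tests."""
    return 1 - (1 - alpha) ** n_tests

# Nonverbal ability: Q(21) = 39.17, reported I² = 46.38%.
i2 = i_squared(39.17, 21)

# 20 tests on the same data set, reported Type 1 error rate of 64%.
fwe = familywise_error(20)

# Mean sample sizes across the 22 effect sizes (reported: 28.54 and 24.0).
mean_n_training = 628 / 22
mean_n_control = 528 / 22

print(f"I² = {i2:.1f}%, familywise error = {fwe:.0%}")
```

The I² obtained from the rounded Q value is about 46.4%, in line with the reported figure, and the familywise error rate is about 64%, which is why uncorrected multiple testing on one sample is so hazardous.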

3. Why IQ gains are unlikely.

One should first ask the following question. If intensive educational programs lasting 10 years or more succeed in improving scholastic achievement but fail to improve intelligence, and in particular g, why would working memory training over just a few weeks (or months) result in huge gains, even larger than those of educational programs ?

What is needed is an examination of the effect of score gains on real-world criteria (e.g., scholastic or job performance). If higher IQ is associated with better social outcomes, there is no reason why score gains won’t be associated with these variables, unless the score gains are hollow. Of course, the gains need to be strong enough, or the association won’t be easily detected.

But the best way to know if there is any transfer effect is to administer chronometric tests, i.e., elementary cognitive tasks (ECTs). For instance, the Flynn effect shows large gains on every kind of cognitive test, and is greater on fluid tests than on crystallized tests. But ECTs such as reaction time or inspection time don’t show this effect.

Other studies that attempt to evaluate the impact of cognitive training (but don’t involve working memory as per Jaeggi’s recommendation) don’t find any relationship with g. For example, te Nijenhuis et al. (2007) re-analyze the Skuy et al. (2002) study in which black, white, Indian and colored participants were given the Mediated Learning Experience (MLE) and the Raven SPM. The authors correlated the RSPM gains with the item g-loadings and found a negative correlation, probably attenuated by the low reliability of individual items. They also found a decline, in the post-test scores, of the total variance explained by the first unrotated factor (or g), which is likely due to the higher test sophistication induced by the MLE. Previously, Skuy et al. (2002, Figure 3) had found that those gains on the RSPM do not generalize to other tests that require abstract thinking (e.g., Stencil), and thus again are not g-loaded, even though the black South African students improved more than the white students did. One possible reason for this is that g is quite heritable and relatively stable (Beaver et al., 2013) during the developmental period.
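The logic of correlating score gains with g-loadings can be sketched as follows. This is only a toy illustration of the idea, not a reproduction of te Nijenhuis et al.'s analysis: the loadings and gains are made-up numbers, and the function name is mine.

```python
from math import sqrt

def pearson_r(x, y):
    """Plain Pearson correlation between two equal-length vectors."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    ssx = sum((a - mx) ** 2 for a in x)
    ssy = sum((b - my) ** 2 for b in y)
    return cov / sqrt(ssx * ssy)

# Hypothetical item-level data, for illustration only:
# one g-loading and one pre-to-post gain per item.
g_loadings = [0.30, 0.45, 0.60, 0.75]
item_gains = [0.50, 0.35, 0.40, 0.15]

r = pearson_r(g_loadings, item_gains)
print(f"correlation between gains and g-loadings: {r:.2f}")
```

A negative r means that the gains are concentrated on the items that measure g the least, which is the pattern expected when the improvement is test-specific rather than a gain in g.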